Mastering data transformation is essential to building successful Mule applications. DataWeave, one of the most critical features within the MuleSoft ecosystem, enables powerful and flexible data transformation, appearing in approximately 90% of Mule Flows.

However, its complexity – combined with being a proprietary MuleSoft language – presents significant challenges, even for experienced developers. Mapping complex data structures and writing accurate DataWeave scripts can be time-consuming and error-prone, especially for less technical users who must navigate intricate data formats. These challenges not only hinder developer productivity but also slow down integration processes.

To tackle this challenge, the new AI-powered feature automatically maps fields based on metadata and generates precise transformations from user-provided sample data, greatly enhancing developer productivity and accelerating integration processes.

The solution focuses on two key goals that improve the user experience and help users overcome the data transformation learning curve, so they get the most value from DataWeave:

- Intelligent script generation for automated field mapping: Automatically generate scripts using provided input and output metadata, leveraging field names and types to create straightforward mapping logic. The script handles direct field name matches, simple functional transformations, and basic calculations intuitively. Fields that cannot be auto-mapped are left blank for users to complete manually.

- Intelligent script generation for sample data-driven transformation: Generate scripts that precisely capture the intended transformations by analyzing detailed input and output samples provided by the user. These scripts deliver clear, readable logic that shows exactly how input fields are transformed, aggregated, and manipulated to produce the desired output.

Intelligent script generation for automated field mapping

The field mapping pipeline ingests metadata and format details from the input payload, attributes, variables, and expected output. Designed for simplicity and efficiency, the pipeline passes this metadata, along with specific instructions, to an augmentor. Then, an LLM processes this information to generate a field mapping script based on the structure and semantics of field names and types. The result is a DataWeave script that maps fields through a combination of direct matches, logical inferences, and basic transformations.

However, because metadata alone often lacks the context needed to fully capture user intent, the generated script may require refinement. For example, if the output metadata includes a field named “total number,” the model cannot reliably determine how to compute it without additional contextual clues from the input data.
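
The metadata-plus-instructions step described above can be pictured as plain prompt assembly. The following is a minimal sketch, not the actual augmentor: the function name `build_mapping_prompt`, the section labels, and the instruction text are all hypothetical, and the real prompt layout is not described in this article.

```python
import json


def build_mapping_prompt(input_metadata: dict, output_metadata: dict, instructions: str) -> str:
    """Assemble one prompt string from metadata and instructions (hypothetical layout)."""
    sections = [instructions.strip()]
    # One section per input source: payload, attributes, variables.
    for source, schema in input_metadata.items():
        sections.append(
            f"Input {source} metadata:\n{json.dumps(schema, indent=2, sort_keys=True)}"
        )
    sections.append(
        f"Expected output metadata:\n{json.dumps(output_metadata, indent=2, sort_keys=True)}"
    )
    return "\n\n".join(sections)


# Illustrative metadata only; field names are made up for this sketch.
prompt = build_mapping_prompt(
    input_metadata={
        "payload": {"type": "object", "properties": {"address": {"type": "string"}}},
        "variables": {"type": "object", "properties": {"region": {"type": "string"}}},
    },
    output_metadata={"type": "object", "properties": {"location": {"type": "string"}}},
    instructions="Generate a DataWeave script that maps input fields to output fields.",
)
```

Because only names and types reach the model in this step, a field such as “total number” carries no hint about how it should be computed, which is the limitation noted above.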

Here is an example of metadata in the “application/json” format:

    {
      "type": "object",
      "properties": {
        "address": {
          "type": "string"
        },
        "region": {
          "type": "string"
        }
      },
      "required": [
        "address",
        "region"
      ]
    }

Here is an example of a generated DataWeave script:

    %dw 2.0
    output application/json
    import * from dw::core::Objects
    ---
    {
      "fullName": vars.userInfo.name,
      "emailAddress": payload.user.contact.email,
      "location": vars.userInfo.location
    }

Intelligent script generation for sample data-driven transformation

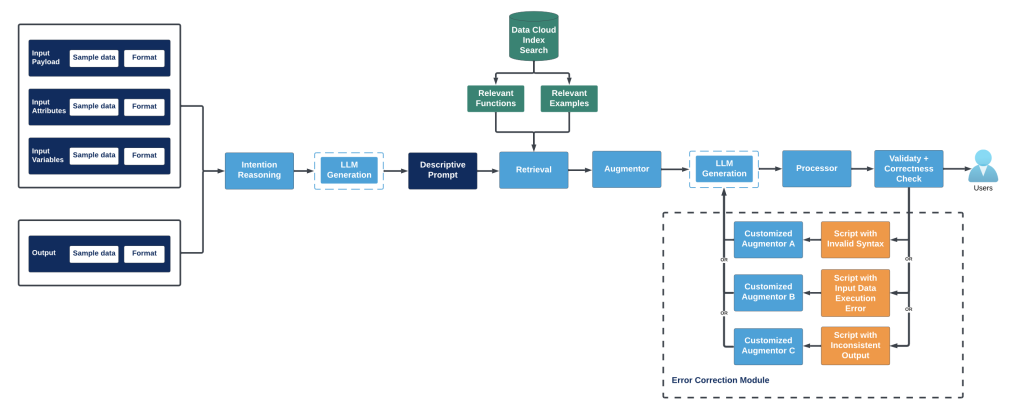

Unlike the metadata used in the automated field mapping pipeline, the sample data provides more detailed and comprehensive information on how each field should be transformed, facilitating the generation and evaluation of a more accurate DataWeave transformation script. To further enhance the quality of the generated script, we employ advanced AI techniques, such as retrieval and error correction, to ground the generation process and improve overall accuracy.

Input information

The transformation pipeline takes as input the user-provided sample data and formats of the input payload, input attributes, input variables, and output.

Here is an example of sample data in the “application/json” format:

    {
      "user": {
        "firstName": "John",
        "lastName": "Doe",
        "year_of_birth": 1999,
        "contact": {
          "email": "john.doe@example.com",
          "phone": "+1-555-123-4567"
        }
      }
    }

Intention reasoning

Input data is first passed to an intention reasoning module, which uses the LLM to infer the functions or operations needed for the transformation, based on the input and output sample data.

Since the sample information primarily focuses on field values without providing high-level descriptions of the transformation process, it becomes less effective for retrieval. For example, a user may use any names, such as “John” and “Tracy,” to demonstrate the intent to concatenate the first and last names and convert them to uppercase. However, these specific values introduce significant variation in embeddings without conveying the overarching transformation logic.

To address this, an intention reasoning module powered by an LLM is employed to summarize the user’s overarching intent into a few concise natural language queries. This distilled summary serves as a roadmap, enabling the system to identify and retrieve relevant functions and examples that align with the user’s underlying goals.

For example, a possible reasoning prompt could look like this: the ‘fullName’ should be the concatenation of ‘firstName’ and ‘lastName’ from the input payload, and the ‘upper()’ function should be applied.

Retrieval and augmentation

Now the generated prompt from the reasoning module can be used for retrieval. The augmentor leverages the vector and keyword indexes built in the data cloud to retrieve relevant information and enrich the instruction before sending it to the Einstein Gateway.

Two major types of information are stored in the retrieval database:

- DataWeave function information: Extracted from the MuleSoft DataWeave website. Each unit contains the function name, package name, function description, one example, and its output.

| DataWeave function | Value |
|---|---|
| Package | dw::core::Arrays |
| Function* | countBy |
| Description* | Counts the elements in an array that return true when the matching function is applied to the value of each element. |
| Example | `%dw 2.0`<br>`import * from dw::core::Arrays`<br>`output application/json`<br>`---`<br>`{ "countBy" : [1, 2, 3, 4] countBy (($ mod 2) == 0) }` |
| Output | `{ "countBy": 2 }` |

- DataWeave examples: Provided by the labeling team with the assistance of AI. Each unit includes the name, dw_script, format, metadata, and sample data information for input payloads, attributes, variables, and output data. Additionally, instructions and explanations for the transformation script are included, which can be used for retrieval.

| DataWeave example | Sub-fields |
|---|---|
| ID | |
| Name | |
| dw_script | |
| Instruction* | |
| Explanation | |
| Input payload | format, metadata, sample data |
| Input attributes | format, metadata, sample data |
| Input variables | format, metadata, sample data |
| Output | format, metadata, sample data |

The fields with asterisks* in the two tables are vectorized using a robust embedding model and stored in a retrieval database. When the intention reasoning module generates a prompt, it’s vectorized into a high-dimensional representation using the same embedding model. This vector is then compared against the vectors of DataWeave functions and examples to semantically retrieve the most relevant matches.
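
The vector comparison described above can be sketched in a few lines. This is an illustration under stated assumptions, not the production retriever: `embed` is a toy bag-of-words stand-in for the real embedding model (which is not named in this article), the `upper` description is paraphrased for the example, and `retrieve` is a hypothetical helper.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, index: dict, top_k: int = 1) -> list:
    """Return the names of the top_k indexed entries most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda name: cosine(q, index[name]), reverse=True)
    return ranked[:top_k]


# Only the asterisked fields (function name and description) are vectorized.
docs = {
    "countBy": "countBy counts the elements in an array that return true "
               "when the matching function is applied",
    "upper": "upper returns the provided string in uppercase characters",
}
index = {name: embed(text) for name, text in docs.items()}

# The query and the documents go through the same embedding function.
best = retrieve("convert the concatenated string to uppercase", index)
```

Here `best` is `['upper']`. A learned embedding model would also match paraphrases that share no words with the description, which this word-overlap stand-in cannot do.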

LLM generation

Third-party and open-source LLMs are compared by splitting the dataset into training (for fine-tuning and retrieval) and testing (for evaluation and benchmarking). The final model selection is based on a balance of accuracy and cost-efficiency.

- Third-party models: Several third-party models, including OpenAI’s GPT-3.5 and GPT-4o Mini, were evaluated for their strong performance and cost-efficiency balance.

- Fine-tuned Mistral Nemo 12B model: Compared to third-party models, the main advantage of open-source models is that they incur no per-token usage charges, while offering greater flexibility for customization and control. For this exploration, we’ve selected Mistral Nemo 12B (Mistral-Nemo-Instruct-2407), a transformer-based model with 12 billion parameters released by Mistral AI in July 2024. This instruct-tuned version is trained on a large dataset that includes multilingual and code data. To enhance resource efficiency and speed up fine-tuning, we use Parameter-Efficient Fine-Tuning (PEFT) with QLoRA. This approach trains low-rank adaptation (LoRA) layers while preserving the pretrained knowledge of the base model. Additionally, QLoRA quantizes model parameters to 4-bit precision, significantly reducing GPU memory usage without sacrificing performance.
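
To make the memory argument for QLoRA concrete, here is a back-of-the-envelope calculation. It is a rough sketch only: it counts weight storage alone (ignoring quantization constants, activations, and optimizer state), and the 4096 x 4096 matrix and rank 16 are illustrative values, not the actual dimensions or settings used for Mistral Nemo.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    LoRA learns two low-rank factors: A (rank x d_in) and B (d_out x rank).
    """
    return rank * (d_in + d_out)


# A 12-billion-parameter model at 16-bit versus 4-bit precision.
base_16bit = weight_memory_gb(12e9, 16)  # 24.0 GB
base_4bit = weight_memory_gb(12e9, 4)    # 6.0 GB

# Illustrative layer: full fine-tuning versus a rank-16 LoRA adapter.
full = 4096 * 4096                      # 16,777,216 trainable weights
adapter = lora_params(4096, 4096, 16)   # 131,072 trainable weights
```

Under these assumptions, quantizing to 4 bits cuts weight storage to a quarter, and the adapter trains under 1% of the weights of the layer it modifies.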

Post-processor

The post-processor model extracts the DataWeave script and its explanation from the LLM-generated response, based on the DataWeave script anatomy outlined below.
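
As an illustration of this extraction step, the sketch below splits a response into script and explanation and checks the basic header, separator, and body layout of a DataWeave script. The response format is an assumption made for this example: it presumes the script starts at the `%dw` directive and the explanation follows a line starting with `Explanation:`. The function names are hypothetical.

```python
def split_response(response: str) -> tuple:
    """Split an LLM response into (script, explanation).

    Assumes (hypothetically) that the script begins at the '%dw' directive and
    that the explanation follows a marker reading 'Explanation:'.
    """
    start = response.find("%dw")
    if start == -1:
        raise ValueError("no DataWeave header found")
    marker = response.find("Explanation:", start)
    if marker == -1:
        return response[start:].strip(), ""
    script = response[start:marker].strip()
    explanation = response[marker + len("Explanation:"):].strip()
    return script, explanation


def has_header_and_body(script: str) -> bool:
    """Check for a '%dw' header, a '---' separator line, and a non-empty body."""
    lines = [line.strip() for line in script.splitlines()]
    if not lines or "---" not in lines:
        return False
    separator = lines.index("---")
    return lines[0].startswith("%dw") and any(lines[separator + 1:])


response = (
    "Sure, here is the script.\n"
    "%dw 2.0\n"
    "output application/json\n"
    "---\n"
    "{ fullName: upper(payload.user.firstName ++ \" \" ++ payload.user.lastName) }\n"
    "Explanation: Concatenates the first and last names and uppercases the result.\n"
)
script, explanation = split_response(response)
```

Text before the header, such as a conversational preamble, is discarded, and a script without a `---` separator fails the structural check before it reaches the validator.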

Validator and toxicity detection

Salesforce Einstein LLM Gateway provides several key metrics to help detect potentially toxic or biased responses, which can be leveraged to ensure safer generations. Any output that contains toxic or biased content, or fails the generation safety score, is automatically marked as invalid.

In addition to safety checks, thorough quality evaluation is conducted using two core metrics:

- Validity: The generated DataWeave script must compile successfully without syntax errors.

- Correctness: When the generated script is applied to the input sample data, the resulting output must match the expected output sample data.
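
The correctness metric can be illustrated for JSON outputs with a structural comparison. This is a sketch under assumptions: the article does not say whether outputs are compared structurally or as text, and `outputs_match` is a hypothetical helper. Checking validity requires a DataWeave compiler and is not shown.

```python
import json


def outputs_match(actual_json: str, expected_json: str) -> bool:
    """Compare two JSON documents by structure rather than by raw text.

    Object key order and whitespace are ignored; array order is significant.
    """
    return json.loads(actual_json) == json.loads(expected_json)


expected = '{"fullName": "JOHN DOE", "tags": ["a", "b"]}'
reordered = '{ "tags": ["a", "b"],\n  "fullName": "JOHN DOE" }'
wrong_case = '{"fullName": "John Doe", "tags": ["a", "b"]}'

same = outputs_match(reordered, expected)        # True
different = outputs_match(wrong_case, expected)  # False
```

Comparing parsed values avoids marking a script as incorrect only because its output is formatted or ordered differently from the sample.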

Error correction module

The error correction module is designed to enhance the overall performance by identifying and correcting invalid DataWeave scripts. These scripts can fall into three categories of errors:

- Scripts with invalid syntax

- Scripts with input data execution errors, where the input sample cannot be processed by the script despite the absence of syntax errors

- Scripts that produce an output that differs from the desired result (despite being syntactically valid and successfully processing the input data)

For each type of error, specific information is provided, and a custom augmentor tailored to address that particular error is constructed to facilitate error correction.

| Type of error | What to do | Information to provide |
|---|---|---|
| Incorrect scripts with invalid syntax | Since the validator provides error messages for syntax errors, we supply the model with the previously generated script and the corresponding syntax error message to help the model focus on fixing syntax issues. | (1) incorrectly generated script. (2) error message. |
| Incorrect scripts with input data execution error | To help the model understand the cause of the execution error, we should provide the complete input data. Additionally, the error message from the validator can offer valuable insights into the issue. | (1) incorrectly generated script. (2) error message. (3) input data. (4) desired output data. |
| Incorrect scripts with wrong executed output | Since the executed output from the incorrect DataWeave script is available, both this output and the desired output should be provided to the model. This allows the model to compare the two, analyze the differences, and infer the necessary adjustments to improve the previously generated script. | (1) incorrectly generated script. (2) executed output from the generated script. (3) input data. (4) desired output data. |
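
The table above can be read as a lookup from error type to the information the correction prompt should carry. The sketch below encodes that lookup. The error-type keys, field names, and prompt wording are hypothetical, and the actual augmentors are likely more elaborate.

```python
# Information to provide per error type, following the table above.
REQUIRED_FIELDS = {
    "syntax": ("script", "error_message"),
    "execution": ("script", "error_message", "input_data", "desired_output"),
    "wrong_output": ("script", "executed_output", "input_data", "desired_output"),
}


def build_correction_prompt(error_type: str, **info: str) -> str:
    """Build a correction prompt that contains only the fields this error type needs."""
    fields = REQUIRED_FIELDS[error_type]
    missing = [name for name in fields if name not in info]
    if missing:
        raise ValueError(f"missing information for {error_type}: {missing}")
    parts = [f"Fix the DataWeave script below ({error_type} error)."]
    parts += [f"{name}:\n{info[name]}" for name in fields]
    return "\n\n".join(parts)


syntax_prompt = build_correction_prompt(
    "syntax",
    script="%dw 2.0\noutput application/json\n---\n{ a: payload.b",
    error_message="(placeholder for the validator's syntax error message)",
    input_data='{"b": 1}',  # supplied but unused: syntax fixes do not need it
)
```

Leaving the sample data out of the syntax case keeps the prompt focused on the syntax issue, as the first table row describes, while the other two cases include both input and desired output so the model can reason about behavior.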

Simplifying data transformation with Generative AI in DataWeave

Mastering data transformation is essential for building efficient Mule applications, with DataWeave at the core of this process. By introducing intelligent, sample data-driven transformations and automated field mapping, this innovation accelerates development, minimizes errors, and empowers developers – including those with less technical expertise – to handle complex data structures with confidence. Ultimately, it enables users to unlock the full potential of DataWeave more easily and efficiently.