MuleSoft has long been a leader in helping enterprises build composable foundations through continuous innovations across integration, automation, and API management, with advancements like Universal API Management, DataWeave, and Anypoint Code Builder. Now, MuleSoft is further empowering these enterprises through their agentic and AI transformation, driving real-world actionability.

As part of this initiative, we’ve integrated new AI connectors, including the MuleSoft Inference Connector, seamlessly on the Anypoint Platform to provide a comprehensive and cohesive foundation for your digital transformation journey.

Connect, automate, and innovate with GPU-accelerated AI for your business data

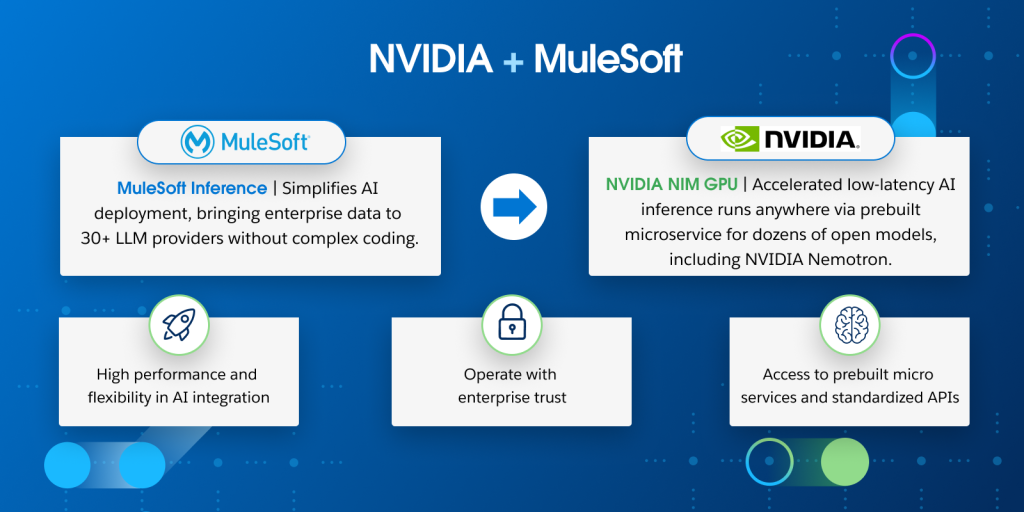

Unleash the full potential of your AI initiatives with a unified strategy, powered by MuleSoft’s collaboration with NVIDIA. By using NVIDIA NIM microservices with the MuleSoft Inference Connector, you can now seamlessly bridge your enterprise data to dozens of GPU-accelerated LLMs, including the open NVIDIA Nemotron models.

This partnership enables users to build and scale secure, production-grade AI applications anywhere, from cloud to data center, with the combined benefits of world-class integration and high-performance computing. Explore the Connector on Anypoint Exchange.

“Enterprises are looking for secure, scalable ways to bring AI to their data – wherever it resides. Through the NVIDIA NIM microservice integration with the MuleSoft Inference Connector, developers can connect applications to optimized models and deploy AI faster across cloud and data center infrastructure.”

Pat Lee, VP, Strategic Enterprise Partnerships, NVIDIA

The new foundation for enterprise AI

MuleSoft empowers customers to build and orchestrate intelligent, automated experiences by securely connecting their enterprise data to the most powerful AI models. Our integration of NVIDIA technology combines the power of our MuleSoft Inference Connector with NVIDIA NIM microservices, a set of high-performance AI microservices. By combining these innovations, we address a key challenge in enterprise AI: bridging the gap between complex business data and the potential of generative AI in a secure, scalable way.

What is the MuleSoft Inference Connector?

The MuleSoft Inference Connector, part of MuleSoft’s portfolio of AI connectors, is a secure and unified entry point to 30+ popular AI models and microservices like NVIDIA NIM and OpenAI. For developers, it abstracts away the complexity of diverse AI APIs, allowing them to use simple configurations to infuse generative AI into any application or workflow without being an AI expert.

What are NVIDIA NIM microservices?

NVIDIA NIM microservices provide pre-optimized models and industry-standard APIs for building powerful AI agents, co-pilots, chatbots, and assistants. NIM microservices make it simple to deploy GPU-accelerated AI on-premises, in the cloud, or on workstations, supporting low-latency and high-throughput results.

NVIDIA NIM supports hundreds of open models, including the NVIDIA Nemotron model family, which excels in graduate-level scientific reasoning, advanced math, coding, instruction following, tool calling, visual reasoning, and retrieval-augmented generation (RAG). Learn more about NVIDIA NIM and how to get started with NVIDIA NIM for LLMs.

The collaboration between MuleSoft and NVIDIA bridges the gap between complex enterprise systems and world-class, GPU-accelerated AI. By connecting MuleSoft with NVIDIA NIM, you can infuse high-performance AI directly into any workflow, transforming business processes without custom code or specialized AI expertise.

Accelerate your AI transformation with MuleSoft and NVIDIA NIM

Unlock new levels of productivity and innovation by bringing state-of-the-art AI to your data, wherever it resides. The MuleSoft Inference Connector, combined with NVIDIA NIM microservices, is engineered for maximum performance, choice, and control.

The Inference Connector is currently compatible with any NIM deployed in production, provided the following requirements and capabilities are met:

- Model type: Any LLM supported by NIM

- Authentication: Basic authentication with API keys

- Endpoints: Custom base URLs are supported, as long as the operation URI remains the same

- Payload: Custom request attributes can be passed in addition to the standard request payload supported by the connector
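To make these requirements concrete, here is a minimal Python sketch of the kind of HTTP request they describe. It is not the connector's implementation. It assumes the NIM endpoint exposes the OpenAI-compatible `/v1/chat/completions` route and accepts the API key as a bearer token; the host name, model ID, and the `build_nim_request` helper are hypothetical and only there for illustration.

```python
import json
import urllib.request

# Assumption: the NIM endpoint serves an OpenAI-compatible chat completions route.
# The base URL can change per deployment, but this operation path stays the same.
OPERATION_PATH = "/v1/chat/completions"


def build_nim_request(base_url, api_key, model, prompt, extra_attributes=None):
    """Build (but do not send) a chat completion request for a NIM endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    # Custom request attributes are merged on top of the standard payload.
    payload.update(extra_attributes or {})
    return urllib.request.Request(
        url=base_url.rstrip("/") + OPERATION_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: the API key is passed as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_nim_request(
    base_url="http://nim.example.internal:8000",  # hypothetical host
    api_key="YOUR_API_KEY",
    model="example-model-id",  # hypothetical model ID
    prompt="Summarize the open incidents from the last hour.",
    extra_attributes={"temperature": 0.2, "max_tokens": 256},
)
# Passing `request` to urllib.request.urlopen would send it; that call is
# omitted here so the sketch runs without a live endpoint.
```

In a Mule application, the equivalent values (base URL, API key, model, and any extra attributes) would be supplied through the connector configuration rather than hand-built as above.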

| Build Complete AI Agents Faster | Achieve High Performance and Flexibility | Operate With Enterprise Trust |
|---|---|---|
| MuleSoft’s Inference Connector provides the high-performance, lightweight gateway to NIM accelerated engines like NVIDIA TensorRT-LLM, giving you ultimate control over where your data and AI models run, without sacrificing speed. | MuleSoft’s hybrid runtime allows you to connect to systems anywhere. This is perfectly matched by the flexibility of NVIDIA NIM microservices, which can be deployed anywhere: cloud, data center, or on an NVIDIA RTX AI PC. | MuleSoft enforces security and governance across all AI integrations with trusted policies for access control and threat protection. Combined with enterprise-grade NIM microservices, secured and maintained by NVIDIA, your AI infrastructure is powerful, compliant, and fully controlled. |

Transform your business with intelligent automation

This powerful collaboration unlocks transformative, high-performance use cases across the enterprise. By using the MuleSoft Inference Connector, you can connect to specialized NVIDIA NIM AI capabilities and move beyond generic chatbots to build sophisticated, domain-specific solutions.

Intelligent IT operations (AIOps)

Using MuleSoft, connect real-time event logs from sources like Splunk and Datadog, then send that data via the Inference Connector to NVIDIA NIM to perform automated root cause analysis, summarize complex issues, and generate remediation code, drastically reducing resolution time.

Enterprise copilots and assistants

With MuleSoft orchestrating data from your CRM and ERP systems, you can build powerful custom copilots. The Inference Connector sends this rich contextual data to NVIDIA NIM, enabling advanced RAG-powered chatbots and domain-specific assistants for finance and HR.

Advanced RAG and knowledge discovery

Create a true knowledge engine by using MuleSoft to retrieve and prepare data from disparate sources like SharePoint and Salesforce. The Inference Connector, combined with MuleSoft’s Vector connector, then sends this curated context to NVIDIA NIM to query and synthesize information, powering complex analysis of legal documents or research papers with speed and accuracy.
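The retrieve-then-generate flow described here can be sketched in a few lines of Python. This is an illustration of the general RAG pattern, not of how the MuleSoft connectors work internally: the three-dimensional vectors, sample passages, and helper names are all made up for the example, and a real setup would get its embeddings and similarity search from an embedding model and a vector store.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector, documents, k=2):
    """Return the k documents whose vectors are closest to the query."""
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, doc["vector"]),
        reverse=True,
    )
    return ranked[:k]


def build_messages(question, passages):
    """Assemble chat messages that ground the model in the retrieved passages."""
    context = "\n\n".join(f"[{p['source']}] {p['text']}" for p in passages)
    return [
        {"role": "system",
         "content": "Answer using only the context provided.\n\n" + context},
        {"role": "user", "content": question},
    ]


# Hypothetical, hand-written passages and toy embeddings for illustration only.
documents = [
    {"source": "SharePoint", "vector": [0.9, 0.1, 0.0],
     "text": "The master services agreement renews annually."},
    {"source": "Salesforce", "vector": [0.1, 0.9, 0.0],
     "text": "The account owner is the EMEA sales team."},
    {"source": "SharePoint", "vector": [0.8, 0.2, 0.1],
     "text": "Termination requires 60 days written notice."},
]
query_vector = [1.0, 0.0, 0.0]  # stands in for the embedded user question

passages = top_k(query_vector, documents, k=2)
messages = build_messages("When does the contract renew?", passages)
```

The resulting `messages` list is the curated context that would be sent to the model as the chat payload; only the two closest passages are included, which keeps the prompt small and focused.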

Developer and content acceleration

Boost productivity by connecting your CI/CD tools to NVIDIA NIM through MuleSoft. The Inference Connector can send code snippets for analysis and debugging, or pull product data from your PIM system to have an LLM NIM automatically generate marketing copy and technical documentation.

The power of the platform: A collaboration for a new era of AI

This collaboration combines the best of enterprise integration with world-class AI performance to create a solution that is greater than the sum of its parts.

MuleSoft provides the foundation for enterprise AI. MuleSoft unlocks and prepares your business-critical data from any system. Our MuleSoft AI Connectors, including the Inference and Vector connectors, provide the integration fabric to securely build, govern, and manage the entire lifecycle of your AI agents.

NVIDIA delivers world-class AI performance. NVIDIA NIM supercharges this foundation by providing access to a broad range of community and custom fine-tuned models. These are packaged as secure, pre-built microservices and optimized with accelerated engines for high performance on NVIDIA GPUs.

Together, they provide a secure, scalable, and high-performance solution for embedding custom AI into the heart of your business operations.

The future of AI is now – build it on MuleSoft with NVIDIA

Don’t just talk about AI – deploy it where you need it. The MuleSoft Inference Connector with NVIDIA NIM empowers your organization to build smarter, faster, and more responsive business processes using the best of enterprise integration and GPU-accelerated AI. Explore the Inference Connector on MuleSoft Anypoint Exchange and start your journey toward intelligent automation.

Editor’s note: This article was collaboratively written by Varun Maker, Sonya Wach, and Nivetha Vijayasanan.