Hi all, in this post I want to introduce how we are thinking about integration patterns at MuleSoft. A pattern is the most logical sequence of steps for solving a generic problem. Like hiking trails, patterns are discovered and established through use. Patterns always come in degrees of perfection, with plenty of room to optimize or adapt them to the business need at hand. An integration application is made up of a pattern and a business use case. You can think of the business use case as an instantiation of the pattern, a use for the generic process of data movement and handling. In thinking about creating templates, we first had to establish the base patterns, or best practices, to make templates atomic, reusable, extensible, and easily understandable.

When describing a simple, atomic, point-to-point integration, one can use the following structure to communicate what a Mule application does:

Source system to Destination system – Object – Pattern

For example, you may have something like:

- Salesforce to Salesforce – Contact – Migration

- Salesforce to Netsuite – Account – Aggregation

- SAP to Salesforce – Order – Broadcast

In this mini-series of posts, I will walk through the five basic patterns that we have discovered and defined so far. I am sure that there are many more, so please leave a comment below if you have any ideas on additional patterns that may be valuable to provide templates for.

Pattern 1: Migration

What is it?

Migration is the act of moving a specific set of data at a point in time from one system to another. A migration involves a source system where the data resides prior to execution, criteria that determine the scope of the data to be migrated, a transformation that the data set will go through, a destination system where the data will be inserted, and a way to capture the results of the migration so you can compare the final state with the desired state. Just to disambiguate, I am referring to the act of migrating data, rather than application migration, which is the act of moving functional capabilities between systems.

Why is it valuable?

Migrations are essential to any data system and are executed extensively in any organization that has data operations. We spend a lot of time creating and maintaining data, and migration is key to keeping that data independent of the tools we use to create, view, and manage it. Without migration, we would lose all the data we have amassed any time we wanted to change tools, and this would cripple our ability to be productive in the digital world.

When is it useful?

Migrations will most commonly occur whenever you are moving from one system to another, moving from an instance of a system to another or newer instance of that system, spinning up a new system which is an extension to your current infrastructure, backing up a dataset, adding nodes to database clusters, replacing database hardware, consolidating systems, and many more.

What are the key things to keep in mind when building applications using the migration pattern?

Here are some key considerations to keep in mind:

Triggering the migration app:

It is a best practice to create services out of any functionality that will be shared across multiple people or teams. Usually data migration is handled via scripts, custom code, or database management tools that developers or IT operations create. One neat way to use our Anypoint Platform is to capture your migration scripts or apps as integration apps. The benefit is that you can easily add an HTTP endpoint to initiate the application: if you kick off the migration by hitting a URL, you get a response in your browser, and if you kick it off programmatically, you can get back JSON, or any type of response you configure, and do additional steps with it. You can also take our migration templates to the next level by turning the configuration parameters into parameters that you pass in the API call. This means that you can build a service that migrates your Salesforce account data on command, or expose an API that grabs a scoped dataset and moves it to another system. Having services for common migrations can go a long way in saving both your development team and your operations team a lot of time.
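To make the idea of passing configuration in the triggering call concrete, here is a minimal Python sketch. It is not Mule code and not how our templates are implemented; the `/migrate` path, the `object` and `since` parameter names, the default of `Account`, and the `run_migration` function are all hypothetical.

```python
import json
from urllib.parse import urlparse, parse_qs


def run_migration(object_type, since=None):
    """Hypothetical stand-in for the real migration; returns a summary dict."""
    return {"object": object_type, "since": since, "status": "started"}


def handle_trigger(url):
    """Turn a triggering URL into a migration call and a JSON response body."""
    parsed = urlparse(url)
    if parsed.path != "/migrate":
        return json.dumps({"error": "unknown endpoint"})
    params = parse_qs(parsed.query)
    # parse_qs returns a list of values per key; take the first of each.
    object_type = params.get("object", ["Account"])[0]
    since = params.get("since", [None])[0]
    return json.dumps(run_migration(object_type, since))


if __name__ == "__main__":
    print(handle_trigger("http://localhost:8081/migrate?object=Contact&since=2014-01-01"))
```

A person hitting the URL in a browser and a program calling it both get the same JSON back, which is what makes the migration usable as a shared service.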

Pull or push model:

In general, you have two options for how to design your application. With a migration, you will usually want to execute it at a specific point in time, and you want it to process as fast as possible. Hence, you will probably want to use a pull mechanism, which is the equivalent of saying “let the data collect in the origin system until I am ready to move it; once I am ready, pull all the data that matches my criteria and move it to the destination system.” Another way to look at the problem is to say “once a specific event happens in the origin system, push all the data that matches specific criteria to a processing app that will then insert it into the destination system.” The issue with this latter approach is that the origin system needs an export function that is triggered to push the data, and it needs to know where the data will be pushed to, which implies programming this functionality into the origin application. Hence it is much simpler to use a pull (query) approach in a migration, because the application pulling the data out, such as a Mule application, is much easier to create than modifying each origin system that will be used in migrations.
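The pull approach can be sketched in a few lines of Python. This is an illustration only, under the assumption that the origin system exposes nothing more than a query capability; `InMemorySystem` and `pull_migration` are hypothetical names, not part of any MuleSoft API.

```python
class InMemorySystem:
    """Hypothetical stand-in for a source or destination system."""

    def __init__(self, records=None):
        self.records = list(records or [])

    def query(self, matches):
        """Return every record for which the `matches` predicate is true."""
        return [r for r in self.records if matches(r)]

    def insert(self, record):
        self.records.append(record)


def pull_migration(source, destination, matches):
    """Pull the matching records out of `source` and insert them into `destination`."""
    pulled = source.query(matches)
    for record in pulled:
        destination.insert(record)
    return len(pulled)


if __name__ == "__main__":
    source = InMemorySystem([
        {"name": "Acme", "country": "US"},
        {"name": "Globex", "country": "DE"},
        {"name": "Initech", "country": "US"},
    ])
    destination = InMemorySystem()
    moved = pull_migration(source, destination, lambda r: r["country"] == "US")
    print(moved, "records migrated")
```

Note that all of the migration logic lives in `pull_migration`; the origin system is only queried and needs no knowledge of the destination.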

Scoping the dataset:

One of the major problems with accumulating data is that, over time, data with varying degrees of value piles up. A migration, like moving house, is a perfect time to clean up shop, or at least to move only the valuables to the new destination. The issue is that the person executing the migration usually does not know which data is valuable and which is a waste of bits. The usual solution is to just move everything, which increases the scope of the migration in an effort to preserve structures, relationships, and objects that may turn out to be worthless. As the person executing the migration, I would recommend that you work with the data owner to scope down the set of data that should be migrated. If you use a Mule application, like the ones provided via our templates, you can simply write a query in the connector that specifies the object criteria and which fields will be mapped. This can reduce the amount of data in terms of number of records, objects, object types, and fields per object, saving you space, design, and management overhead. The other nice thing about making it a Mule application is that you can create a variety of inbound flows (the ones that trigger the application), or parameterize the inputs, so that the migration application behaves differently based on the endpoint that was called to initialize it or on the parameters provided in that call. You can use this as a way to pass in the scoping of the data set, or to behave differently based on the scope provided.
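As a small illustration of scoping by query, the Python sketch below assembles a SQL-style query from an object name, a list of fields, and optional filter conditions. The `build_scoped_query` helper and the example field names are hypothetical, and the conditions are assumed to come from a trusted source (the sketch does no escaping).

```python
def build_scoped_query(object_name, fields, conditions=()):
    """Build a SELECT statement limited to the given fields and conditions."""
    query = "SELECT {} FROM {}".format(", ".join(fields), object_name)
    if conditions:
        # All conditions must hold for a record to be in scope.
        query += " WHERE " + " AND ".join(conditions)
    return query


if __name__ == "__main__":
    print(build_scoped_query(
        "Account",
        ["Id", "Name", "Website"],
        ["BillingCountry = 'US'", "IsDeleted = false"],
    ))
```

Passing the fields and conditions in as arguments is what lets the same migration behave differently depending on the parameters supplied when it is triggered.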

Snapshots:

Since migrations are theoretically executed at a point in time, any data created after execution will not be brought over. To synchronize the delta (the additional information), you can use a broadcast pattern, something I will talk about in a future post. It's important to keep in mind that data can still be created while the migration is running unless you prevent that, or create a separate table where new data will be stored. Generally, it is best practice to run migrations while the database is offline. If it's a live system that cannot be taken offline, you can always use the last modified time (or some equivalent for the system you are using) in the query to grab all the data that was created since the migration was executed.
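Picking up the delta by last modified time might look like the following Python sketch. The `last_modified` field name and the `records_changed_since` helper are assumptions made for illustration; a real system will have its own timestamp field and query syntax.

```python
from datetime import datetime, timezone


def records_changed_since(records, last_run):
    """Return the records whose last-modified timestamp is after `last_run`."""
    return [r for r in records if r["last_modified"] > last_run]


if __name__ == "__main__":
    # Time at which the original migration was executed (example value).
    last_run = datetime(2014, 1, 1, tzinfo=timezone.utc)
    records = [
        {"id": 1, "last_modified": datetime(2013, 12, 31, tzinfo=timezone.utc)},
        {"id": 2, "last_modified": datetime(2014, 1, 2, tzinfo=timezone.utc)},
    ]
    for record in records_changed_since(records, last_run):
        print("needs migrating:", record["id"])
```

Using timezone-aware timestamps on both sides avoids comparing times recorded in different zones, which would otherwise silently drop or duplicate records around the cutoff.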

Transforming the dataset:

Apart from a few exceptions, such as data backup/restore or migrating to new hardware, you will usually want to modify the formats and structures of data during a migration. For example, if you are moving from an on-premise CRM to one in the cloud, you will most likely not have an exact mapping between the source and destination. Using an integration platform like Mule is beneficial in that you can easily see and map the transformations with components like DataMapper. You may also want to use different mappings based on the data objects being migrated, so having the flexibility to build logic into the migration can be very beneficial, especially if you would like to reuse it in the future.
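To illustrate choosing a mapping per object type, here is a minimal Python sketch. It is not DataMapper; the `FIELD_MAPPINGS` table, the source field names, and the `transform` function are hypothetical.

```python
# Hypothetical mappings: source field name -> destination field name,
# with one mapping per object type being migrated.
FIELD_MAPPINGS = {
    "Contact": {
        "first_name": "FirstName",
        "last_name": "LastName",
        "email_address": "Email",
    },
    "Account": {
        "company": "Name",
        "site": "Website",
    },
}


def transform(object_type, record):
    """Rename the fields of `record` using the mapping for `object_type`.

    Source fields without a mapping are dropped; mapped fields that are
    missing from the record are skipped.
    """
    mapping = FIELD_MAPPINGS[object_type]
    return {dest: record[src] for src, dest in mapping.items() if src in record}


if __name__ == "__main__":
    source_record = {"first_name": "Ada", "last_name": "Lovelace", "legacy_flag": "Y"}
    print(transform("Contact", source_record))
```

Keeping the mappings as data rather than code makes it easier to reuse the same migration for another object type later.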

Insert vs update:

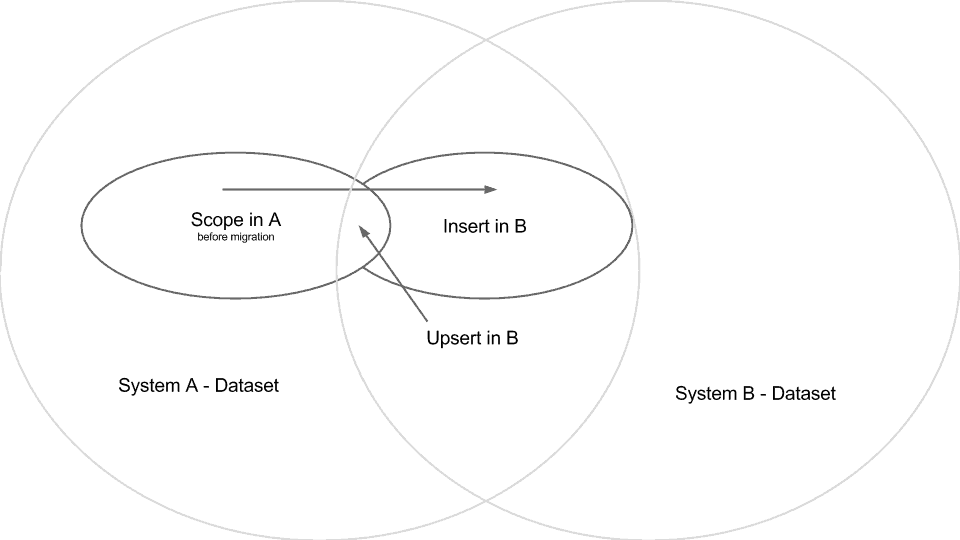

When writing a migration app or customizing one of our templates, especially one that will be run multiple times against the same data set and/or the same destination system, you should expect to find cases where you need to create new records as well as modify existing ones. All of our migration templates come with logic that checks whether a record already exists; if so, it performs an update operation, otherwise a create operation. A unique field, or a set of fields, that is immutable or rarely changes is the best way to ensure that you are dealing with the same object in both the source and destination systems. For example, an email address is an acceptable unique field for people in many cases, because it is unlikely to change and represents only one person at any given time. However, when working with an HR system, or with customers, you will probably want to check multiple fields such as SSN, birthdate, customer ID, and last name, things that will not change. Picking the right fields intelligently is particularly important when multiple migrations will populate a new system with data from a variety of systems, especially if the new system is going to serve as the system of record for the records stored in it. Duplicate or incomplete entries can be extremely costly, so testing whether a record already exists and modifying it rather than creating a duplicate can make a huge difference. Having the update logic in the migration also allows you to run the migration multiple times to fix errors that arose in previous runs. Salesforce provides a nice upsert function that reduces the need for separate logic to deal with insert vs update, but many systems do not have such a function, which means that you will need to build that logic into the integration application.
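For a destination without a built-in upsert, the check-then-create-or-update logic can be sketched in Python as follows. This is an illustration under simple assumptions, not the logic shipped in our templates: the destination is modeled as a plain dict, and the `upsert` function and the key fields used are hypothetical.

```python
def upsert(destination, record, key_fields):
    """Create `record` in `destination`, or update it if it already exists.

    `destination` is a dict keyed by the tuple of values of `key_fields`,
    standing in for a lookup against the real destination system.
    """
    key = tuple(record[field] for field in key_fields)
    if key in destination:
        # Same object already migrated: overwrite its fields with the new values.
        destination[key].update(record)
        return "updated"
    destination[key] = dict(record)
    return "created"


if __name__ == "__main__":
    destination = {}
    key_fields = ("customer_id", "last_name")
    print(upsert(destination, {"customer_id": 7, "last_name": "Lee", "city": "Oslo"}, key_fields))
    # Running the same migration again corrects the record instead of duplicating it.
    print(upsert(destination, {"customer_id": 7, "last_name": "Lee", "city": "Bergen"}, key_fields))
    print(len(destination), "record(s) in destination")
```

Because the second call updates rather than inserts, the migration can be rerun safely after fixing errors from an earlier run.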

To summarize, migration is the one-time act of taking a specific data set from one system and moving it to another. Creating an application on the Anypoint Platform for the purpose of migrations is valuable because you can make it a reusable service, have it take input parameters that affect how the data is processed, scope the data that will be migrated, build in processing logic before the data is inserted into the destination system, and create or update records as needed, so that you can more easily merge data from multiple migration sources or rerun the same migration after you fix errors that arise. To get started building data migration applications, you can start with one of our recently published Salesforce to Salesforce migration templates. These templates will take you a fair way even if you are looking to build an X to Salesforce or a Salesforce to X migration application.

In the next post, I will walk you through similar types of considerations for the broadcast pattern – stay tuned!