You have probably heard that we have been moving to a faster release cadence with the new mountain range releases, announced in this post and this one. For many Product Managers or Business Owners, releasing faster can be the difference between success and failure. Shortening the cycle between an idea and valuable user feedback lets new companies understand market needs better and improve based on them. Releasing valuable software earlier is the sweet spot for Agile and Lean methodologies.

Releasing faster is not just a matter of pushing code to production. I will cover how we got to the point of releasing Mule, the leading ESB, faster: the challenges we faced and the areas we have focused on over the last 2 years. Yes, you heard it right, 2 years! Releasing faster is just one consequence of the work we have been doing on the ESB for a long time, not a random request from Product Management. We execute millions of automated tests to guarantee a release of Mule in 2 months! Keep reading to find out how this happened.

Drivers for change

Two years ago we had about 5000 automated tests executed by our CI environment. It was not a bad starting point, but there was a lot of waste in our builds and development process. We had 5 different builds for different integration environments, with multiple DBMSs, JMS providers, email servers, FTP servers and so on. One build was triggered by a commit, and all the others were chained one after the other. In most cases we had to wait over a day for the builds to finish with our changes. It was hard to tell whether one of those builds was actually building our change or still building a previous commit. If something broke in the middle, a day after we committed, we had to start researching which commit actually broke things, which kept increasing the effort and time needed to make a change in the ESB. We were not comfortable with developers lacking quick feedback on whether their changes were OK, or with changes taking so long to go through our process. Feeling that pain over and over again was the trigger for us to change the way we build and develop Mule.

The commit stage

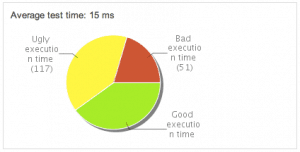

We created a pipeline for our builds. Its first step is the commit stage, whose goal is to run all unit tests and build all the binaries in less than 10 minutes. This is our quick feedback stage for knowing whether changes are good. We marked which of our JUnit tests were proper unit tests and belonged in this build, using the @SmallTest annotation to separate them. Our commit stage was still taking too long, because Surefire scanned the whole project before executing any test. We created our own extension of the Surefire plugin to execute tests as they are found. That reduced the build time considerably, to about 7 minutes for the 1100+ unit tests we have today. We also needed a way to validate that only fast tests are in this build, so we created a Sonar plugin that displays the time each of these unit tests takes and plots them in a graph. We named it “The good, the bad and the ugly”. Improving the tools we use has been key to meeting our goals.
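
To make the idea of marking unit tests concrete, here is a minimal sketch of how a marker annotation can separate fast tests from the rest. It is not our actual build code: the `SmallTest` annotation is redeclared locally as a stand-in, and `CommitStageSelector`, `PropertyParserTest` and `JmsBrokerIntegrationTest` are hypothetical names invented for the example. It uses plain reflection only, so it runs without JUnit or Surefire.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical selector: decides which test classes belong in the commit stage.
class CommitStageSelector {

    // Keeps only the classes that carry the marker annotation.
    static List<Class<?>> selectSmallTests(List<Class<?>> candidates) {
        List<Class<?>> selected = new ArrayList<>();
        for (Class<?> candidate : candidates) {
            if (candidate.isAnnotationPresent(SmallTest.class)) {
                selected.add(candidate);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Class<?>> all =
                Arrays.asList(PropertyParserTest.class, JmsBrokerIntegrationTest.class);
        for (Class<?> testClass : selectSmallTests(all)) {
            System.out.println(testClass.getSimpleName());
        }
    }
}

// Local stand-in for the @SmallTest marker mentioned above (an assumption of this sketch).
@Retention(RetentionPolicy.RUNTIME) // must be retained so reflection can see it
@Target(ElementType.TYPE)
@interface SmallTest {}

// Made-up test classes: one marked as a fast unit test, one left unmarked.
@SmallTest
class PropertyParserTest {}

class JmsBrokerIntegrationTest {}
```

Running `main` prints only `PropertyParserTest`, because the unmarked class is left for a later stage. In a real build the same selection would normally be done by the test runner or build plugin rather than by hand.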

The acceptance stage

There were 4 builds, each taking 2 or 3 hours, and all were triggered by single commits. We found ourselves stopping many of these builds and running them manually to be sure they were building our latest changes. If there was a problem, it was a bummer to find which of all the changes had actually caused it.

We started by reviewing all the acceptance tests and making sure they were not doing unnecessary work. We found over a thousand tests initialising many things and taking longer than needed. We created a new hierarchy of base tests for different scenarios, which removed a lot of waste from those tests. After tuning all our tests we reduced the time to 1 hour for each build. We now run them concurrently, and in total they take about 75 minutes to execute 8000 automated tests. The acceptance stage runs against the latest successful commit stage, which also reduces the total time we wait for our changes to be exercised. By the end of the acceptance stage we have already executed over 9000 automated tests for the ESB.
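
The base test hierarchy itself is not shown in this post, so the following is only a sketch of the general idea, under the assumption that the waste came from tests inheriting setup they did not need. All names (`BaseTestHierarchySketch`, `PlainTestBase`, `ContextTestBase` and the two test classes) are hypothetical, and the costly setup is simulated with a counter. Each test extends the lightest base class that covers its scenario.

```java
// Hypothetical sketch: a layered base-test hierarchy where only the tests
// that need the costly setup pay for it.
class BaseTestHierarchySketch {

    // Counts how often the simulated costly setup runs.
    static int contextStarts = 0;

    // Lightest base class: no shared setup at all.
    static abstract class PlainTestBase {
        void setUp() {
            // intentionally empty
        }
    }

    // Heavier base class: adds a simulated runtime context on top.
    static abstract class ContextTestBase extends PlainTestBase {
        @Override
        void setUp() {
            super.setUp();
            contextStarts++; // stands in for starting an expensive runtime context
        }
    }

    // Needs no context, so it extends the light base.
    static final class ExpressionParsingTest extends PlainTestBase {}

    // Needs the context, so it extends the heavier base.
    static final class FlowExecutionTest extends ContextTestBase {}

    public static void main(String[] args) {
        PlainTestBase[] tests = { new ExpressionParsingTest(), new FlowExecutionTest() };
        for (PlainTestBase test : tests) {
            test.setUp();
        }
        System.out.println("context started " + contextStarts
                + " time(s) for " + tests.length + " tests");
    }
}
```

With a single heavy base class, both tests would have started the context. Here only one of the two does, which is the kind of saving that adds up across a thousand tests.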

Nightly builds

We also have a few more nightly builds that execute the 10,000+ automated tests on different JVMs and platforms. On a nightly cadence we update our Sonar metrics and run longer builds, such as the HA and QA automated test suites with larger sample applications. At this point, we can be sure that everything works as expected when we make changes.

The results

During this process, we doubled the number of automated tests we execute on the ESB for each commit. We are true believers that automating our tests is the only way to improve.

The work on automation and on reducing waste in our development process led to many benefits; releasing faster is just one of them. Bugs escalated from support to engineering went from an average of 1 bug every 6 support cases in 2011 (about 17%) to 1 bug every 20 support cases in 2013 (5%). We reduced the cost of maintaining each version of the ESB, reduced the time to create a patch for our customers, improved the code base considerably, raised the quality perceived by our customers and reduced time to market for new features. Only by adding quality in less time at each step can you release faster. The longer you wait, the longer and harder it will be to get back on track.