There is a lot of talk about the lean startup and whether it works or not. Some proclaim it is critical to the success of any startup and that it is even the DNA of any modern startup. Others claim that it’s unproven and unscientific, and that it gets your product to market in a haphazard way with little regard for quality.

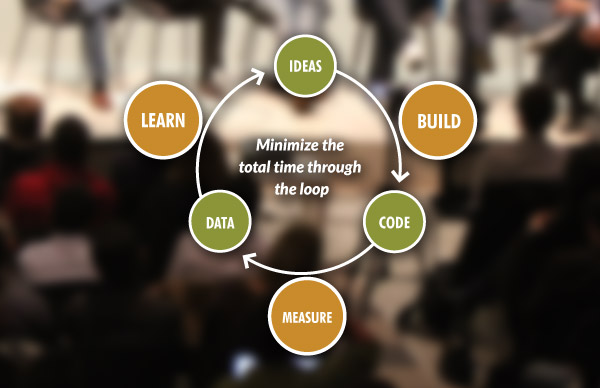

But the lean startup model, when you boil it down, simply says that when you launch any new business or product you do so based on validated learning, experimentation and frequent releases which allow you to measure and gain valuable customer feedback. In other words, build fast, release often, measure, learn and repeat.

Real World Example: WebVan vs Zappos

Sometimes the best way to look at the lean startup approach is through examples.

WebVan went bankrupt in 2001 after burning through $1 billion on warehouses, inventory systems and fleets of delivery trucks. Why? They didn’t validate their business model before investing so much in it, and they underestimated the “last mile” problem.

Contrast this with Zappos. Zappos could have gone off and built distribution centers and inventory systems for shipping shoes. But instead Zappos founder Nick Swinmurn first wanted to test the hypothesis that customers were ready and willing to buy shoes online. So instead of building a website and a large database of footwear, Swinmurn approached local shoe stores, took pictures of their inventory, posted the pictures online, bought the shoes from the stores at full price, and sold them directly to customers when purchased through his website. Swinmurn deduced that customer demand was present, and Zappos would eventually grow into a billion-dollar business based on the model of selling shoes online.

Guess who took the lean startup approach?

Lean Startup Principles

The Lean Startup methodology is based on a few simple key principles and techniques:

- Create a Minimum Viable Product (MVP) which is feature-rich enough to allow you to market test it. This doesn’t mean that the product is inadequate or of poor quality. It means that you launch with just enough features to collect the maximum amount of data.

- Use a continuous delivery model to release new features quickly, in short cycles and with as little friction as possible.

- A/B test different versions of the same feature on different user segments to gain feedback on which is more valuable or easier to use.

- Act on metrics. If you can’t measure something, you can’t act on it, so measure what users actually do to ensure that you are always improving the product.
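To make the A/B testing and metrics principles a little more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not a description of any particular company's tooling: the function names (`assign_variant`, `conversion_rate`, `best_variant`) and the experiment name are hypothetical. It assigns each user to a variant deterministically, so the same user always sees the same version, and then compares variants by conversion rate.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically bucket a user into one variant of an experiment.

    Hashing the experiment name together with the user id means the same
    user always lands in the same bucket for a given experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who converted; 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0


def best_variant(results: dict) -> str:
    """Return the variant with the highest conversion rate.

    `results` maps a variant name to a (conversions, visitors) pair.
    """
    return max(results, key=lambda name: conversion_rate(*results[name]))


if __name__ == "__main__":
    # Hypothetical experiment name and made-up numbers, for illustration only.
    print(assign_variant("user-42", "new-checkout-flow"))
    print(best_variant({"A": (10, 100), "B": (15, 100)}))
```

Picking the highest raw rate is the simplest possible decision rule. A real experiment would also check that the difference is statistically significant before acting on it.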

Lean Startup Engineering

Lean Startup engineering seems to work for consumer products. Facebook does it – they push new code to their platform at least twice a day and this includes changes to their API, which has over 40,000 developers using it for building apps. But what if I’m building an enterprise product or platform? Can I move at the same fast pace? Absolutely.

There are lots of product companies out there who have applied the lean startup model successfully including Dropbox, IMVU and Etsy. I’ve also been involved in many startups and I’ve seen the lean startup model work. I think the engineering philosophy behind it makes total sense – move fast, build quickly, automate testing, validate your decisions through data, leverage open source when you can, build MVPs and get as close to continuous deployment as you can. Not only does it make sense, it’s also a fun and enjoyable way for engineers and product teams to work together.

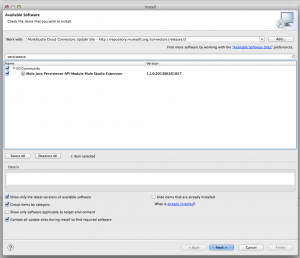

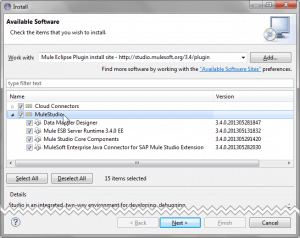

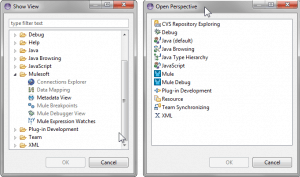

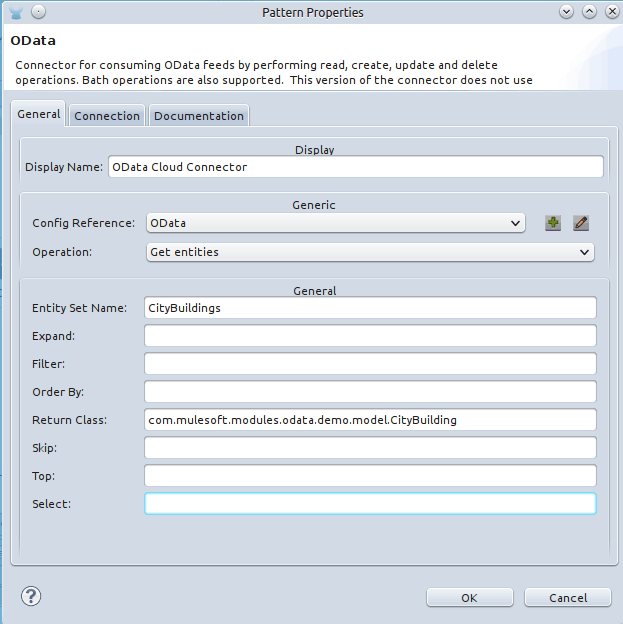

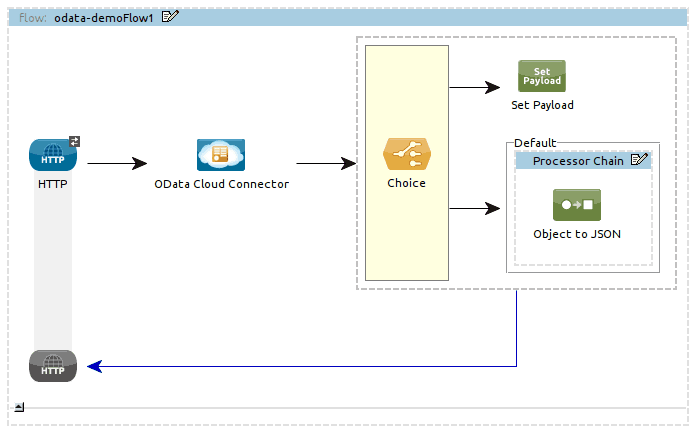

How We Apply It At MuleSoft

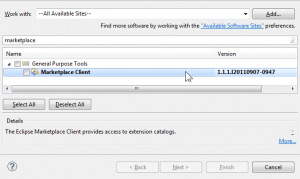

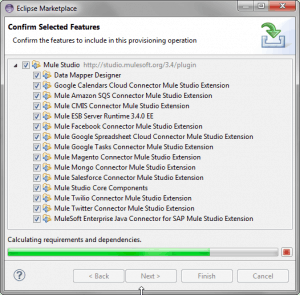

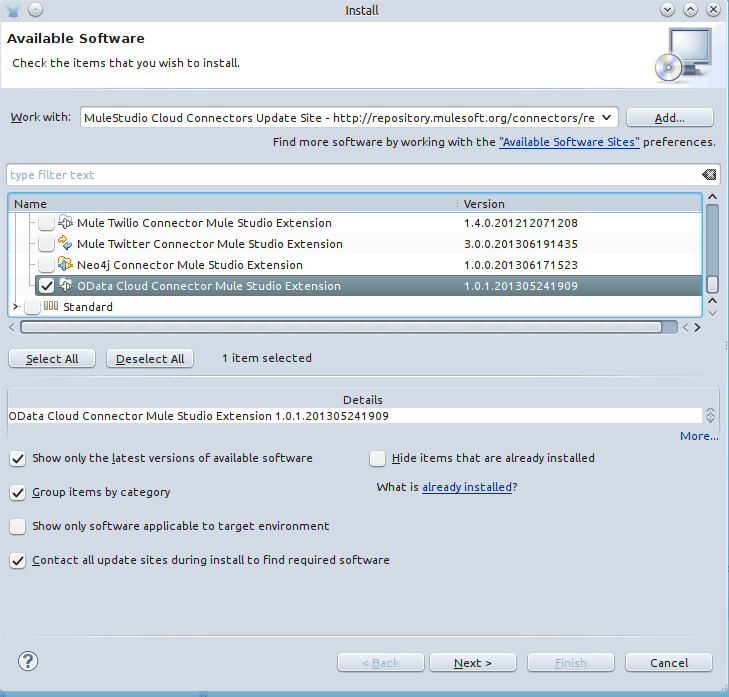

MuleSoft is no longer a startup, but as a high-growth company, we’re releasing new software and features at a very fast pace. This stems from our open source foundation of releasing early and validating with our community. Today we have kept that culture: all our teams use agile development, iterate quickly and make builds available every night for other teams to try out and provide feedback on. We beta test new features with early adopters and our community to gain valuable feedback before getting too far into development. When we launch a new product we define an MVP that focuses on a well-defined set of customer needs, and we expand its capabilities based on value to users without bloating the product with unnecessary features. We continually release products internally, and we release new versions to our customers every 1-2 months, which is pretty much unheard of in the enterprise software space. Having a Cloud Platform also means we can push silent updates at a much faster pace. To do all of this you need a solid automated testing process and system health monitoring in place, so that you can roll back changes if any issues are identified.
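The idea of pairing health monitoring with rollback can be sketched in a few lines of Python. This is a generic illustration, not MuleSoft's actual release tooling: `release_with_rollback`, the `deploy` callable and the health checks are all hypothetical stand-ins for whatever deployment and monitoring systems a team really uses.

```python
from typing import Callable, Sequence


def release_with_rollback(
    deploy: Callable[[str], None],
    health_checks: Sequence[Callable[[], bool]],
    new_version: str,
    previous_version: str,
) -> str:
    """Deploy new_version and roll back if any health check fails.

    Returns the version that is live when the function finishes.
    """
    deploy(new_version)
    # Run every check after the release; a single failure triggers rollback.
    if all(check() for check in health_checks):
        return new_version
    deploy(previous_version)
    return previous_version


if __name__ == "__main__":
    deployed = []  # records each deployment, standing in for a real system
    live = release_with_rollback(
        deploy=deployed.append,
        health_checks=[lambda: True, lambda: False],  # one failing check
        new_version="2.0",
        previous_version="1.9",
    )
    print(live, deployed)
```

The important design choice is that rollback is automatic and driven by measurements, which is what makes frequent releases safe enough to do routinely.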

We think the approach we take is a win-win for us and our customers. Happy iterating…