Back in the old days, when I used to write SaaS integration apps for a living (a long time ago, like two months back…), I always found it somewhat difficult to handle large datasets with the Anypoint Cloud Connectors. Don’t get me wrong, I love those connectors! They solve a lot of issues for me, from actually dealing with the API to handling security and reconnection. However, there are use cases in which you want to retrieve large amounts of data from a Cloud Connector (let’s say, retrieve my 600K Salesforce contacts and put them in a CSV file). You just can’t pass that amount of information in one single API call, not to mention that you most likely won’t even be able to hold all of those contacts in memory. All of this puts you in a situation in which you need to get the information in pages.

So, is this doable with Anypoint Connectors? Totally. But your experience around that would be a little clouded because:

- Each connector handles paging its own way (the API’s way)

- It requires the user to know and understand the underlying API’s paging mechanism

- Because Mule semantics follow the Enterprise Integration Patterns, there’s no easy way to write a block like “do this while more pages are available”
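To make that last point concrete, here is roughly what a hand-written "while more pages are available" loop looks like against a limit/offset style API. This is a plain Python sketch, not Mule or connector code; `fetch_page`, `offset`, `limit` and the in-memory `CONTACTS` list are hypothetical stand-ins for whatever the underlying API really exposes.

```python
# Hypothetical in-memory "API" standing in for a remote service.
CONTACTS = [f"contact-{i}" for i in range(2500)]


def fetch_page(offset, limit):
    """Return one page of records; an empty list means there are no more pages."""
    return CONTACTS[offset:offset + limit]


def fetch_all_manually(limit=1000):
    """The 'do this while more pages are available' loop, written by hand."""
    processed = 0
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        if not page:
            break  # no more pages
        processed += len(page)  # a real app would process each record here
        offset += limit
    return processed
```

Every caller has to know that this particular API pages by offset and signals the end with an empty page, and that knowledge changes from one API to the next.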

Solving the problem

So we sat down to discuss this problem and came to the conclusion that we needed to achieve:

- Usage Consistency: I don’t care how the API does pagination. I always want to paginate the same way, no matter the connector

- Seamless Integration: I want to use DataMapper, ForEach, Aggregator, Collection Splitter, etc. regardless of the connector’s pagination

- Automation: I want the pagination to happen behind the scenes… Streaming FTW!

- size(): I want to be able to know the total number of results even if not all pages have been retrieved yet

- When do we want it?: In the October 2013 release!

Two Stories, One Story

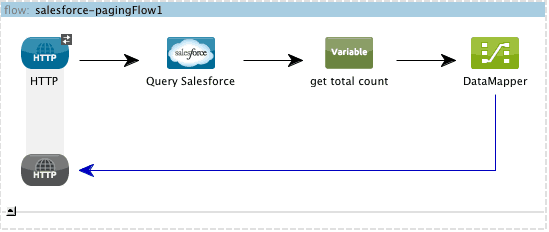

Consider an application that takes your Salesforce Contacts and transforms them to CSV. As we said earlier, this is easy if the number of contacts fits into memory and within the limits of the underlying API (Salesforce doesn’t return more than 1000 objects per query operation). However, since the Salesforce Connector now supports auto-paging, the flow looks as simple as this no matter how large the dataset:

Wait a minute! I don’t see the paging complexity in that flow! EXACTLY! The connector handled it for you! Let’s take a closer look at the query operation:

As you can see, there’s a new section called Paging with a Fetch Size parameter. This means that the operation supports auto paging and that it will bring your information in pages of 1000 items. So, you make the query and use DataMapper to transform the result into a CSV. It doesn’t matter if you have 10 million Salesforce Contacts; the auto-paged connector will pass them one by one to DataMapper so that it can transform them. Because the whole process uses streaming behind the scenes, you don’t have to worry about what Salesforce’s pagination API looks like, nor about running out of memory.
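If you’re curious what "one by one, with streaming behind the scenes" might look like, here is a minimal Python sketch of the idea. It is an illustration only, not how the connector or DataMapper are actually implemented: `fetch_page`, `auto_paged` and `write_csv` are hypothetical names, and the "remote" data is generated in memory.

```python
import csv
import io


def fetch_page(offset, limit, total=2500):
    """Hypothetical remote call: up to `limit` contacts starting at `offset`."""
    end = min(offset + limit, total)
    return [{"id": i, "name": f"Contact {i}"} for i in range(offset, end)]


def auto_paged(fetch_size=1000):
    """Yield records one at a time, fetching the next page only when needed."""
    offset = 0
    while True:
        page = fetch_page(offset, fetch_size)
        if not page:
            return
        yield from page
        offset += len(page)


def write_csv(records, out):
    """Consume any iterable of records; this code never sees a page boundary."""
    writer = csv.writer(out)
    writer.writerow(["id", "name"])
    count = 0
    for record in records:
        writer.writerow([record["id"], record["name"]])
        count += 1
    return count


# StringIO stands in for a real output file in this sketch.
buffer = io.StringIO()
rows_written = write_csv(auto_paged(fetch_size=1000), buffer)
```

The generator only ever holds one page of records, so the consumer can work through a dataset much larger than memory as long as the output is written out as it goes.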

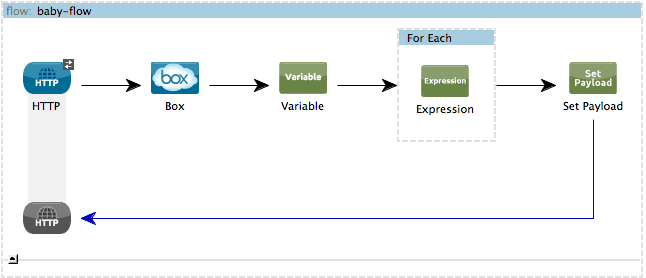

Now let’s take a look at this other app. This one uses the Box connector to get into a Box folder that has AN ENORMOUS amount of pictures. Suppose that it has every pic I ever took of my daughter (I had to buy an external hard drive just for that). I want to go through all those files and build a list of them, but this time instead of DataMapper we’ll use ForEach and an expression component, just because I feel like it. This time the app looks like this:

If you compare this flow to the one before you’ll notice that they’re as different as they can be:

- One uses DataMapper, the other one uses a ForEach

- One uses OAuth, the other one uses connection management

- Salesforce does paging using server side cursors

- Box does paging with a limit/offset

However, the paging experience is exactly the same:

So in summary, although Box’s and Salesforce’s paging mechanisms are as different as they get, you can just use the connectors and write your flows without actually caring about the size of the data sets and the mechanics involved, while always maintaining the same development experience.
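As a rough illustration of how two such different mechanisms can end up looking identical to the consumer, here is a small Python sketch. It is not the connectors’ actual code: `CursorServer`, `query`, `query_more`, `offset_pages` and `records` are hypothetical names, and both "services" are simulated over the same in-memory list.

```python
DATA = list(range(25))


def offset_pages(page_size):
    """Limit/offset style: the client keeps track of the position."""
    offset = 0
    while offset < len(DATA):
        yield DATA[offset:offset + page_size]
        offset += page_size


class CursorServer:
    """Server-side cursor style: the server remembers the position per cursor id."""

    def __init__(self, page_size):
        self._page_size = page_size
        self._positions = {}
        self._next_id = 0

    def query(self):
        """Open a new cursor and return its opaque id."""
        cursor = self._next_id
        self._next_id += 1
        self._positions[cursor] = 0
        return cursor

    def query_more(self, cursor):
        """Return the next page for this cursor; empty when exhausted."""
        start = self._positions[cursor]
        page = DATA[start:start + self._page_size]
        self._positions[cursor] = start + len(page)
        return page


def cursor_pages(server):
    cursor = server.query()
    while True:
        page = server.query_more(cursor)
        if not page:
            return
        yield page


def records(pages):
    """The uniform view: flatten any source of pages into a stream of records."""
    for page in pages:
        yield from page


from_offsets = list(records(offset_pages(page_size=10)))
from_cursor = list(records(cursor_pages(CursorServer(page_size=10))))
```

Whatever consumes `records(...)` has no idea which paging style produced the data, which is the whole point.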

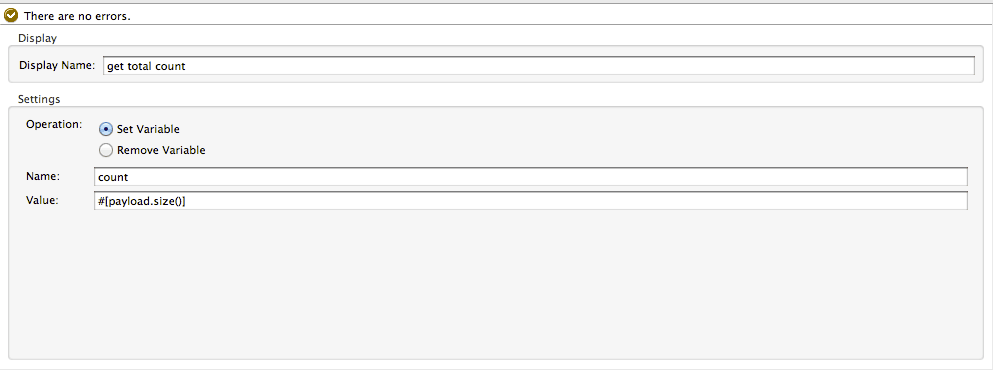

What’s that variable?

You probably noticed that in both examples there’s a variable processor right after the query processor. In case you’re wondering, that variable is completely useless. You don’t need to have it. Why is it in the example then? Just because I wanted to show you that you can get the total size of the dataset even before you’ve consumed all the pages:
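Here is a small Python sketch of how a size can be available before the pages are. It assumes the backend can report a total count cheaply, which is an assumption of this sketch and not a statement about any specific API; `PagedResult`, `pages_fetched` and the `FILES` list are hypothetical.

```python
class PagedResult:
    """Iterable over a paged dataset that knows its total size up front.

    Assumes the backend reports a total count without returning the records.
    """

    def __init__(self, fetch_page, total, page_size):
        self._fetch_page = fetch_page
        self._total = total
        self._page_size = page_size
        self.pages_fetched = 0  # exposed only so the sketch can show laziness

    def __len__(self):
        return self._total  # no page retrieval needed

    def __iter__(self):
        offset = 0
        while offset < self._total:
            page = self._fetch_page(offset, self._page_size)
            self.pages_fetched += 1
            yield from page
            offset += self._page_size


FILES = [f"pic-{i}.jpg" for i in range(3500)]
result = PagedResult(
    lambda off, lim: FILES[off:off + lim], total=len(FILES), page_size=1000
)

size_before = len(result)              # known before any page is fetched
fetched_before = result.pages_fetched
first = next(iter(result))             # pulls only the first page
fetched_after_first = result.pages_fetched
```

Asking for the size costs no page fetches, and reading the first record costs exactly one.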

Where can I use it?

Alongside the Mule October 2013 release, new versions of these connectors were released with auto-paging support:

- Box Connector

- Google Calendar Connector

- Google Contacts Connector

- Google Tasks Connector

- Marketo Connector

- NetSuite Connector

- QuickBooks Connector

- Salesforce.com Connector

- Zuora Connector

Remember you can install any of them from Mule Studio’s connectors update site.

Can you share use cases in which this feature would make your life easier? Please share with us so that we can keep improving. Any feedback is welcome!