Previously, OneMain Financial shared how it successfully implemented a C4E. In part two of this series, Chris Spinner, Software Development Manager, dives into how they built an omnichannel customer notification framework using MuleSoft.

I have been involved with our API-led design approach for the last two years and, as discussed in our previous blog, it is really more of a journey than the simple adoption of a toolset or platform. The most critical factor for success on this journey is for the entire technology team and your business partners to be on board and invested in the new approach. This is the only way to realize the full potential of an API-led approach.

In this blog, I’m going to walk you through OneMain Financial’s approach to the digital customer notification process, which is just one of the many use cases we’ve developed within Anypoint Platform. I will also cover the business needs that necessitated the development of an enterprise strategy on customer communications, and how we used Anypoint Platform to evolve our architecture over the past couple of years to meet specific demands.

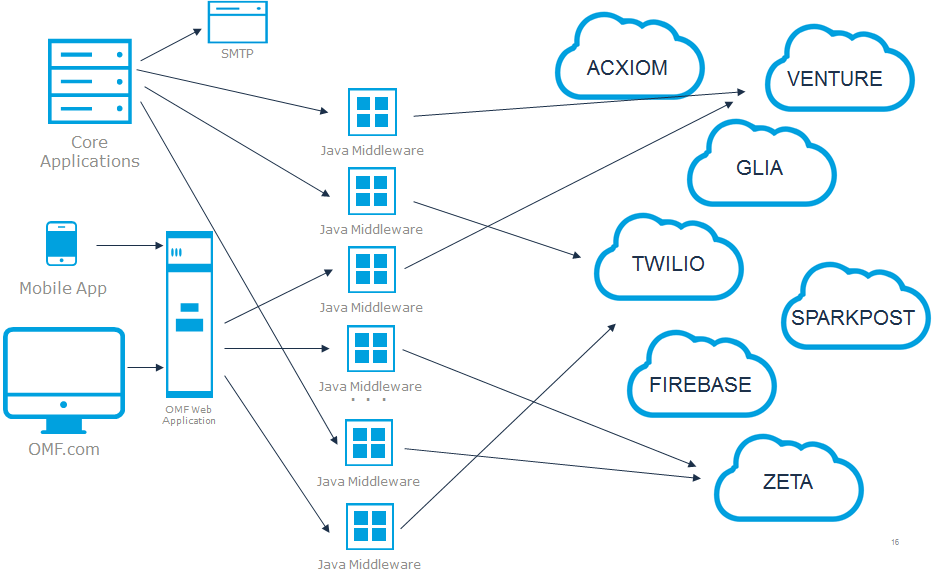

OneMain currently leverages multiple vendors across different types of electronic communication channels. Each of these vendors was onboarded to give a particular line of business the capability it needed to meet the demands of that time.

As recently as two years ago, our vendor integrations for customer notifications looked as depicted below. They were point-to-point in nature and required significant custom code.

Additionally, we developed applications for specific use cases and for specific vendors, so there wasn’t a clearly defined strategy for enterprise communications. This environment allowed for individual projects to define their own architecture and resulted in duplicate efforts. Ultimately, this caused inconsistencies in our ability to support these services, especially with an increase in volume of communications taking place between OneMain systems and our customer base. We needed to step back and evaluate our landscape from an enterprise perspective, particularly as this digital landscape expanded to include more vendors and channels.

This drove us to define our enterprise strategy, including how we can best design solutions that leverage reusability, mitigate risk, and save costs as we continue to evolve that strategy.

It was also critical for us to understand each use case and the many ways a customer could be engaged. For example, while these use cases focused on marketing for insurance, origination, and collections, we also had to consider operational initiatives that give the customer a single point of entry into communication over a variety of channels. Thus, our architecture needed to be flexible enough to reach our customers directly on any channel as needed.

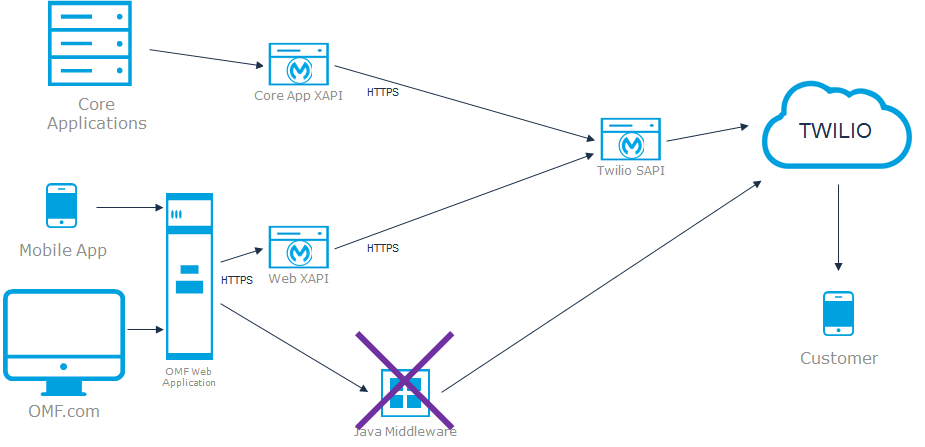

Our MuleSoft integration for notifications has itself been evolving over the past year. Our first real use case leveraged our Twilio integration for a new alert system for funding notifications. We had recently started offering direct deposit as an option for receiving funds upon booking a loan. When a customer successfully receives a direct deposit, the alert originates from an application residing in one of our core systems. That application places a message on a queue containing the destination phone number and the contents of the message.

This queue is monitored by a listener implemented within an experience API designed specifically for that application, and the experience API makes an HTTP request to a system API to send a message with Twilio. We developed a Twilio-specific system API that’s configured with all of OneMain’s account details. While it’s very specific to OneMain, it provides a generic RESTful interface for any higher-layer API that wishes to consume it.
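The listener-to-system-API hand-off described above can be illustrated with a minimal Python sketch. This is not the actual Mule flow: the JSON field names (`to`, `body`), the function name, and the in-memory queue are all assumptions for illustration, and `send_sms` is a stand-in for the HTTP request to the Twilio system API.

```python
import json
import queue
from typing import Callable


def drain_funding_alerts(
    alerts: "queue.Queue[str]",
    send_sms: Callable[[str, str], None],
) -> int:
    """Forward every queued alert to the SMS sender; return the count sent."""
    sent = 0
    while True:
        try:
            raw = alerts.get_nowait()
        except queue.Empty:
            return sent
        # Hypothetical message shape: the post only says the queue carries
        # a destination phone number and the message contents.
        payload = json.loads(raw)
        # send_sms stands in for the HTTP call to the Twilio system API.
        send_sms(payload["to"], payload["body"])
        sent += 1


# Usage with a fake sender in place of the real system API.
outbox = []
alerts = queue.Queue()
alerts.put(json.dumps({"to": "+15555550100", "body": "Your funds have been deposited."}))
count = drain_funding_alerts(alerts, lambda to, body: outbox.append((to, body)))
```

Because the listener depends only on a generic "send this text to this number" callable, the vendor-specific details stay behind the system API, which is the property that made the reuse described next possible.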

We then converted other alerts such as our one-time passcode notification to Mule from our legacy services. Even though the triggers of these transmissions were completely different business processes, we were still able to reuse that same system API to consolidate all that SMS traffic to Twilio.

This approach to integration made sense: it allowed for greater flexibility and speed, and we were achieving the reusability we had hoped for. As we stand up other use cases, we no longer need to spend time on the details of a vendor-specific connection. Naturally, development costs and time to market fell, right around the time a new digital customer communication initiative was starting.

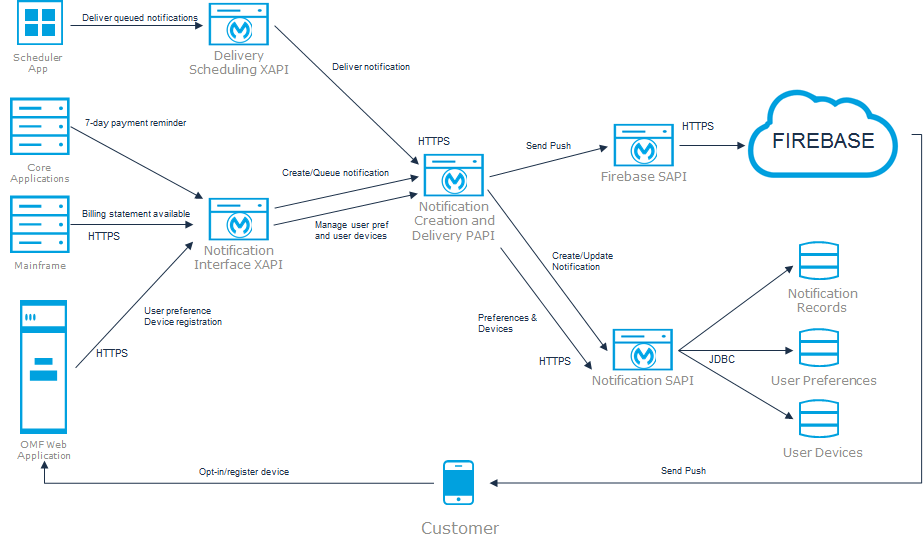

One major component of this new initiative was to begin sending mobile push notifications to our customers. Customers would be notified when their billing statement is available to view, and reminded when a payment is due in seven days. Our messaging solution allowed messages to be delivered at no cost to native mobile applications, regardless of which type of phone the customer had. Internally, this challenged us to maintain a database of customer preferences along with each customer’s Firebase tokens for however many devices they use. Thus, we had to create an API exposed to the front-end web application that allows a user to maintain their opt-in preferences and register devices to their account.

This means our web application relies on a set of service layers acting as a proxy between the enterprise service bus and OneMain’s customer-facing web application. The push notifications themselves are generated in two steps. This is because the trigger that creates the notification comes from the core application or mainframe, but the notification is orchestrated within our process layer API. First, the triggers are created and queued on demand by the consuming system, which specifies the identifier of the customer who should receive the push.

Then, the API verifies that the customer the core system wants to notify has actually opted in to receive notifications and has one or more device tokens available to send to. This check is performed within the process layer using data retrieved from a system layer API that is backed by a database. If the validation checks out, meaning the customer has opted in and has at least one device token, the notification is queued within the system.
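As a rough illustration of this validation step, the following Python sketch queues a notification only for customers who have opted in and have at least one device token. The `Preferences` record, the `queue_push` function, the `UNSENT` status value, and the sample data are hypothetical; the real schema and Mule implementation are not described in this post.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Preferences:
    # Hypothetical record shape standing in for the preferences database.
    opted_in: bool
    device_tokens: List[str] = field(default_factory=list)


def queue_push(customer_id: str, event: str,
               prefs: Dict[str, Preferences], pending: List[dict]) -> int:
    """Queue one unsent notification per registered device; return how many."""
    p = prefs.get(customer_id)
    # Skip customers who are unknown, opted out, or have no registered device.
    if p is None or not p.opted_in or not p.device_tokens:
        return 0
    for token in p.device_tokens:
        pending.append({"customer_id": customer_id, "event": event,
                        "token": token, "status": "UNSENT"})
    return len(p.device_tokens)


prefs = {
    "c1": Preferences(opted_in=True, device_tokens=["tok-a", "tok-b"]),
    "c2": Preferences(opted_in=False, device_tokens=["tok-c"]),
    "c3": Preferences(opted_in=True),  # opted in, but no devices registered
}
pending: List[dict] = []
queued = [queue_push(c, "statement_available", prefs, pending)
          for c in ("c1", "c2", "c3", "c4")]
```

Only `c1` passes both checks here, so two notifications (one per device) end up in `pending`.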

The delivery step is separate, so we utilize an external scheduler configured to kick off a process that delivers all of the unsent notifications for specific use cases. This gives the business flexibility to decide when these notifications go out and whether to engage customers for specific use cases. When the process API is triggered, it retrieves all of the unsent notifications of a certain type and provides them back to the client. The client can then request delivery of those notifications either one at a time or together in batches. We can potentially reuse this in other business use cases.

Additionally, the database record created when the notification was queued is then patched to reflect the outcome of the transaction with Firebase. The advantage of this is that failed transactions remain unsent and can be resent during the next scheduled interval. This is the point where we saw an opportunity to further exploit reusability at the process layer itself by developing a more flexible omnichannel notification framework where notification events themselves are independent of the channels on which they’re sent.
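The scheduled delivery and retry behavior can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the production process API: the record fields, the `UNSENT`/`SENT` status values, and the `flaky_send` stand-in for Firebase are all hypothetical.

```python
from typing import Callable, List


def deliver_unsent(notifications: List[dict], event: str,
                   send: Callable[[str, str], None]) -> int:
    """Attempt delivery of unsent notifications of one event type."""
    delivered = 0
    for n in notifications:
        if n["event"] != event or n["status"] != "UNSENT":
            continue
        try:
            send(n["token"], n["body"])
        except Exception:
            # Failed sends stay UNSENT so the next scheduled run retries them.
            continue
        # Patch the record to reflect the successful outcome.
        n["status"] = "SENT"
        delivered += 1
    return delivered


# Fake sender that fails once for one device, standing in for Firebase.
failures = {"tok-b": 1}


def flaky_send(token: str, body: str) -> None:
    if failures.get(token, 0) > 0:
        failures[token] -= 1
        raise RuntimeError("transient delivery failure")


store = [
    {"event": "payment_due", "token": "tok-a",
     "body": "Payment due in 7 days", "status": "UNSENT"},
    {"event": "payment_due", "token": "tok-b",
     "body": "Payment due in 7 days", "status": "UNSENT"},
]
first_run = deliver_unsent(store, "payment_due", flaky_send)
second_run = deliver_unsent(store, "payment_due", flaky_send)
```

The first run delivers to one device and leaves the failed record untouched; the second run, representing the next scheduled interval, picks up only that remaining record.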

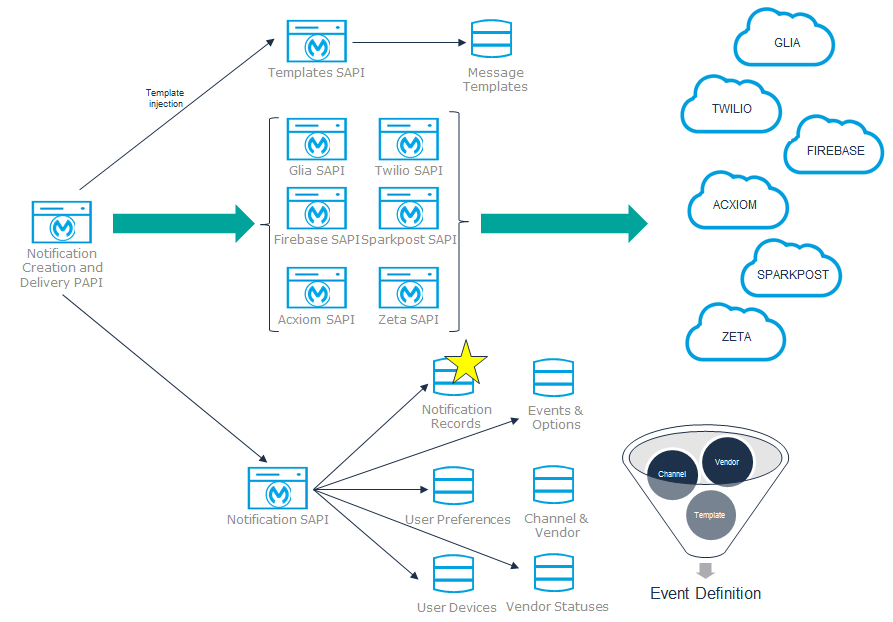

The stack of APIs implementing this process is built on top of a SQL database, which is used for three different purposes. First, there are tables used for configuration, where our notification events are defined. For example, these could include our seven-day payment reminder, a credit score change alert, or delivery of the one-time passcode. Each defined event is then associated with one or more configurations that determine the channel, such as SMS or email. This gives the consumer of the process API the flexibility to send an event on one channel or on several.
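To make the event-to-channel configuration concrete, here is a small Python sketch in which a dictionary stands in for the configuration tables. The event keys, the specific channel assignments, and the `resolve_channels` function are assumptions chosen for illustration; the post does not say which events map to which channels.

```python
from typing import Iterable, List, Optional

# Hypothetical configuration: in the real framework this lives in SQL tables.
EVENT_CHANNELS = {
    "payment_reminder_7_day": {"push", "email"},
    "credit_score_change": {"email"},
    "one_time_passcode": {"sms"},
}


def resolve_channels(event: str,
                     requested: Optional[Iterable[str]] = None) -> List[str]:
    """Return the channels to use for an event, optionally narrowed by the caller."""
    if event not in EVENT_CHANNELS:
        raise KeyError(f"undefined notification event: {event}")
    configured = EVENT_CHANNELS[event]
    if requested is None:
        # No preference given: send on every configured channel.
        return sorted(configured)
    # Only channels that are both configured and requested are used.
    return sorted(configured & set(requested))


all_channels = resolve_channels("payment_reminder_7_day")
push_only = resolve_channels("payment_reminder_7_day", ["push", "sms"])
```

With this shape, adding a channel to an existing event is a data change rather than a code change.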

Moreover, customers have preference categories for their OneMain perks, such as financial tools, latest offers, and account offers. This framework allows us to define new events and then associate them with the different preference categories that appear within the mobile app, which customers can turn on or off. We also now have a flexible message templating system that allows us to customize and parameterize the copy end users receive. Rather than requiring the client system to construct and send the full contents through the Mule API every time, the consumer only needs to send the pieces needed, which are inserted into predefined templates associated with the specific event.
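The templating idea can be sketched with Python's standard `string.Template`. The template text, the event key, and the parameter names below are hypothetical; the framework's actual template syntax is not described in this post.

```python
from string import Template

# Hypothetical template store keyed by event; the real one is database-backed.
TEMPLATES = {
    "payment_reminder_7_day": Template(
        "Hi $first_name, your payment of $amount is due on $due_date."
    ),
}


def render(event: str, params: dict) -> str:
    """Fill the event's predefined template with client-supplied values."""
    # substitute() raises KeyError if the client omits a required field.
    return TEMPLATES[event].substitute(params)


message = render(
    "payment_reminder_7_day",
    {"first_name": "Sam", "amount": "$125.00", "due_date": "June 1"},
)
```

The client sends only the three values; the wording of the message is owned by the framework and can change without touching the client.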

Alternatively, we might have a client trigger a separate process that begins an orchestration, dynamically collecting the data needed to fill a template. Here, the process API would directly trigger the event with the required pieces of information, keeping the upstream client interface simple. Our SQL database also records a history of all outbound notifications, allowing us to query specific events. Using this information, we can make informed decisions, troubleshoot, and improve fault tolerance. This comes in handy particularly for semi-real-time or batch notifications, where we can wait out any transient client or downstream system issues and retry.

By reusing this process, we essentially created a configurable notifications framework where customer engagements can be initiated from a variety of clients and experiences. On top of this, we developed several experience APIs that listen for events and translate any data needed before invoking the process API, alongside other experience APIs consumed by our mobile and web applications. Our vendors, likewise, only need to provide the bare minimum of information to fire off an event to customers.

So, what are the benefits we’ve seen as a result? There are a few key ones:

- When consolidating these specific integrations onto Anypoint Platform, we designed them to be scalable.

- Vendor-specific treatment is delegated to system APIs, which encapsulate the logic and make it easier and quicker to react to changes without far-reaching impact.

- Almost all new use cases with existing vendors only require configuration changes within the database, with no code changes whatsoever.

- Log aggregation takes place in our ELK stack, so our production support team has been able to build out a robust suite of dashboards.

- More and more business processes use Anypoint Platform as the integration layer, helping us capture more of our customers’ journeys on these dashboards.

- We can better detect anomalies when troubleshooting, meaning we’re more likely to proactively resolve issues before they adversely impact our business processes.

- We are better able to tune performance and optimize these processes as needed.

Overall, implementing MuleSoft and building an omnichannel framework for customer notifications has helped us meet and exceed our business needs. Additionally, this has set us up for the future as we look to expand our omnichannel customer communication integration framework.

Thank you to Erin and her team for sharing their story with us! For more customer stories such as theirs, register for an upcoming MuleSoft Meetup.