This is the second blog in a series explaining how MuleSoft’s Anypoint Platform can provide agile connectivity across monolith, SOA, and microservices architectures.

In my last blog post, I discussed the impact agility has on business and IT, what it means for a business to be agile, and how implementing flexibility within your architecture enables business agility. This post will discuss the different architectures that have been prominent in previous years and how they’ve influenced the architecture of today.

Service-oriented architecture (SOA)

Over the past decades, multiple development and architecture models have been created that promised greater flexibility. It started with object-oriented design (OOD) principles, which promoted a new way of efficiently (re)using software assets and frameworks by combining data structures and operations/functions into well-defined objects. Derived composition patterns and higher-level component models gave way to service-based application development models that, while different from OOD in many respects, also promised to make application development easier and more efficient. Service-oriented architecture (SOA), one of the most prominent models in this context, became a milestone for reusable, service-focused architectures.

At that time, SOA was a new, advanced architecture approach that allowed composing independent services into complex service compounds and applications without introducing tight coupling between the different service providers. Compared to the predominant monolithic architecture approach, distributed architecture patterns could be implemented with SOA, while still offering central and/or cross-service capabilities. This allowed the implementation of single atomic transactions or stateful processes that interact with multiple applications and services asynchronously.

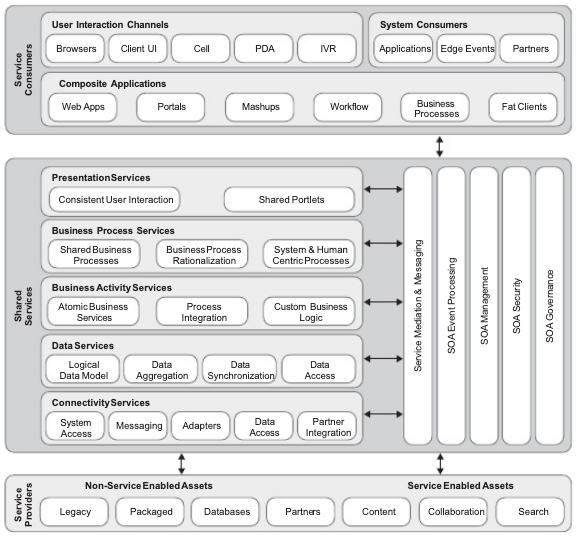

SOA introduced a logical service layering that categorized services according to their core functional capabilities. These services ranged from low-level “connectivity services,” which acted as a bridge between the service and non-service-enabled worlds, to business process and presentation services, which composed mid-level services, like business and data services, into complex applications. To do so, services had to be exposed via clearly defined interfaces, contracts, and usage agreements, much like we do today with APIs. Another architectural innovation of SOA was the introduction of utility services, which externalized certain standard functionality, like routing, security, and monitoring, and enabled the service designer to focus on business-related functionality.
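The split between connectivity, business, and utility services can be illustrated with a small in-process analogy. The sketch below is a Python approximation, not how an SOA stack was actually built: in SOA, security and monitoring lived in infrastructure such as the service bus, whereas here they are modeled as decorators. All names (`crm_connectivity_service`, `customer_business_service`, the shared-token check) are hypothetical.

```python
import functools
from typing import Callable, Dict, List

# Hypothetical stand-in for a non-service-enabled backend (e.g., a legacy CRM table).
LEGACY_CRM: Dict[str, str] = {"c-1": "Ada Lovelace"}
VALID_TOKENS = {"secret-token"}  # assumption: a simple shared-token check
MONITORING_LOG: List[str] = []   # filled by the monitoring utility service


def security_service(func: Callable) -> Callable:
    """Utility service: rejects calls without a valid token before business logic runs."""
    @functools.wraps(func)
    def wrapper(token: str, *args, **kwargs):
        if token not in VALID_TOKENS:
            raise PermissionError("invalid token")
        return func(*args, **kwargs)
    return wrapper


def monitoring_service(func: Callable) -> Callable:
    """Utility service: records every invocation, independent of business logic."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        MONITORING_LOG.append(func.__name__)
        return func(*args, **kwargs)
    return wrapper


def crm_connectivity_service(customer_id: str) -> str:
    """Connectivity service: bridges the legacy system into the service world."""
    return LEGACY_CRM[customer_id]


@monitoring_service
@security_service
def customer_business_service(customer_id: str) -> Dict[str, str]:
    """Business service: only business logic, composing a lower-level service."""
    return {"id": customer_id, "name": crm_connectivity_service(customer_id)}
```

Calling `customer_business_service("secret-token", "c-1")` returns the customer record and logs the call. The business service contains only its own logic, so changing the security or monitoring policy does not touch it, which is the separation utility services were meant to provide.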

So, in essence, SOA was a sophisticated architecture construct that introduced and implemented the architecture capabilities and principles we see in today’s modern architectures. So why did it fail?

The fall of SOA

The reasons for SOA’s lack of success are multifaceted. First and foremost, it was very complex and IT-centric. SOAP/WSDL, canonical data models, and complex transformations made building and maintaining new services at scale nearly an art. The multi-layer service model, which promised not only more flexibility but also more agility by providing fine-grained services that could be easily reused, brought new challenges of its own, as it introduced dependencies between associated services. Thanks to the loose coupling SOA proclaimed, which routed every request through a dedicated service bus, these dependencies were fortunately mostly semantic, but significant effort still went into governance and lifecycle management. Because creating new services took so much effort, productivity stayed low and life cycles were long, and the promised value was quickly eroded by the effort needed to put the required governance model in place.

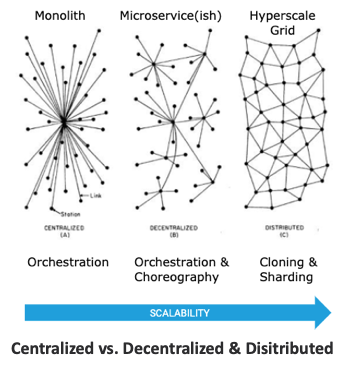

Another aspect was the service bus approach, which was relatively centralized, at least by today’s standards. While, at the time, few use cases had the potential to reach the scalability limits of such a construct, there are definitely use cases today that would.

The microservices evolution

Microservices are seen by many as the evolution of SOA. They share concepts like reusability and the desire to compose generic services into complex composites and applications.

According to Wikipedia, microservices are a “more concrete and modern interpretation of SOA used to build distributed software systems” and, “like SOA, use technology-agnostic protocols.” Moreover, the microservices approach is considered a “first realization of SOA that has happened after the introduction of DevOps and is becoming the standard for building continuously deployed systems.”

Martin Fowler describes the main characteristics of microservices as follows:

- Componentization, the ability to replace parts of a system, similar to stereo components, where each piece can be replaced independently of the others.

- Organization around business capabilities instead of technology.

- Smart endpoints and dumb pipes, explicitly avoiding the use of an enterprise service bus (ESB).

- Decentralized data management with one database for each service instead of one database for a whole company.

- Infrastructure automation with continuous delivery being mandatory.

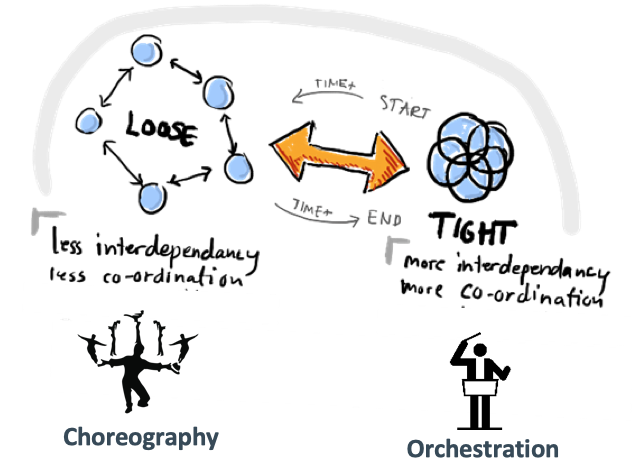

The most important and significant characteristic is the “smart endpoints and dumb pipes” approach, which implies a couple of other characteristics. Besides the desire to be fully self-contained and independently deployable, “orchestration” between services, as promoted in SOA, is seen as bad practice: it introduces dependencies and is considered a major weakness of SOA. “Choreography” based on the publish-subscribe pattern is the preferred way for services to communicate.
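To make the difference concrete, here is a minimal, in-memory sketch of choreography over a publish-subscribe channel, assuming hypothetical order, inventory, and billing services. The pipe only delivers events; each service (the “smart endpoint”) decides for itself how to react, and no orchestrator calls the services in sequence. A production system would use an asynchronous message broker rather than this synchronous Python class.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, str]
Handler = Callable[[Event], None]


class DumbPipe:
    """Minimal publish-subscribe channel: it only delivers events, with no business logic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


pipe = DumbPipe()
reserved: List[str] = []  # state owned by the hypothetical inventory service
invoices: List[str] = []  # state owned by the hypothetical billing service


def inventory_service(event: Event) -> None:
    # Smart endpoint: decides on its own how to react to a placed order.
    reserved.append(event["order_id"])


def billing_service(event: Event) -> None:
    invoices.append(event["order_id"])
    # Announces its own outcome; whoever cares can subscribe.
    pipe.publish("invoice_created", {"order_id": event["order_id"]})


pipe.subscribe("order_placed", inventory_service)
pipe.subscribe("order_placed", billing_service)


def place_order(order_id: str) -> None:
    # The order service only announces what happened; it does not coordinate the others.
    pipe.publish("order_placed", {"order_id": order_id})
```

Adding a new consumer, such as a shipping service, means subscribing another handler to `order_placed`; the order service itself does not change.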

There is one very important aspect that SOA and microservices have in common: the mandatory definition of an interface/service-consumer contract, represented by an API.
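In practice, such a contract is usually expressed as an API specification (for example, RAML or OpenAPI) rather than in code. As an in-process analogy only, the sketch below uses a Python `typing.Protocol` as a hypothetical `CustomerApi` contract: the consumer depends on the contract alone, so any provider that satisfies it, whether an SOA service or a microservice, can be swapped in.

```python
from dataclasses import dataclass
from typing import Dict, Protocol, runtime_checkable


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str


@runtime_checkable
class CustomerApi(Protocol):
    """Hypothetical consumer contract: what any provider must offer, regardless of architecture."""

    def get_customer(self, customer_id: str) -> Customer: ...


class InMemoryCustomerService:
    """One possible provider; any implementation with a matching method satisfies the contract."""

    def __init__(self) -> None:
        self._data: Dict[str, Customer] = {"c-1": Customer("c-1", "Ada Lovelace")}

    def get_customer(self, customer_id: str) -> Customer:
        return self._data[customer_id]


def greet(api: CustomerApi, customer_id: str) -> str:
    # The consumer knows only the contract, not the provider's implementation.
    return f"Hello, {api.get_customer(customer_id).name}"
```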

The need for a services mesh

The idea of being completely self-contained and autonomous looks simple and straightforward and seems to be the ultimate answer to avoiding dependencies and the resulting governance problems. Yet microservices practitioners learned that building services requires a lot of standard functionality, like connection, routing, and security-related logic, that is not business-related but is needed to build a service network.

In SOA, these capabilities were provided by a central service layer (e.g., a service bus), a construct that ran against the highly distributed approach of microservices, so something else needed to be designed: a service that provided the functionality of the former SOA utility services but implemented it in a distributed, scalable way.

The ability to scale, whether from a technical or an organizational point of view, has become one of the main drivers of the shift towards microservices architecture. SOA, although it promoted the concept of distributed applications, shows some clear limitations in this area, especially beyond a certain scale. This is typically the case for modern digital use cases like IoT, autonomous driving, or customer experience (CX), which drive a high number of interactions/transactions across channels, devices, and services distributed across on-premises installations as well as public and hybrid cloud deployments.

Providing external non-functional services to these kinds of platforms without affecting their ability to scale requires a new architecture construct that goes beyond classic vertical service instances like a service bus.

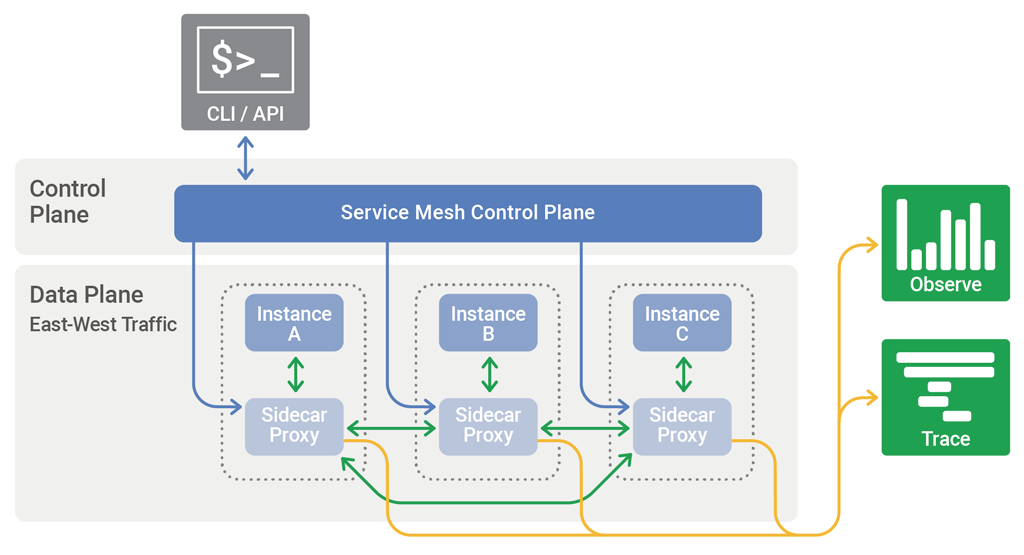

A typical service mesh architecture solves the problem by providing smaller, non-functional service units that are brought closer to the associated functional (micro)services instance.

Most of the “utility” components and functions, such as service discovery, authentication/authorization mechanisms, container orchestration, load balancing, and implementations of patterns like the circuit breaker, are typically provided as services on top of a container platform (e.g., Kubernetes). But other runtime-related services need special attention, as they require a closer relation to the service implementation itself and thus should be handled and configured separately. A sidecar model addresses this requirement by establishing a proxy that runs alongside a single service instance. It provides more sophisticated, invocation-context-adaptive routing, load balancing, security, monitoring, or error handling. The sidecar communicates with other sidecar proxies and is managed by a central orchestration framework. The following picture shows a typical example of such a setup:

Typical Service Mesh Architecture Setup (Source: nginx.com)
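The circuit breaker is a good example of error handling a sidecar can apply on behalf of its service. The sketch below is a simplified Python model, not the actual behavior or configuration of Envoy or Istio: a hypothetical `SidecarProxy` wraps calls to an upstream service, opens the circuit after a number of consecutive failures, fails fast while open, and lets a trial request through once `reset_timeout` has elapsed. Thresholds and names are illustrative assumptions; real sidecars intercept network traffic and receive such settings from the control plane.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when the sidecar refuses a call because the circuit is open."""


class SidecarProxy:
    """Hypothetical sidecar next to one service instance, applying a circuit breaker."""

    def __init__(self, upstream: Callable[[str], str], failure_threshold: int = 3,
                 reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._upstream = upstream
        self._failure_threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock  # injectable so the timeout can be tested deterministically
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, request: str) -> str:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                raise CircuitOpenError("upstream unavailable, failing fast")
            # Timeout elapsed: half-open, let one trial request through.
            self._opened_at = None
        try:
            response = self._upstream(request)
        except Exception:
            self._failures += 1
            if self._failures >= self._failure_threshold:
                # Open (or re-open after a failed trial) the circuit.
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit fully
        return response
```

For example, `SidecarProxy(lambda req: req.upper()).call("ping")` simply forwards the request and returns `"PING"`. Keeping this logic in the sidecar means the service code stays focused on business functionality, while the breaker's policy can be tuned per instance.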

Istio and Envoy are technologies that have become flag bearers in this space. As these solutions focus on network-level traffic management within the cluster, higher-layer services, like API management, are currently out of scope. This limits the possibilities of the sidecar concept and leaves room for other, complementary solutions.

In my next post, I will discuss best practices for when to use a service mesh enabled microservices architecture. For more information on picking the right architecture for your organization, download our whitepaper.