Brad Ringer, Principal Solution Engineer at AT&T, shared AT&T’s journey implementing MuleSoft Runtime Fabric, and the benefits the company has seen as a result, during MuleSoft CONNECT:Now Americas.

As a Principal Solution Engineer at AT&T, my role includes serving as Lead Integration Architect of the Business Sales Salesforce Platform. In this blog, I’ll cover our Business Sales Salesforce Platform, how we built our MVP integration solution, the options we evaluated before ending up with Runtime Fabric, and a few takeaways.

What is our Business Sales CRM Platform?

The Business Sales CRM Platform is a platform we built on Salesforce to support all of our business sales, especially B2B sales. We’ve moved off Siebel, a legacy monolith, and we currently use our CRM platform for opportunity and sales funnel tracking. With this new implementation, we’re focusing on best practices. Our legacy system had accumulated 10-15 years of projects and solutions, so one of our goals was to move away from it to an extensible, standards-based, best-practice solution with a maintainable architecture.

At the heart of our new Salesforce implementation is MuleSoft. MuleSoft is our integration layer for essentially everything going in or out of Salesforce, acting much like an ingress and egress layer. We use some other packages and related tools for integration, but all APIs, data integrations, and batch processes run through MuleSoft.

One of the big questions everyone has with SaaS platforms is: “How do we connect Salesforce and MuleSoft to our company’s internal services, whether microservices, API gateways, or legacy systems like Siebel or SAP, across the network and through the firewall?”

How do we cross the firewall securely?

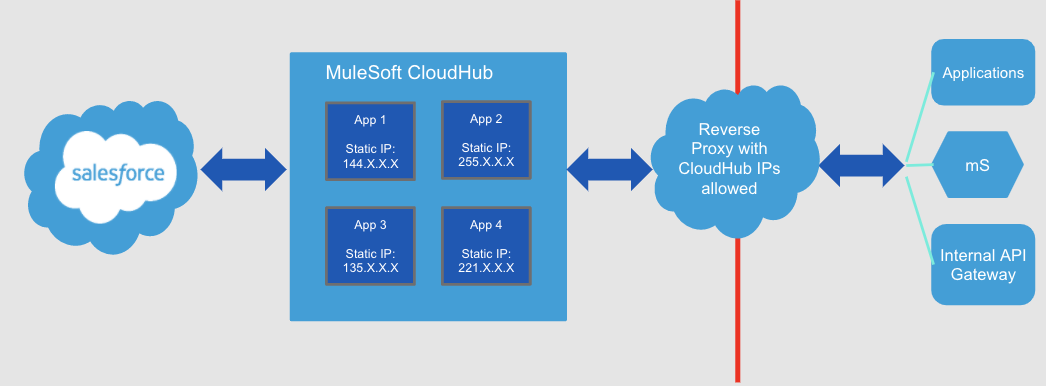

The first step was creating our minimum viable product (MVP). We had three months to go from ideation to implementation, so it was a “skunkworks deployment”: we needed to get something out the door, and CloudHub was the easiest way to do that. As part of this setup, we assigned static IPs to all of our applications and created a reverse proxy to cross the firewall, with those static IPs whitelisted to access our internal applications.
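To make the MVP pattern concrete, here is a minimal sketch of the check a reverse proxy performs when it admits only whitelisted static IPs. It uses Python’s standard `ipaddress` module; the addresses come from the RFC 5737 documentation range, and the names `STATIC_IP_WHITELIST` and `proxy_allows` are hypothetical, not part of CloudHub or any proxy product.

```python
import ipaddress

# Hypothetical static IPs assigned to CloudHub applications.
# 203.0.113.0/24 is an RFC 5737 documentation range, not real AT&T addresses.
STATIC_IP_WHITELIST = {
    ipaddress.ip_address("203.0.113.10"),  # e.g. an opportunity-sync app
    ipaddress.ip_address("203.0.113.11"),  # e.g. a lead-enrichment app
}


def proxy_allows(source_ip: str) -> bool:
    """Return True if the reverse proxy should forward a request from source_ip."""
    try:
        addr = ipaddress.ip_address(source_ip)
    except ValueError:
        # Malformed addresses are rejected rather than forwarded.
        return False
    return addr in STATIC_IP_WHITELIST


if __name__ == "__main__":
    for ip in ("203.0.113.10", "203.0.113.99", "not-an-ip"):
        print(ip, proxy_allows(ip))
```

Every new application adds another entry to this set, and each entry has to be requested from the team that owns the proxy.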

Next came phase two. We knew our phase one MVP solution wasn’t going to scale, so we needed a solution that would let us move forward. With the MVP approach, everything required a static IP. How do you handle dev, test, production, and even unit testing across multiple applications? We needed a static IP for each application in each of those environments, and then had to work with the team that managed the reverse proxy to whitelist every one of those static IPs. The number of IPs and requests multiplied with every new application and environment, and we knew that wasn’t a workable solution going forward.
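The growth is easy to see with a little arithmetic: every application needs its own static IP in every environment, and each IP is another whitelist entry to request. The sketch below assumes one static IP per application per environment; the function name `whitelist_entries` and the counts are illustrative, since the post does not give AT&T’s actual numbers.

```python
from typing import Sequence

# Illustrative environment list; the post mentions unit testing, dev, test, and production.
ENVIRONMENTS: Sequence[str] = ("unit", "dev", "test", "prod")


def whitelist_entries(apps: int, environments: int, ips_per_deployment: int = 1) -> int:
    """Static IPs (and hence proxy whitelist entries) needed under the MVP pattern.

    Assumes every app is deployed to every environment and each deployment
    needs its own static IP(s).
    """
    return apps * environments * ips_per_deployment


if __name__ == "__main__":
    for apps in (5, 10, 20):
        print(apps, "apps ->", whitelist_entries(apps, len(ENVIRONMENTS)), "entries")
```

With four environments, 10 applications already need 40 entries, and each additional application adds four more, each one a separate request to a proxy team.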

Next, we worked closely with our MuleSoft success team, including Ashwin Krishnan, Giles Ramone, and our MuleSoft account manager David Bryne. They helped us evaluate options for building a platform we could move forward on, and guided us through a few solutions.

Solution one: CloudHub options

The first option was CloudHub, MuleSoft’s iPaaS with built-in infrastructure. CloudHub takes care of the difficult parts of running infrastructure for us, such as maintaining servers and applying patches.

First, we looked at an IPsec VPN, meaning we would build a VPC in CloudHub and connect to it over an IPsec VPN. The problem we ran into was that the VPC needed a CIDR block of IP addresses that we could route internally, and given the constraints on IPv4 address space, that wasn’t really an option. Another option was VPC peering, which connects two VPC networks. We didn’t like this solution because a MuleSoft account and an AT&T account would be joined together, which reduced our control over the traffic between the networks. The third option was AWS Direct Connect, which we may look at in the future.
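The IPsec VPN blocker comes down to address planning: the CloudHub VPC needs a CIDR block that does not collide with anything already routed inside the corporate network. Here is a minimal sketch of that check using Python’s `ipaddress` module; the internal ranges, candidate blocks, and the helper name `first_free_block` are all hypothetical.

```python
import ipaddress
from typing import Iterable, List, Optional

# Hypothetical ranges already routed inside the corporate network.
ROUTED_INTERNAL: List[ipaddress.IPv4Network] = [
    ipaddress.IPv4Network("10.0.0.0/9"),
    ipaddress.IPv4Network("172.16.0.0/12"),
]


def first_free_block(
    candidates: Iterable[str], routed: Iterable[ipaddress.IPv4Network]
) -> Optional[ipaddress.IPv4Network]:
    """Return the first candidate CIDR block that overlaps no routed range, or None."""
    routed_list = list(routed)  # materialize so it can be scanned once per candidate
    for cidr in candidates:
        block = ipaddress.IPv4Network(cidr)
        if not any(block.overlaps(existing) for existing in routed_list):
            return block
    return None


if __name__ == "__main__":
    free = first_free_block(["10.1.0.0/16", "172.20.0.0/16", "192.168.50.0/24"], ROUTED_INTERNAL)
    print(free, free.num_addresses if free else 0)
```

When most private IPv4 space is already in use across a large network, a search like this can come back empty, which is the kind of situation that ruled out the IPsec option.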

Solution two: On-premises option

The other solution we considered was on-premises. Most of us have done a Mule implementation at least once on a bare-metal server, a VM, or other systems already in place. So, could we make this solution work on the VMs inside our network? From an implementation perspective, the answer was yes, with no problem: we have servers, we could put an HTTP load balancer in front of them, and the solution would be viable. The problem, again, was the firewall. How would traffic get across that hard block? We looked at creating a reverse proxy to cross the firewall. However, if we reused the whitelisting approach from the MVP, we would have to whitelist every Salesforce IP address that could possibly call through that reverse proxy. The number of IPs and the amount of whitelisting required didn’t make sense.
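To gauge why whitelisting Salesforce’s addresses did not scale, it helps to count how many addresses a set of published CIDR ranges actually admits. The sketch below uses `ipaddress.collapse_addresses` to merge overlapping ranges before counting; the helper name `whitelist_size` is hypothetical, and the ranges are RFC 5737 documentation placeholders, not Salesforce’s real published IP ranges.

```python
import ipaddress
from typing import Iterable


def whitelist_size(cidrs: Iterable[str]) -> int:
    """Number of distinct IPv4 addresses admitted by a whitelist of CIDR ranges."""
    networks = [ipaddress.IPv4Network(c) for c in cidrs]
    # collapse_addresses merges overlapping and adjacent ranges so nothing is double-counted.
    return sum(net.num_addresses for net in ipaddress.collapse_addresses(networks))


# Placeholder ranges; a real list would come from Salesforce's published IP ranges.
EXAMPLE_RANGES = ["198.51.100.0/24", "198.51.100.128/25", "203.0.113.0/24", "192.0.2.0/24"]

if __name__ == "__main__":
    print(whitelist_size(EXAMPLE_RANGES))
```

Even these three placeholder /24 blocks admit 768 addresses, and the same list would have to be maintained on the proxy for every environment.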

Final solution: Runtime Fabric

That led us to Runtime Fabric. So, what is Runtime Fabric? I look at it as a software appliance: think of an appliance you install in your kitchen, but in software. Whatever platform you have (AWS, Google Cloud, Azure, or on-premises), you take the appliance and install it on your own infrastructure, which becomes the platform behind the scenes. That is essentially what we did. Runtime Fabric is Kubernetes-based, a container service, and it automates deployment and orchestration from the Anypoint Platform control plane.

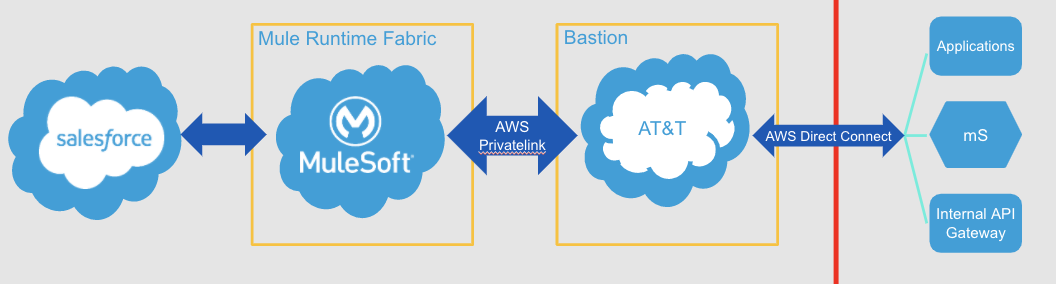

This solution also lets us combine the best of MuleSoft with the best of AWS, such as SQS, S3, and the rest of the standard functionality AWS provides, along with secure connectivity into the network through AWS PrivateLink and AWS Direct Connect. As seen in the image below, it is a three-layer cake of implementation. The base layer, which everything is built on, is AWS; on top of it sits the appliance MuleSoft provides, which plugs into our AWS implementation. You can see our pure Runtime Fabric layer depicted in the first box. The second piece is that our application runs in a standalone AWS account, which raises the question: how do we connect that account to our network?

As you can see in the image above, at AT&T we built out what we call a network zone, or bastion VPC. In the middle box, which spans the firewall, we built a proxy with a Direct Connect connection both inside and across the firewall. Lastly, we use AWS PrivateLink to connect our application’s Runtime Fabric implementation to that network zone.

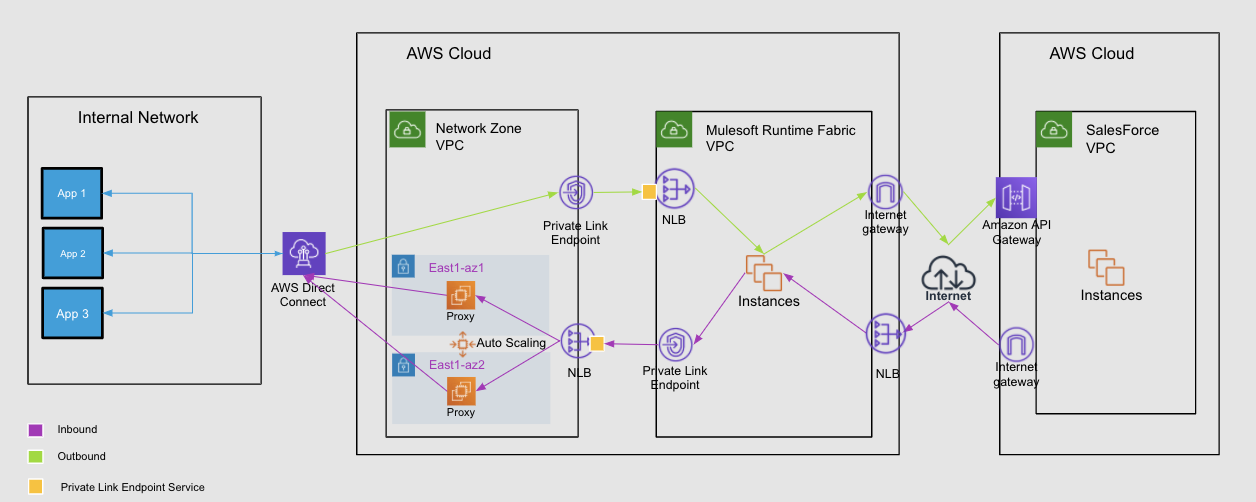

Solution network topology

Below is an overview from a networking perspective of how this looks:

The flow is bidirectional: right to left as well as left to right. From the internal network, if we want to call a Salesforce API, for example to get an opportunity or lead, we call it through a transparent layer from inside the network. You call a URL (an FQDN), the request traverses PrivateLink to Salesforce, and the data is returned to you. The same thing happens in reverse: Salesforce calls a network load balancer that routes to the Mule layer, and the request then crosses PrivateLink and Direct Connect to whatever internal application you are trying to reach.
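The bidirectional flow can be summarized as two ordered hop lists that share the same middle segment. This is a minimal sketch of one reading of the topology described above; the hop names are descriptive labels rather than AWS or MuleSoft resource names, and the placement of the Direct Connect and bastion proxy hops is an assumption drawn from the network zone description.

```python
from typing import List

# Hops shared by both directions, ordered from the internal network toward Salesforce.
# Descriptive labels reconstructed from the post, not resource identifiers.
SHARED_HOPS: List[str] = [
    "AWS Direct Connect",
    "bastion VPC proxy",
    "AWS PrivateLink",
    "Runtime Fabric (Mule app)",
]


def outbound_path(internal_caller: str) -> List[str]:
    """Internal caller -> Salesforce, e.g. fetching an opportunity or lead via an internal FQDN."""
    return [internal_caller, *SHARED_HOPS, "Salesforce API"]


def inbound_path(internal_app: str) -> List[str]:
    """Salesforce -> internal application, entering through the network load balancer."""
    return ["Salesforce", "network load balancer", *reversed(SHARED_HOPS), internal_app]


if __name__ == "__main__":
    print(" -> ".join(outbound_path("internal client")))
    print(" -> ".join(inbound_path("SAP")))
```

Modeling the shared segment once makes the symmetry explicit: the inbound path is the outbound path reversed, plus the load balancer that gives Salesforce an entry point.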

We covered how we did this, so let’s tackle the next big question: how hard was this?

Setting up MuleSoft Runtime Fabric on AWS Cloud

With the excellent documentation and Terraform scripts MuleSoft provides, our dev/test setup took a little longer, but once we had all of the steps down, it took us 15 minutes to build out the entire production environment. That included some manual steps to set up the load balancer and the PrivateLink connection. All it took was standard AWS tooling and security, plus a Terraform script we ran from our desktop. Fifteen minutes, and easy as pie. One thing that has really progressed over the lifecycle of the Runtime Fabric product is the tooling, implementation, logging, and stability, all of which have improved greatly over the last year.

So does this solution work and meet our needs? Yes! It meets our requirement to provide connectivity from our internal network to Salesforce and vice versa. It is also a reusable pattern, with multiple teams across AT&T now using it. And it’s extensible for our future needs: as we grow, we can add workers to scale horizontally, or, if we need more capacity per node, scale vertically by increasing server sizes, such as going from large to extra-large.
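The two scaling directions can be compared with simple capacity arithmetic: horizontal scaling multiplies the number of workers, while vertical scaling increases what each worker provides. The sketch assumes AWS m5 instances (m5.large: 2 vCPUs, 8 GiB; m5.xlarge: 4 vCPUs, 16 GiB) purely for illustration, since the post does not say which instance family AT&T uses; `cluster_capacity` is a hypothetical helper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class InstanceSize:
    name: str
    vcpus: int
    memory_gib: int


# Illustrative AWS general-purpose sizes; the post only says "large" and "extra large".
LARGE = InstanceSize("m5.large", 2, 8)
XLARGE = InstanceSize("m5.xlarge", 4, 16)


def cluster_capacity(workers: int, size: InstanceSize) -> Tuple[int, int]:
    """Total (vCPUs, GiB of memory) across identical worker nodes."""
    return workers * size.vcpus, workers * size.memory_gib


if __name__ == "__main__":
    print("baseline:  ", cluster_capacity(3, LARGE))
    print("horizontal:", cluster_capacity(4, LARGE))   # one more worker
    print("vertical:  ", cluster_capacity(3, XLARGE))  # same workers, bigger nodes
```

Adding a worker grows capacity in small increments, while moving up one instance size doubles per-node capacity in this example, which suits workloads where individual applications need more CPU or memory.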

MuleSoft engineering’s work on this product has been exemplary. We started with a difficult implementation and ended up with a stable platform. One of the things we are really excited about is Salesforce Private Connect, Salesforce’s implementation of the same pattern we currently run with our AWS account, but over a fully private connection. Rather than going over the internet to AWS and then over PrivateLink, traffic will travel over a private network connection all the way from Salesforce to AT&T’s internal networks. Our security teams are excited about this because there won’t be any internet traversal at all.

Key takeaways:

As a quick recap, this blog covered the different patterns we considered for accessing internal assets across a firewall. I also discussed how Runtime Fabric provides an extensible appliance that lets us follow AWS best practices and patterns and make full use of AWS tooling. It is great to have the best of both worlds with Runtime Fabric and AWS: a cloud we manage ourselves, with all of its tooling, and a MuleSoft appliance we can simply plug in. You can also find more information in the recording of my presentation below.

If you have any questions, please reach out to Brad on LinkedIn.