A little while ago we decided that it was time to include the option of asynchronous loggers in Mule ESB.

The asynchronous implementation is faster than the synchronous one because the process waits for IO on a different thread, instead of on the main execution thread, allowing it to continue its execution.

After some research, we decided to use LogBack or Log4j2, both are successors of the Log4j framework.

To choose between the two, we ran performance tests in our performance lab comparing Log4j, Logj2, LogBack and no-logging. The following Mule app was created and used in all the tests.

At each flow execution, the HTTP request gets an iterator from a java component which logs N times the same payload generated at the variable component. Inside the for each block, the variable is then copied into the payload and written to the file by the Logger.

The flow is created this way to enable multiple threads to log concurrently. This behavior is needed in order to stress the disk IO properly without being bottlenecked by the HTTP component or something else.

This app was tested on mule 3.5.1 for the log4j1 case, two vamped 3.6.0 snapshots with LogBack and Log4j2 underlaying implementations built by Mariano Gonzalez (who was the engineer came up with idea) as a POC.

Let’s check out the results:

We can see how the throughput of the application behaves. As the Concurrency increases, the throughput saturates and stabilize as it becomes latency bound by either the application execution, or disk IO latency. Below we have identified the three cases:

- Log4j2 and NoLogging: Application bound. This is obvious when there is no logging. When Log4j2 is used, it logs asynchronously and therefore, its throughput is almost as good as the application without logging.

- Log4j1: Disk IO bound. At low concurrency, the disk IO is fast enough to serve the requests but as the concurrency increases, disk IO becomes the bottleneck and the process throughput is limited by the latency of the disk IO.

- LogBack: Similar to Log4j1, the velocity degrades steeply, but the reason isn’t exactly the same as Log4j. From application profiling, we found that the usage of the inner queues to schedule the asynchronous logs causes a lot of Garbage Collecting, if you want to know more about why this happens, you can look into their mailing list.

The clear winner of this bakeoff tests is Log4j2 which uses the LMAX Disruptor to handle concurrency. Thanks to its circular buffer, it provides better scalability, latency, concurrency overhead and throughput than the other options.

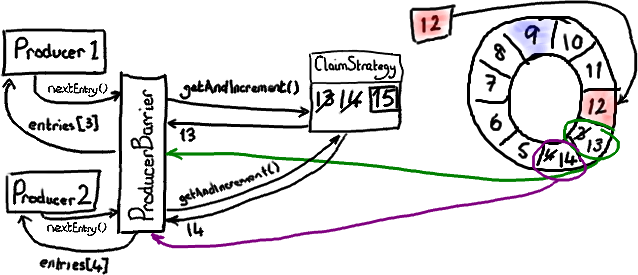

This is a simple sketch of how the LMAX Disruptor woks:

At the heart of the disruptor mechanism sits a pre-allocated bounded data structure in the form of a ring-buffer. Data is added to the ring buffer through one or more producers and processed by one or more consumers.

Technical source: http://disruptor.googlecode.com/files/Disruptor-1.0.pdf

This upgrade is available on Mule 3.6 to know more about how to configure and migrate from older versions you can read our documentation and Mariano’s blog post on Asynchronous Logging in Mule 3.6.

Hope this was helpful! If you have any questions or advice, please feel free to comment below!

Thanks for your time!

Ready to get started? Download Mule ESB now »