In his “To ESB or not to ESB” series of posts, Ross Mason identified four common architectures for which Mule is a great fit: ESB, Hub’n’Spoke, API/Service Layer and Grid Processing. In this post we detail an example of the last of these, Grid Processing: we will use Mule to build a scalable image resizing service.

Here is the overall architecture of what we intend to build:

As you can see, we allow end users to upload images to a set of load-balanced Mule instances. Behind the scenes, we rely on Amazon S3 to store images waiting to be resized and on Amazon SQS as the queuing mechanism for pending resizing jobs. We integrate with any SMTP server to send the resized image back to the end user’s mailbox. As the number of users grows, we grow the processing grid simply by adding more Mule instances: since each instance is configured exactly the same way, the solution scales horizontally.
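The horizontal-scaling argument rests on identical workers competing for jobs on one shared queue. Here is a minimal sketch of that idea. It assumes Python’s in-process `queue.Queue` and threads as stand-ins for SQS and for separate Mule instances; neither is an SQS client or a Mule API. Every worker runs the same code, so scaling out only means starting more of them.

```python
import queue
import threading

# Hypothetical stand-in for the SQS queue: an in-process, thread-safe FIFO.
jobs: queue.Queue = queue.Queue()
results: list[tuple[str, int]] = []
results_lock = threading.Lock()


def worker(name: str) -> None:
    """Identical code for every worker, like identically configured Mule instances."""
    while True:
        job = jobs.get()  # blocks until a job (or the stop sentinel) arrives
        if job is None:  # sentinel: no more work for this worker
            return
        with results_lock:
            results.append((name, job))  # "process" the job by recording who took it


def run_grid(num_workers: int, num_jobs: int) -> list[tuple[str, int]]:
    """Start num_workers identical workers, enqueue num_jobs jobs, wait for completion."""
    results.clear()
    threads = [
        threading.Thread(target=worker, args=(f"worker-{i}",))
        for i in range(num_workers)
    ]
    for t in threads:
        t.start()
    for job_id in range(num_jobs):
        jobs.put(job_id)
    for _ in threads:
        jobs.put(None)  # one sentinel per worker, queued after all the jobs
    for t in threads:
        t.join()
    return list(results)


# Scaling out is just a larger num_workers; the worker code itself does not change.
print(len(run_grid(num_workers=4, num_jobs=20)))  # prints 20
```

Because the queue is FIFO and the sentinels are enqueued after every job, all jobs are taken before any worker stops. Which worker handles which job is left to the queue, just as SQS spreads messages across whichever Mule instances poll it.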

Read on to discover how easy Mule makes the construction of such a processing grid…

[ The complete application is available on GitHub. In this post, we will detail and comment on some aspects of it. ]

There are two main flows in the application we are building:

- Job Creation – where an image can be uploaded and a resize request created,

- Job Worker – where the image resizing is done and the result emailed back to the user.

There is another ancillary flow that kicks in before these two: it’s the flow that takes care of serving the image upload form. Let’s start by looking at it.

Submission Form

Mule is capable of serving static content over HTTP, using a message processor called the static resource handler.

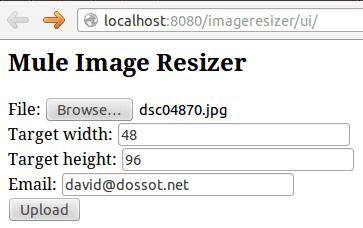

With the above flow, we’re able to serve a basic HTML form that a browser can use to upload an image and to specify the desired size and the email address that should receive the result.

This form submits data to Mule using the multipart/form-data encoding type. Let’s now look at the flow that receives these submissions.
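Mule decodes the multipart/form-data submission for us. To show what such a body actually contains, here is a minimal sketch, outside of Mule, that splits one into its fields with Python’s standard `email` package. The field names (`email`, `size`, `image`), the boundary and the fake image bytes are hypothetical and do not necessarily match the application’s form.

```python
from email import policy
from email.parser import BytesParser


def parse_form_data(body: bytes, content_type: str) -> dict[str, bytes]:
    """Split a multipart/form-data body into {field name: raw bytes}."""
    # The email parser expects the Content-Type header (carrying the boundary) before the body.
    raw = b"Content-Type: " + content_type.encode("ascii") + b"\r\n\r\n" + body
    message = BytesParser(policy=policy.default).parsebytes(raw)
    fields: dict[str, bytes] = {}
    for part in message.iter_parts():
        # Each part names its form field in the Content-Disposition header.
        name = part.get_param("name", header="content-disposition")
        fields[name] = part.get_payload(decode=True)
    return fields


# A hypothetical browser submission with three fields.
boundary = "XyZ123"
body = (
    f"--{boundary}\r\n"
    'Content-Disposition: form-data; name="email"\r\n\r\n'
    "user@example.com\r\n"
    f"--{boundary}\r\n"
    'Content-Disposition: form-data; name="size"\r\n\r\n'
    "200x200\r\n"
    f"--{boundary}\r\n"
    'Content-Disposition: form-data; name="image"; filename="cat.png"\r\n'
    "Content-Type: image/png\r\n\r\n"
).encode("ascii") + b"\x89PNG-not-really-an-image" + f"\r\n--{boundary}--\r\n".encode("ascii")

fields = parse_form_data(body, f"multipart/form-data; boundary={boundary}")
```

The text fields and the binary image travel in the same request, each in its own part. This is why the job creation flow can store the image in S3 and put the size and email address into the job metadata.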

Job Creation

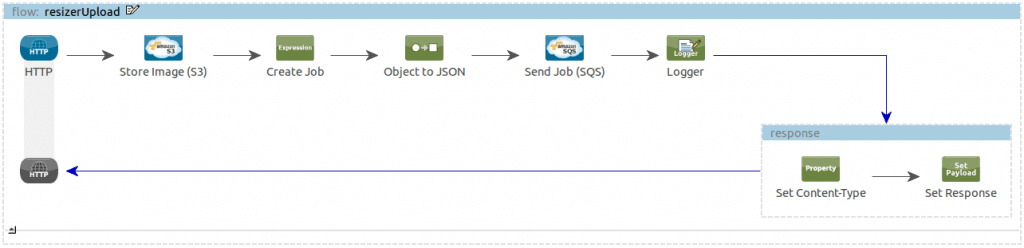

The flow below shows what happens when a user uploads an image to Mule:

What happens in this flow consists of the following:

- The multipart/form-data encoded HTTP request is received by an HTTP inbound endpoint,

- The image is persisted in S3,

- The job meta-data (unique ID, target size, user email, image type) is stored in a JSON object and sent over SQS,

- The success of the operation is logged (including the ID for traceability),

- A simple text response is assembled and returned to the caller; it is displayed in the user’s browser.
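The steps of the job creation flow can be sketched in a few lines. This is a minimal illustration and assumes plain Python objects in place of Mule’s connectors: the hypothetical `object_store` dict stands in for the S3 bucket, and the `job_queue` list stands in for SQS. Two further details are also assumptions: storing the image under the job ID as its key, and the JSON field names (`id`, `size`, `email`, `type`).

```python
import json
import logging
import uuid

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("job-creation")

# Hypothetical in-memory stand-ins for the S3 bucket and the SQS queue.
object_store: dict[str, bytes] = {}
job_queue: list[str] = []


def create_job(image: bytes, image_type: str, target_size: str, email: str) -> str:
    """Persist the image, enqueue the job metadata as JSON, log, and return a text reply."""
    job_id = str(uuid.uuid4())  # unique ID, assumed here to double as the storage key
    object_store[job_id] = image  # 1. persist the image ("S3")
    job = {"id": job_id, "size": target_size, "email": email, "type": image_type}
    job_queue.append(json.dumps(job))  # 2. send the job metadata as JSON ("SQS")
    log.info("Created resize job %s", job_id)  # 3. log success, including the ID
    # 4. plain-text response displayed in the user's browser
    return f"Image received (job {job_id}); the resized image will be emailed to {email}."


reply = create_job(b"raw image bytes", "image/png", "200x200", "user@example.com")
```

Only the metadata goes through the queue, while the image bytes stay in the object store. That keeps queue messages small, and any worker can fetch the image later by ID.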

When this flow is done, it is up to a worker to pick up the message from SQS and perform the actual image resizing.

Job Worker

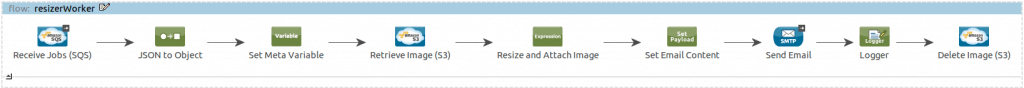

The worker flow is the longest one:

In this flow, the following happens:

- The job metadata is received from SQS, deserialized to a java.util.Map and stored in a flow variable for later access,

- The source image is retrieved from S3,

- It is then resized and the result is attached to the current message, which will automatically be used as an email attachment,

- A simple confirmation text is set as the message payload, which will be used as the email content,

- The email is sent over SMTP,

- The success of the operation is recorded,

- And finally the source image is deleted from S3.
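The worker steps above can be sketched as a single function. This is a minimal sketch, not the application’s code, and it makes several assumptions. The job JSON uses hypothetical field names (`id`, `size`, `email`, `type`), and `type` is taken to be a MIME type such as `image/png`. A dict stands in for S3. The hypothetical `resize` and `send` callables stand in for the actual resizing step and for the SMTP connector. The email itself is built with the standard library’s `EmailMessage`.

```python
import json
import logging
from email.message import EmailMessage
from typing import Callable

log = logging.getLogger("job-worker")


def handle_job(
    raw_message: str,
    object_store: dict[str, bytes],
    resize: Callable[[bytes, str], bytes],  # hypothetical resizing function
    send: Callable[[EmailMessage], None],  # hypothetical stand-in for SMTP delivery
    sender: str = "resizer@example.com",
) -> None:
    job = json.loads(raw_message)  # 1. deserialize the job metadata into a dict
    source = object_store[job["id"]]  # 2. retrieve the source image ("S3")
    resized = resize(source, job["size"])  # 3. resize it
    maintype, subtype = job["type"].split("/", 1)

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = job["email"]
    msg["Subject"] = "Your resized image"
    # 4. confirmation text as the body; set it before adding the attachment,
    #    since add_attachment turns the message into multipart/mixed
    msg.set_content(f"Here is your image, resized to {job['size']}.")
    msg.add_attachment(resized, maintype=maintype, subtype=subtype,
                       filename=f"resized.{subtype}")

    send(msg)  # 5. send the email
    log.info("Completed resize job %s", job["id"])  # 6. record success
    object_store.pop(job["id"], None)  # 7. finally, delete the source image
```

In a real deployment `send` could wrap `smtplib.SMTP(host).send_message(msg)`; injecting it here just keeps the sketch runnable without a mail server. As in the flow, success is recorded before the source image is deleted.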

We record success before deleting the image from S3 because, as far as the end user is concerned, at this point we are done and have successfully performed our task.

Note that SQS guarantees at-least-once message delivery, which means the same job may be delivered more than once. In our case, we could then try to perform the resize several times, either failing to find the already deleted image or, if it is still there, sending the resized image several times. This is not critical, so we can live with it; if it were a problem, we would add an idempotent filter right after the SQS message source, using the unique job ID as the message ID that the filter checks.
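The idempotent filter idea boils down to remembering which job IDs have already been let through. This is a minimal sketch of that logic, not Mule’s filter implementation. The class name is hypothetical, and the seen IDs are kept in a per-process set. With several Mule instances in the grid, a redelivery can land on a different instance, so the store of seen IDs would need to be shared for the filter to catch it.

```python
import json


class IdempotentJobFilter:
    """Let each job ID through once and drop redeliveries (at-least-once semantics)."""

    def __init__(self) -> None:
        self._seen: set[str] = set()  # in-memory, per-process record of accepted IDs

    def accept(self, raw_message: str) -> bool:
        job_id = json.loads(raw_message)["id"]  # the unique job ID acts as the message ID
        if job_id in self._seen:
            return False  # duplicate delivery: skip it
        self._seen.add(job_id)
        return True


deliveries = [
    json.dumps({"id": "a", "size": "100x100"}),
    json.dumps({"id": "b", "size": "50x50"}),
    json.dumps({"id": "a", "size": "100x100"}),  # SQS redelivers job "a"
]
job_filter = IdempotentJobFilter()
handled = [json.loads(m)["id"] for m in deliveries if job_filter.accept(m)]
```

Here an ID is recorded as soon as the message passes the filter. If processing then fails, a later redelivery of that job is dropped too, which is the trade-off of placing the filter right after the message source.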

Conclusion

As detailed by Ross in the aforementioned series of posts, Mule’s reach extends way beyond pure integration. We’ve seen in this post that Mule provides a wide range of primitives that can be used to build processing grids.

Have you built such a Mule-powered processing grid? If so, please share the details in the comment section of this post!