There are several steps that go into updating a CloudHub-hosted runtime engine, including security updates and data patching that are applied directly to the runtime engine. Patch and minor version updates must be applied to the runtimes by the application owner. These updates require regression testing of the applications, but seldom require changes to application code.

However, major version updates, such as Mule 3.9.4 to Mule 4.3.0, require careful planning, code rewrites, and extensive testing of the application. This article shares Air Canada’s journey in carrying out this major version upgrade for its CloudHub-hosted Mule applications that implement core reservations and departure control APIs.

Why migrate from Mule 3.x to Mule 4.x

Reasons to upgrade from Mule 3.x to Mule 4.x include application maintenance simplicity, extensibility, and performance. Below are the specific reasons that prompted Air Canada to kick-start its migration project:

1. Get ahead of “end of support” for Mule 3.9

Even though the end of extended support for Mule 3.9 was three to four years away, it was prudent to start the Mule 3.x to 4.x migration early so that it would not have to compete with business initiatives for user story prioritization, resources, and release cycles.

2. Minimize technical debt

An influx of work from cost-saving and digital transformation initiatives meant extensive enhancements to our APIs. By performing the Mule 3.x to 4.x migration sooner rather than later, we could minimize the buildup of technical debt and rework.

3. Performance and efficiency

Another reason was to take advantage of the improved performance and efficiency of the connectors and runtime in Mule 4.x compared to Mule 3.x. This would in turn reduce the resource requirements of the migrated applications, lowering the total cost of ownership (TCO).

4. Administration and maintenance

In addition to the reservations and departure control APIs, Air Canada has other application portfolios (e.g., loyalty, cargo, fuel management, operations) implemented on MuleSoft CloudHub that were built later. Most of these applications were already on Mule 4.x. Migrating the older APIs to Mule 4.x makes the runtime consistent across all application portfolios and improves administration efficiency and maintainability.

Migration approach

At a high level, two migration approaches were considered:

- Big bang: All applications are migrated at once

- Progressive: Application(s) are migrated in smaller groups

Our application portfolio consisted of a number of application groups, so a big-bang migration of all of them would have been a challenge. Given the varying business criticality and dynamic demand for functional changes across these application groups, we chose a progressive approach, prioritizing the application groups with a lower volume of business changes.

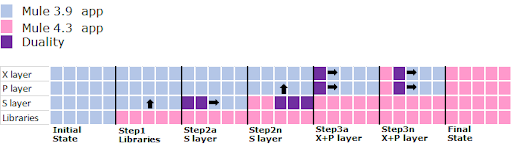

We considered two methods of the progressive migration approach:

- Migrate an application group (experience, process, and system layer applications) simultaneously.

- Migrate common libraries and system layer applications first, then experience layer applications, followed by process layer applications.

We selected the second option since it provided granularity and flexibility when it came to choosing which apps to migrate.

Estimated effort

For the migration, we created a custom estimation template that calculates the overall effort based on the following factors:

- Number of Mule configuration files (XML) and the number of elements in each of these files

- Custom configurations/connectors to be migrated

- Migration of common libraries

- Custom Java code to be migrated

- Total number of DWL (DataWeave) files and their complexity, based on the number of lines and the business/transformation logic

- MUnit migration

- Code optimization and redesign
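A template of this kind essentially reduces to a weighted sum over the factors listed above. The sketch below is purely illustrative: the factor names, the per-unit hour weights, and the sample counts are hypothetical placeholders, not values from Air Canada's template, and any real weights would need to be calibrated against a pilot migration.

```python
# Hypothetical effort weights, in hours per unit of each inventory item.
HOURS_PER_UNIT = {
    "xml_elements": 0.25,       # elements across all Mule configuration XML files
    "custom_connectors": 16.0,  # custom configurations/connectors
    "common_libraries": 24.0,   # shared libraries to migrate
    "java_classes": 4.0,        # custom Java code
    "dwl_lines": 0.125,         # DataWeave lines, as a rough proxy for complexity
    "munit_tests": 1.5,         # MUnit tests to migrate
}


def estimate_hours(counts, redesign_factor=0.0):
    """Return the weighted sum of the counts, uplifted for optimization/redesign.

    counts maps factor names to item counts; redesign_factor is a fractional
    uplift (0.25 means 25% extra effort for code optimization and redesign).
    """
    unknown = set(counts) - set(HOURS_PER_UNIT)
    if unknown:
        raise ValueError(f"unknown factors: {sorted(unknown)}")
    base = sum(HOURS_PER_UNIT[name] * count for name, count in counts.items())
    return base * (1.0 + redesign_factor)


# Made-up inventory for a single application.
sample = {
    "xml_elements": 400,
    "custom_connectors": 1,
    "java_classes": 5,
    "dwl_lines": 800,
    "munit_tests": 40,
}
print(estimate_hours(sample))                        # 296.0
print(estimate_hours(sample, redesign_factor=0.25))  # 370.0
```

Keeping the weights in a single table makes it easy to recalibrate them after the pilot without touching the calculation itself.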

Mule Migration Assistant (MMA) was used extensively for the migration. In fact, all the artifacts were first migrated using the MMA tool, and the individual components were then verified for accuracy. Manual code changes were made as necessary to finalize the migration. Using MMA saved us approximately 20% of the overall migration time for our applications.

Staffing

The reservations and departure control application portfolio consists of eight application groups. Each application group is related to an individual functional domain (e.g., seat maps, shopping, booking). Following MuleSoft’s best practices, an application group consists of three applications: an experience API (x-API), a process API (p-API), and a system API (s-API). A set of common libraries implements common utility functions, error handling, and so on. These libraries are shared by all application groups. The eight application groups are assigned across three pods (development teams). These are some of the key staffing decisions that we made:

- We chose to use the simplest application groups with minimal dependency on common libraries as a pilot. The pilot was launched to gain early insight about the MMA tool, identify the cutover preparatory steps, and implement the canary policy for failover. The pilot team was staffed with the most experienced architect and a senior developer on the team, as this was a critical exploratory phase.

- Once the pilot was successfully completed, a core migration project team was created with an architect and four developers drawn from the three pods, with skills ranging from foundation to intermediate to experienced. This team was responsible for migrating the common libraries, as well as all system-layer and experience-layer applications.

- During the migration of process-layer applications — which were the heaviest in terms of functional complexity — a senior developer who is the subject matter expert from the respective application pod would collaborate with the core migration project team.

- Functional and performance testing was done by a separate, dedicated test team using a combination of automated and manual regression test suites. The test team always consisted of the same two people.

This staffing approach ensured that migration work progressed in the fastest and most efficient manner possible, because the core migration team became very adept at the migration tasks. It also allowed us to reduce the impact on the velocity of change for the business-critical projects delivered by the three application pods.

Planning the cutover

Several approaches for cutting over from Mule 3.x to Mule 4.x applications were considered for the roll-out strategy:

- Big bang: This approach involves cutting over 100% of the traffic to the required version of the application. This approach has two options:

- a) Update load balancer rules to route traffic to the Mule 4.x application.

- b) Deploy the Mule 4.x application under the same name as the Mule 3.x application, so that the Mule 3.x application is overwritten.

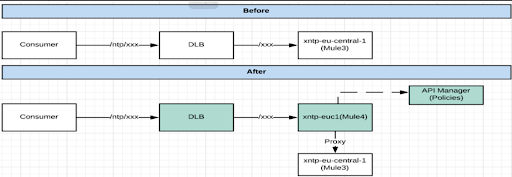

- Phased cutover: This approach involves cutting over traffic from one version of the app to the other in a phased and controlled manner so that the performance of the new Mule 4.x application can be observed.

- a) Implement the phased routing of traffic using a custom-built proxy application.

- b) Implement a Mule 4.x policy for canary deployment.

- Control traffic routing external to MuleSoft: This approach would use gateway components external to the CloudHub implementation to control the routing of traffic from one version of the application to the other.

Option 2b (phased cutover using a Mule 4.x canary deployment policy) was chosen because:

- It gives better, more granular control over the entire cutover activity.

- Rollout is controlled via API Manager.

- Only a minimal, one-time load balancer change is involved.

- Minimal cleanup steps are required post-rollout.
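Conceptually, a canary policy sends a configurable percentage of requests to the new application and the remainder to the old one. The following sketch illustrates that idea only. It is not the Mule policy itself, and the function name, the upstream labels, and the hash-based bucketing scheme are assumptions made for illustration.

```python
import hashlib


def choose_upstream(request_id, canary_percent):
    """Return "mule4" for roughly canary_percent% of request IDs, else "mule3".

    The decision is a pure function of the request ID, so a given ID is
    always routed the same way for a given percentage.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the request ID into a stable bucket in the range [0, 100).
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "mule4" if bucket < canary_percent else "mule3"


# At 0% everything stays on the Mule 3.x application; at 100% the cutover is complete.
print(choose_upstream("req-123", 0))    # mule3
print(choose_upstream("req-123", 100))  # mule4
```

Because the bucket for a request ID never changes, raising the percentage only ever moves additional traffic to the new application, and setting it back to 0 acts as an immediate rollback.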

Cutover preparatory tasks

Some of the preparatory tasks for cutting over each Mule 4.x application are listed below:

- Soft deployment of the Mule 4.x application: This should be done a few days ahead of the business cutover. It is necessary in order to perform some of the other preparatory configuration tasks, as well as sanity and connectivity testing.

- Copying API contracts: The Mule 4 API instance needs to be created in API Manager. The API contracts from the Mule 3.x instance must be copied over to the Mule 4 API instance. This can be scripted for efficiency and to avoid errors.

- Configure the canary policy: This will be set to 0% initially so that all traffic is routed to the Mule 3 application once the traffic starts flowing.

- Confirm runtime configuration parameters: Determine whether the configuration of higher-layer applications needs to be updated to route traffic to the Mule 4 application endpoint, and identify the configuration details.

- Sanity/connectivity testing for the Mule 4 application: Using Postman or another testing tool, test the Mule 4 application directly to ensure it is configured correctly and can establish connectivity to its backend services/APIs. Because this is the production environment, only read-only APIs should be used for this purpose.

Planning vCores

A key aspect of the phased cutover strategy is that both the Mule 3.x application and the Mule 4.x application need to run in the production environment for a period of time, until the cutover is complete and the Mule 3 application can be shut down and decommissioned.

The period of co-existence for the two applications could vary from a few days to a few weeks. vCore consumption for these applications would be double the usual amount during this period.

Depending on your licensing terms with MuleSoft, you need to carefully factor this into the cutover planning to ensure you don’t exhaust your total allocated vCores. For our cutover, we made sure at least 20% of our total vCores remained available in case of higher volumes of production traffic and other scalability concerns.
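The capacity check described above is simple arithmetic: the applications being cut over are counted twice during co-existence, and the remaining free vCores are compared against the reserve. The sketch below works through it with hypothetical numbers (a 40-vCore allocation, 24 vCores normally in use, and 6 of those belonging to the applications being cut over). The function name and parameters are illustrative assumptions.

```python
def coexistence_check(total, in_use, migrating, reserve_fraction=0.20):
    """Return (peak_usage, free_vcores, reserve_ok) for the co-existence period.

    total:      vCores allocated under the license
    in_use:     vCores normally consumed in production
    migrating:  vCores used by the Mule 3.x apps being cut over (part of in_use);
                their Mule 4.x counterparts need the same amount again
    """
    peak = in_use + migrating  # the migrating apps run twice, side by side
    free = total - peak
    return peak, free, free >= reserve_fraction * total


print(coexistence_check(40, 24, 6))   # (30, 10, True): 10 free vCores vs. a reserve of 8
print(coexistence_check(40, 24, 10))  # (34, 6, False): cut over in smaller batches
```

When the check fails, one option is to cut over fewer applications at a time so that the co-existence peak stays within the reserve.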

Lessons learned

After completing our Mule migration, there were a few key lessons we learned based on our successes and errors. We provide more detail on each below.

- Expect the best, plan for the worst. Per our original plan, the migration of the eight application groups was expected to be completed in ~11 months by a fully dedicated team. However, interruptions and delays crept in. The only way to plan for the worst is to keep your plan agile and to replan constantly, taking into account the variables at play throughout the delivery phase. This also highlights why it is important to plan the migration as early as possible, given the hard end date of extended support for your older MuleSoft version.

- There are Mule 4 optimizations that must be considered to take advantage of performance improvements from the migration. In some cases, deeper remediation of flows and connectors is necessary with an understanding of Mule 4 differences.

- Performance and longevity testing is a big part of the migration process. In our applications, certain payloads caused different behavior that required specific remediation to operate in the Mule 4 runtime. For instance, in some edge cases, the Mule 4 version of the WSC (Web Service Consumer) connector performed worse than the Mule 3 version. We overcame this by creating a custom WSC connector that extends the Mule 4 HTTP connector.

- Even the best functional and performance testing may not fully represent production behavior. It is necessary to closely observe the application in production for a couple of weeks after cutover and to take quick remedial action if needed, such as routing traffic back to the Mule 3 application instance using the canary policy or tweaking the vCore/worker configuration to address runtime performance variations.

- Some of the observations during the code migration:

- Authentication result structure has changed in Mule 4.x. Previously, it was passed in a “_clientName” variable. Now, it is passed within an authentication structure.

- RAML parsing is stricter in Mule 4.x.

- Default values are not set by the APIkit Router. This is important because in Mule 3 some headers are replaced by default values if not set.

- There is a difference in the way DataWeave 2.0 (dw2) and DataWeave 1.0 (dw1) treat empty XML elements. dw2 treats them as null, so those fields are removed from the final JSON. dw1 treats them as empty strings, so they appear in the response. (left is Mule 3, right is Mule 4)

- DataWeave reader properties must be used to support backward-compatible behavior.

- Some Anypoint Studio issues that were observed:

- Unsaved DataWeave changes are lost when switching tabs.

- Random issues with classpath can be resolved by re-building projects.

- Studio is unable to resolve DWL files from libraries on the classpath; however, the references work when the application runs.
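To make the empty XML element observation above concrete, the following Python analogy reproduces the two behaviors described. It is not DataWeave; the function name and the sample payload are hypothetical. A comparison like this can serve as the basis for a regression assertion on migrated transformations.

```python
import json
import xml.etree.ElementTree as ET


def xml_to_dict(xml_text, empty_as_null):
    """Map each child of the root element to its text content.

    empty_as_null=False mimics the dw1 behavior described above (empty
    elements become empty strings); empty_as_null=True mimics the dw2
    behavior (empty elements are treated as null and dropped).
    """
    root = ET.fromstring(xml_text)
    result = {}
    for child in root:
        text = (child.text or "").strip()
        if text:
            result[child.tag] = text
        elif not empty_as_null:
            result[child.tag] = ""  # keep the field as an empty string
        # otherwise the field is omitted from the output entirely
    return result


SAMPLE = "<passenger><name>Ada</name><middleName/></passenger>"
print(json.dumps(xml_to_dict(SAMPLE, empty_as_null=False)))
# {"name": "Ada", "middleName": ""}
print(json.dumps(xml_to_dict(SAMPLE, empty_as_null=True)))
# {"name": "Ada"}
```

API consumers that expect the field to always be present would break on the second output, which is why this difference needs to be caught in regression testing.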

Summary

Mule 3.x to 4.x migration is complex, and there is no silver bullet. You must carefully consider your application network, the frequency of changes to the applications, and the upcoming pipeline of work driven by business priorities, and make migration approach and staffing decisions accordingly. Having a robust cutover plan along with a predefined set of preparatory tasks is also critical to a successful migration. Lastly, though it may sound cliché, the importance of having an agile and nimble project plan that can be continually replanned cannot be overemphasized.

The end result was that we had an up-to-date, well-performing, and maintainable integration platform based on Mule 4. This is certainly worthwhile for an enterprise of any size and complexity.

Learn more about the Mule Migration Assistant (MMA).

Additional authors: Suhail Muhammad, Charnjit Gill, Sudhish Sikhamani