Mule Clustering is the easiest way to transparently synchronize state across Mule applications in a single data center. Mule Clustering, however, assumes that the Mule nodes are “close” to each other in terms of network topology, typically on the same network. This allows Mule applications to be developed independently of the underlying cluster technology, without explicitly accounting for scenarios like network latency or cluster partitioning.

These assumptions aren’t as sound when dealing with multi-data center deployments. Unless you’re lucky enough to have fast and reliable interconnects between your DCs, you need to start accounting for latency between data centers, a remote data center going offline, and similar failures. In such situations the choice of a data synchronization mechanism becomes paramount.

The Cassandra Object Store

Mule’s state storage and synchronization features are implemented via object stores, which provide a generic mechanism for storing data. Message processors like the idempotent-message-filter and the until-successful router use object stores under the covers to maintain their state. Object stores are typically transparent to the Mule application: Mule picks an appropriate default object store based on the environment the application is running in.
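To make the idea of a "generic mechanism to store data" concrete, here is a minimal Python sketch of the kind of contract an object store exposes. It is not Mule's Java API: the class and method names are hypothetical, chosen only to mirror the check/store/retrieve/remove operations a processor such as an idempotent filter needs.

```python
from abc import ABC, abstractmethod
from typing import Dict, Hashable


class ObjectStore(ABC):
    """Hypothetical key/value contract; NOT Mule's actual ObjectStore interface."""

    @abstractmethod
    def contains(self, key: Hashable) -> bool: ...

    @abstractmethod
    def store(self, key: Hashable, value: bytes) -> None: ...

    @abstractmethod
    def retrieve(self, key: Hashable) -> bytes: ...

    @abstractmethod
    def remove(self, key: Hashable) -> bytes: ...


class InMemoryObjectStore(ObjectStore):
    """Single-node store: fine inside one process, invisible to every other node."""

    def __init__(self) -> None:
        self._data: Dict[Hashable, bytes] = {}

    def contains(self, key: Hashable) -> bool:
        return key in self._data

    def store(self, key: Hashable, value: bytes) -> None:
        # This sketch refuses silent overwrites so callers can detect duplicates.
        if key in self._data:
            raise KeyError(f"key already stored: {key!r}")
        self._data[key] = value

    def retrieve(self, key: Hashable) -> bytes:
        return self._data[key]

    def remove(self, key: Hashable) -> bytes:
        return self._data.pop(key)
```

Because a message processor would only talk to the abstract contract, swapping the in-memory implementation for one backed by a replicated database changes where the state lives without changing the processor itself.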

When synchronizing state across data centers with Mule applications, however, you should consider an object-store implementation that can handle network latency and partitioning. Version 1.1 of the Mule Cassandra Module introduces preliminary support for such an object-store.

Configuring the CassandraDBObjectStore

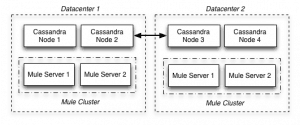

Apache Cassandra is a column-based, distributed database architected for multi-data center deployments. The Cassandra Module’s “CassandraDBObjectStore” lets you use Cassandra to replicate object store state across data centers. Let’s consider the following topology for an imaginary Mule application that needs to distribute the state of an idempotent-message-filter across DCs:

In this topology we have two independent Mule clusters, one in each data center, and a single Cassandra cluster that spans both data centers. Our Mule application will use the default object store for all use cases except the flow we want to apply the idempotent-message-filter to; for that flow we’ll use the object store provided by the Cassandra Module. Let’s see how this looks.

We start off by wiring up a Spring bean to define the CassandraDBObjectStoreReference. In this case we set the host to cassandrab.acmesoft.com, the address of a load balancer that round-robins requests across the Cassandra nodes local to the Mule cluster’s data center. We’ve dedicated a keyspace to this application and called it “MuleState”. Since we want to ensure the same message can’t be processed in both data centers, we’ve set the consistencyLevel of the object store to “ALL”. This requires every replica of the row, in every data center, to acknowledge the write before it succeeds.
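What "ALL" costs can be seen by counting how many replica acknowledgements a write waits for under each consistency level. The sketch below assumes, hypothetically, that the MuleState keyspace keeps three replicas in each of two data centers named "DC1" and "DC2"; the post does not state the real replication settings.

```python
from typing import Dict


def required_acks(level: str, rf_by_dc: Dict[str, int], local_dc: str) -> int:
    """Replica acknowledgements a Cassandra write needs before it is reported successful."""
    total = sum(rf_by_dc.values())
    if level == "ALL":
        return total                      # every replica, in every data center
    if level == "QUORUM":
        return total // 2 + 1             # majority of all replicas, across DCs
    if level == "LOCAL_QUORUM":
        return rf_by_dc[local_dc] // 2 + 1  # majority of the coordinator's DC only
    if level == "ONE":
        return 1
    raise ValueError(f"consistency level not covered by this sketch: {level}")


# Assumed replication: three replicas per data center.
rf = {"DC1": 3, "DC2": 3}
for level in ("ALL", "QUORUM", "LOCAL_QUORUM", "ONE"):
    print(level, required_acks(level, rf, "DC1"))
```

Under these assumptions ALL waits for 6 acknowledgements, including the 3 in the remote data center, which is why it gives the strongest cross-DC guarantee and also pays the full inter-DC round trip on every write.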

The CassandraDBObjectStore is “partitionable”, meaning it can split stored entries into separate, named partitions. In this case we set the partition name dynamically, using a MEL expression that evaluates to the current date. (The implementation currently creates a ColumnFamily for each partition.)
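The effect of a date-based partition name can be sketched with one dictionary per partition, standing in for one ColumnFamily per partition. The naming scheme `p` plus `YYYYMMDD` is a hypothetical choice (the post does not show the MEL expression); it sticks to letters and digits on the assumption that partition names end up as column family names. `PartitionedStore` and its methods are illustrative only.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List


def partition_for(day: date) -> str:
    # Hypothetical naming scheme: one partition per calendar day, e.g. "p20130514".
    return "p" + day.strftime("%Y%m%d")


class PartitionedStore:
    """Toy partitionable store: each partition name maps to its own key/value dict."""

    def __init__(self) -> None:
        self._partitions: Dict[str, Dict[str, bytes]] = defaultdict(dict)

    def contains(self, key: str, partition: str) -> bool:
        # .get() avoids creating an empty partition just by looking it up.
        return key in self._partitions.get(partition, {})

    def store(self, key: str, value: bytes, partition: str) -> None:
        self._partitions[partition][key] = value

    def drop(self, partition: str) -> None:
        # Discarding a whole day's entries is a single operation on its partition.
        self._partitions.pop(partition, None)

    def partitions(self) -> List[str]:
        return sorted(self._partitions)
```

One consequence of partitioning by day is that the same key is only seen as a duplicate within that day's partition; whether that is acceptable depends on how long idempotency has to hold for your messages.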

The flow accepts HTTP requests on the configured addresses and uses the message’s payload as the idempotency key. If the payload isn’t already stored in the ColumnFamily corresponding to the current partition, the message is passed to the VM queue for further processing; otherwise it’s blocked. The ALL consistency level ensures this state is synchronized across the Mule clusters in both data centers.
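The filtering decision itself is small. In this sketch a plain dict stands in for the shared Cassandra-backed object store and a list stands in for the VM queue; `IdempotentPayloadFilter` is a hypothetical name, not a Mule class. Two filter instances, one per data center, share the same store.

```python
from typing import Dict, List


class IdempotentPayloadFilter:
    """Sketch of the flow's logic: the payload is the idempotency key."""

    def __init__(self, shared_store: Dict[str, bytes], vm_queue: List[bytes]) -> None:
        self._seen = shared_store  # stands in for the replicated object store
        self._queue = vm_queue     # stands in for the VM queue

    def on_message(self, payload: bytes) -> bool:
        key = payload.decode("utf-8")
        if key in self._seen:
            return False           # duplicate: the message is blocked
        self._seen[key] = payload  # record the key, then forward the message
        self._queue.append(payload)
        return True


shared: Dict[str, bytes] = {}
dc1_queue: List[bytes] = []
dc2_queue: List[bytes] = []
dc1 = IdempotentPayloadFilter(shared, dc1_queue)
dc2 = IdempotentPayloadFilter(shared, dc2_queue)
dc1.on_message(b"order-42")  # accepted in the first data center
dc2.on_message(b"order-42")  # blocked in the second: the key is already stored
```

This sketch is single-threaded, so its check-then-store sequence never interleaves; it only shows why a store visible to both clusters is what lets the second data center recognize the duplicate.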

Wrapping Up

Cassandra’s support for storing native byte arrays and its multi-data center aware design make it a good choice as an object-store implementation. Its support for multiple consistency levels is also very useful, allowing you to relax the synchronization requirements depending on your use case. You could, for instance, set the consistencyLevel of the object-store to “LOCAL_QUORUM” so that a write only has to be acknowledged by a quorum of replicas in the local data center, with replication to the other DC happening later, trading stronger cross-DC guarantees for increased performance.
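The trade-off shows up most clearly when the remote data center goes offline. The sketch below checks whether a write at ALL or LOCAL_QUORUM can still be acknowledged given how many replicas are reachable per data center; the DC names and the three-replicas-per-DC layout are assumptions for illustration.

```python
from typing import Dict


def write_succeeds(level: str, live_by_dc: Dict[str, int],
                   rf_by_dc: Dict[str, int], local_dc: str) -> bool:
    """Can a write at `level` be acknowledged with the replicas currently reachable?"""
    if level == "ALL":
        # Every replica in every data center must answer.
        return all(live_by_dc.get(dc, 0) >= rf for dc, rf in rf_by_dc.items())
    if level == "LOCAL_QUORUM":
        # Only a majority of the local DC must answer; remote replicas are still
        # sent the write, but the client does not wait for them.
        return live_by_dc.get(local_dc, 0) >= rf_by_dc[local_dc] // 2 + 1
    raise ValueError(f"consistency level not covered by this sketch: {level}")


rf = {"DC1": 3, "DC2": 3}          # assumed replication per data center
remote_down = {"DC1": 3, "DC2": 0}  # DC2 unreachable from DC1
print(write_succeeds("ALL", remote_down, rf, "DC1"))
print(write_succeeds("LOCAL_QUORUM", remote_down, rf, "DC1"))
```

With DC2 unreachable, ALL rejects the write while LOCAL_QUORUM accepts it. The flip side is that, until replication catches up, the other data center may not yet see the stored key, so the cross-DC idempotency guarantee is weaker.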

Other good candidates for the Cassandra Module’s object-store are the until-successful and request-response message processors, both of which require shared state.