Logging is useful for monitoring and troubleshooting your Mule applications and the Mule server — whether that’s recording errors raised by the application or specific details, such as status notifications, custom metadata, and payloads.

In this blog, I will introduce a few options for extending the out-of-the-box logging functionality, for example to match your company’s log file format standards or compliance rules. I will show you which files to configure and the implications of any changes.

In our ‘Anypoint Platform Development: Fundamentals’ course, we introduce you to the default log4j2.xml configuration file. The file gets a cursory mention as we peruse the topology of a default Mule 4 project. In the classroom, we mention that the default logging functionality can be altered by modifying this file, and that is pretty much it!

So let’s explore the logging capability, examine some of the values that can be configured, and consider the implications of such changes.

Why configure the log4j2 file?

Occasionally, when running the Development Fundamentals course, students ask:

“How do we modify the properties of the output log files?”

“Our internal logging system recognizes different field names. Can we change how the logged priority levels are output?”

“How do we change the output data model of the log generator? It needs to be consumed by a third-party log management solution.”

So we now have a few reasons why you might want to configure the logging. We will look at how to do it shortly.

What is configured out-of-the-box?

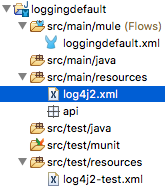

A default Mule 4 project contains a file called log4j2.xml in the src/main/resources folder (see figure 1 below). The name and location of this file are significant, as Mule 4 projects have a Maven-based foundation.

For those who haven’t worked with Maven, it is a build automation tool for Java projects. It uses conventions to standardize the way software projects are built and manages the project’s dependencies. Therefore, the src/main/resources folder structure and naming convention are determined by Maven’s Standard Directory Layout. These standards dictate much of the structuring of a Mule 4 project, beyond just the location of the log configuration file.

The log4j2.xml file is also based on a standard that is not Mule-specific. It configures Log4j 2, a Java logging framework provided by the Apache Logging Services project, which is the preferred logging framework. This is largely because Mule applications are built on the Mule runtime, which is developed in the Java programming language.

Note: In the src/test/resources folder, there is a file called log4j2-test.xml. This file can be modified in the same way, but I won’t be addressing this as it is only referenced when running MUnit tests. However, all that you learn in this blog is also applicable to its configuration.

Figure 1: The location of the highlighted log4j2.xml file that we will inspect and modify in this blog.

The default log4j2.xml file

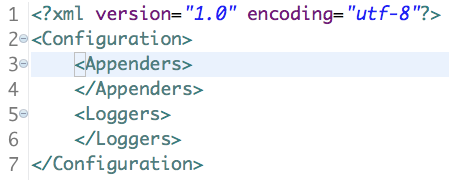

Rather than displaying the default contents of the complete log4j2.xml file here, let’s split it into its two sections: Appenders and Loggers.

Loggers

Let’s first look at the configuration of the Loggers element.

Loggers can be configured to determine which packages are logged, and to what depth. A package is a grouped set of classes and interfaces, represented by a namespace.

Collectively, packages are the foundation of the logic for your Mule applications. For example, you might choose to record everything from the DEBUG level upwards for a specific package, while the default configuration for all other packages might be to record only events from the ERROR level upwards.

The levels are defined in terms of the severity of the loggable event and range from FATAL (most severe) to TRACE (least severe), with OFF and ALL available as special thresholds that log nothing and everything, respectively. The amount of log data output grows as the configured threshold becomes less severe: the FATAL level outputs the least data, while ALL logs the most. Visit the Apache documentation for the Level class for a more thorough understanding of the hierarchy of events that can be logged.
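As a rough sketch of the per-package thresholds described above, a Loggers section might look like the following. The package name com.example.orders is hypothetical, and the ref value assumes that an appender named “file” is defined elsewhere in the same configuration. This is an illustration, not the default Mule configuration.

```xml
<Loggers>
    <!-- Hypothetical application package: record DEBUG events and above -->
    <AsyncLogger name="com.example.orders" level="DEBUG"/>

    <!-- Every other package: record only ERROR events and above -->
    <AsyncRoot level="ERROR">
        <!-- Assumes an appender named "file" exists in the Appenders section -->
        <AppenderRef ref="file"/>
    </AsyncRoot>
</Loggers>
```

A logger with a name applies to that package and everything beneath it, while the root logger catches whatever no named logger claims.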

Meanwhile, back in a Mule 4 project’s default log4j2.xml file configuration, one of the main considerations for the Loggers section is to choose whether to log your events synchronously or asynchronously. It’s recommended to select asynchronous logging in production to improve the application’s performance, i.e. low latency.

In the above code snippet, notice the AppenderRef element and its ref value “file”. This references the value of the appender’s name attribute, and thus links an appender configuration (discussed next) to the logger configuration, i.e. it tells the logger where to send its output and how to format it.

See the official Apache documentation for more information.
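To illustrate the synchronous alternative, the sketch below uses Log4j 2’s Logger and Root elements, which are the synchronous counterparts of AsyncLogger and AsyncRoot. The package name is hypothetical, and the ref value assumes an appender whose name attribute is “file”.

```xml
<Loggers>
    <!-- Synchronous logger: the calling thread waits while the event is written -->
    <Logger name="com.example.orders" level="INFO"/>

    <!-- Synchronous root logger; swapping Root for AsyncRoot (and Logger for
         AsyncLogger) hands events off to a background thread instead -->
    <Root level="INFO">
        <!-- ref must equal the name attribute of an appender -->
        <AppenderRef ref="file"/>
    </Root>
</Loggers>
```

Synchronous logging can be handy while debugging, because events are written before the calling thread continues. The asynchronous elements trade that guarantee for lower latency.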

Appenders

Appenders describe how to deliver logging events to a destination. Think about where you typically see log events output from your Mule applications: the Console view in Anypoint Studio and the log files of the application itself. Both of these are examples of appenders at work.

Note: The appender that generates the log output for Anypoint Studio’s Console view is specified in a log4j2.xml file located in the application’s conf folder. I will not be modifying that log’s configuration in this blog.

Let’s peruse the default configuration of the Appenders section:

As you can see, there is only one pre-configured child element in the Appenders element: RollingFile.

The RollingFile appender ensures that all log events are routed to a specified file, but there are alternative options, such as writing events to a relational database, publishing messages to Kafka topics, or sending them over SMTP. Read about the numerous alternatives to RollingFile in the Apache Log4j documentation on appenders.
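As one illustration of an alternative destination, here is a minimal sketch that uses Log4j 2’s Console appender instead of RollingFile. The appender name “console” and the simplified pattern are assumptions made for this example.

```xml
<Configuration>
    <Appenders>
        <!-- Writes log events to standard output rather than to a file -->
        <Console name="console" target="SYSTEM_OUT">
            <PatternLayout pattern="%-5p %d [%t] %c: %m%n"/>
        </Console>
    </Appenders>
    <Loggers>
        <AsyncRoot level="INFO">
            <!-- Routes events to the appender named "console" above -->
            <AppenderRef ref="console"/>
        </AsyncRoot>
    </Loggers>
</Configuration>
```

A logger can list several AppenderRef elements, so a destination like this can sit alongside the rolling file rather than replace it.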

Some interesting attributes and child elements featured in the RollingFile node are:

- fileName: the location and the name of the log file to create and write to. The default location of the log file is: MULE_HOME/logs/<app-name>.log, which you may want to modify for on-premise solutions.

- filePattern: the name of the archived log file when the log file gets rolled — generated with a dynamic incremental value in the default configuration.

- PatternLayout (pattern attribute): this dictates the format and presentation of the rolling log file. It is a combination of conversion specifiers (prefixed with ‘%’) and hard-coded text. There is a great deal of scope for customisation here, with alternative values and additional conversion specifiers (refer to the official Apache documentation on the PatternLayout for further details), but the default configuration for PatternLayout’s pattern attribute is as follows:

pattern="%-5p %d [%t] [event: %X{correlationId}] %c: %m%n"

So what do these default conversion specifiers denote? Let’s inspect:

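%-5p – the priority level of the logging event, left-justified and padded to a width of five characters.

%d – the date and time of the logging event, in the default format.

%t – the name of the thread that generated the logging event.

%X{correlationId} – the value stored under the correlationId key in the thread context map of the thread that generated the logging event, i.e. the identifier of the message that was processed.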

%c – the name of the logger that published the logging event.

%m – the application supplied message associated with the logging event.

%n – line separator.

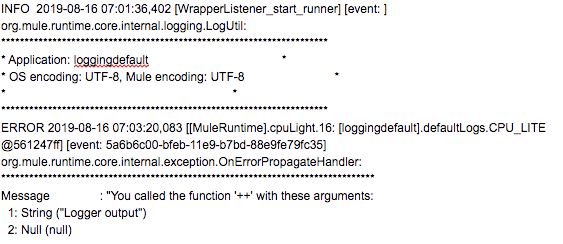

Here is how the default configuration for PatternLayout’s pattern attribute (shown above) renders the log file:

We will modify a few of the pattern attribute properties shortly, but the remaining default child elements in the RollingFile appender are:

- SizeBasedTriggeringPolicy: specifies the maximum size of the log file before rolling the file.

- DefaultRolloverStrategy: specifies configuration details for your archived log files. The default max="10" indicates that up to 10 archive files are kept before the oldest archive file is deleted.
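Putting the attributes and child elements described above together, a RollingFile appender might be sketched as follows. The application name my-app and the 10 MB size are placeholders, and the ${sys:mule.home} system-property lookups are assumed to resolve to MULE_HOME. Treat this as an approximation rather than the exact file that Anypoint Studio generates.

```xml
<Appenders>
    <!-- "my-app" is a placeholder application name -->
    <RollingFile name="file"
                 fileName="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}my-app.log"
                 filePattern="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}my-app-%i.log">
        <!-- Format of each line written to the log file -->
        <PatternLayout pattern="%-5p %d [%t] [event: %X{correlationId}] %c: %m%n"/>
        <!-- Roll the file once it reaches this size (value assumed here) -->
        <SizeBasedTriggeringPolicy size="10 MB"/>
        <!-- Keep up to 10 archived files before the oldest is deleted -->
        <DefaultRolloverStrategy max="10"/>
    </RollingFile>
</Appenders>
```

The %i in filePattern is the incrementing counter that distinguishes one archived file from the next.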

Modify the default log rendering

Now let’s address the question of how to change the way logged priority levels are output, and modify the default log rendering. One way to do this is to change the values of the existing conversion specifiers. Let’s change the interpretation of the priority level and date, as follows:

pattern="%level{WARN=W, DEBUG=D, ERROR=E, TRACE=T, INFO=I} %d{dd MMM yyyy HH:mm:ss,SSS} [%t] [event: %X{correlationId}] %c: %m%n"

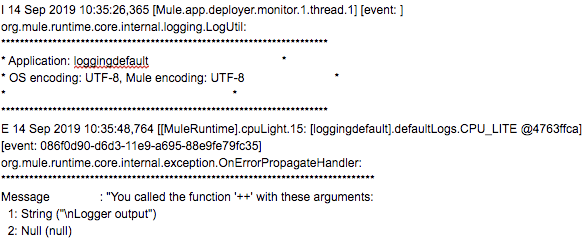

Here is how the modified pattern attribute now renders the same Mule event in the log file:

Note the abbreviated priority level, e.g. ‘I’ for INFO and ‘E’ for ERROR, and the modified date format.

Output log events as JSON

Let’s now address the question from earlier:

“How do we change the output data model of the log generator? It needs to be consumed by a third-party log management solution.”

This is particularly relevant for enterprise solutions that consist of multiple applications, numerous data repositories, and different operating systems. Such organizations might want to consolidate their logging data in one common logging platform.

Remember that Appenders are responsible for delivering log data to a destination, e.g. the console or a relational database. So let’s keep the RollingFile appender but replace the PatternLayout element with the JSONLayout element, thus:

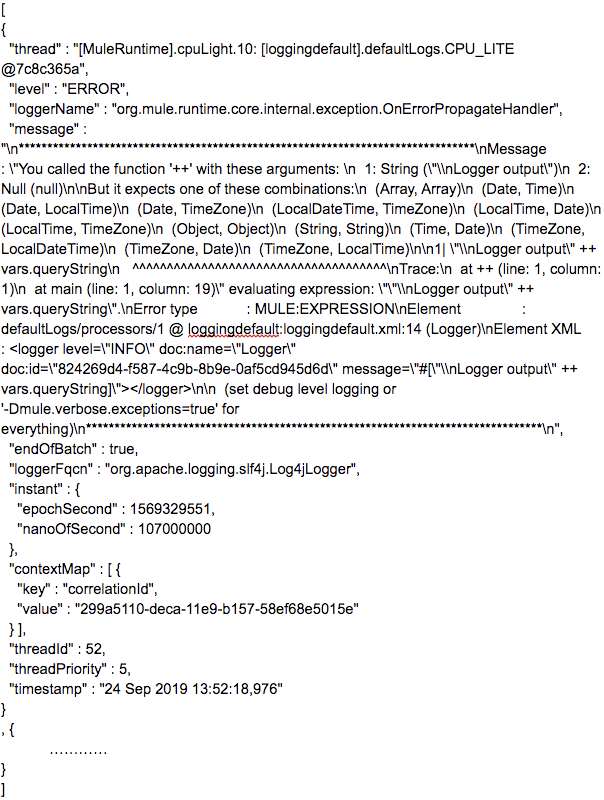

Here is an example of the output that the JSONLayout configuration generates based on the same error message displayed in the previous PatternLayout examples:

Note that almost all of the values produced by the conversion specifiers in a standard Mule project’s PatternLayout pattern are generated by default; the only ones missing are the timestamp and the correlationId. My customizations above therefore include specifying values for some of the JSONLayout’s attributes and adding fields to the JSON output via a list of KeyValuePair elements.

The attribute values ensure that valid JSON is generated (a comma-separated array) and that the contextMap containing the correlationId is output as an array. With the additional fields, I generate the timestamp with a date lookup. For good measure, I’ve added another field that uses the context map lookup to retrieve the correlationId, as an alternative to providing it in the contextMap’s nested array.
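The customizations described above could be sketched roughly as follows. The attribute values, key names, date format, and the my-app file name are my assumptions for illustration rather than a reproduction of the configuration shown in the image, and the layout relies on the Jackson libraries being available on the classpath.

```xml
<RollingFile name="file"
             fileName="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}my-app.log"
             filePattern="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}my-app-%i.log">
    <!-- complete="true" wraps the events in a comma-separated JSON array;
         properties="true" includes the context map, and
         propertiesAsList="true" renders it as a list of key/value entries -->
    <JSONLayout complete="true" compact="false" properties="true" propertiesAsList="true">
        <!-- Timestamp from the date lookup; $$ defers evaluation to each event -->
        <KeyValuePair key="timestamp" value="$${date:yyyy-MM-dd'T'HH:mm:ss.SSSZ}"/>
        <!-- correlationId read from the thread context map via the ctx lookup -->
        <KeyValuePair key="correlationId" value="$${ctx:correlationId}"/>
    </JSONLayout>
    <SizeBasedTriggeringPolicy size="10 MB"/>
    <DefaultRolloverStrategy max="10"/>
</RollingFile>
```

If the consuming log platform expects one JSON object per line rather than a single array, compact="true" with eventEol="true" is the usual alternative to complete="true".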

Clearly there is a lot of scope here for logging customised JSON objects. This brings added benefits if you require external logging services to consume, analyze, and process your log data.

Conclusion

I hope that this blog helps you understand how to modify the properties in the default log4j2.xml file to render a different output format in the log file, that the log file is the default output location but many alternative log destinations exist, and finally that the default data model of the output can also be customized.

As you can see, there are numerous options and possibilities when it comes to logging your Mule 4 application data. For more information, check out our Configuring Logging document!