In part one of this blog series, we covered the robots driven to disrupt your business and online operations (DoS and DDoS attackers). In this sequel, we’ll step further into the robot universe and discuss often ignored scraper robots, along with mitigation strategies for dealing with the data leakage from these attacks that threatens your enterprise.

Bad actor # 3: Scraper attacks: An opportunity for value via alignment

Scraper attacks are a more intricate puzzle than a DoS or DDoS attack. Scrapers look almost identical, but require a more coordinated mitigation plan to navigate the impact. They are a challenging adversary — and not one to treat lightly. The first step is to identify scraper attacks and understand their underlying motives.

The difference between a DDoS attack and a scraper attack is that the scraper will not repeat the same request repeatedly in rapid succession. Instead, the scraper will make a single incremental change in each request. This could be incrementing a page number in a data set, incrementing a zip code to change to a different set of geographic data, changing a product ID to get a different set of product information, etc. The goal is to get your API to return “the next set of data” as it slowly fetches and makes its own copy of your data.

DoS, DDoS, and scraper attacks are not limited to APIs. All three types of robotic attacks target online applications of all kinds. Some examples include web sites and web applications, stand alone APIs, APIs that act as backends to web sites and applications, and APIs that act as back-ends to mobile applications. Scrapers are attracted to online data regardless of the delivery vehicle. Scrapers tend to attack APIs because the bot can easily sort through the responses to find what it’s looking for.

While every scraper is motivated to acquire your data, there are several types of scraper attack patterns that require individualized responses:

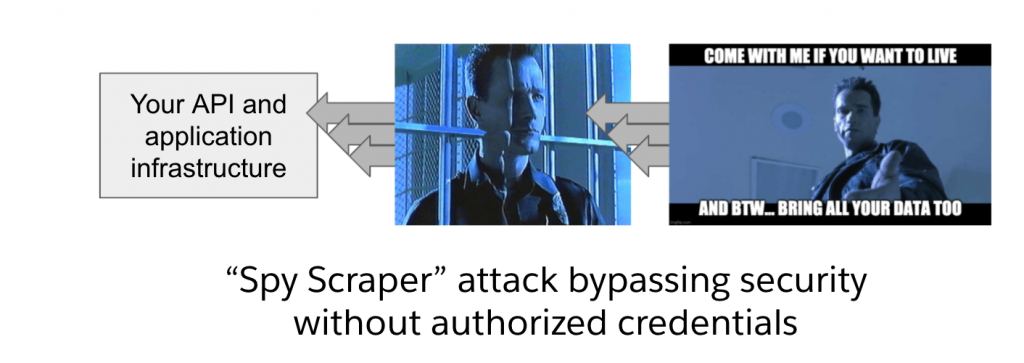

The spy attack:

When a scraper targets private user data (e.g. the Peloton breach) or semi-public user activity data (e.g. the Parler attack) the motive of the scraper is to collect information about your users.

If an API serves private data about a specific user, their buying history, or even their posts on your site, that is considered private data in the context of an API. While posts within a site are visible to other users, creating a copy of these same posts is a different story. This is considered private by the API standards because accessing the dataset should require user authentication prior to requesting that data. This segmentation is critical because the public set of data is core to your competitive context (i.e. the reason people engage with you online) and the other is private information that renders you liable if it is mishandled.

While you can’t stop these types of scrapers from mounting attacks, you can stop them from succeeding. Instituting a layered defense that includes modern API management practices along with a set of fail safes ensure all API requests for private data are only served to fully authenticated and authorized users. Ensure your development team implements an authorization verification mechanism at each layer of software to account for the circumstances where security measures for certain layers may have been compromised, skipped altogether, or failed without rejecting the request.

To drive this point home, share the Parler breach with your teams and show them how the first layer of authentication failed and the rest of the application infrastructure kept serving requested data about each user.

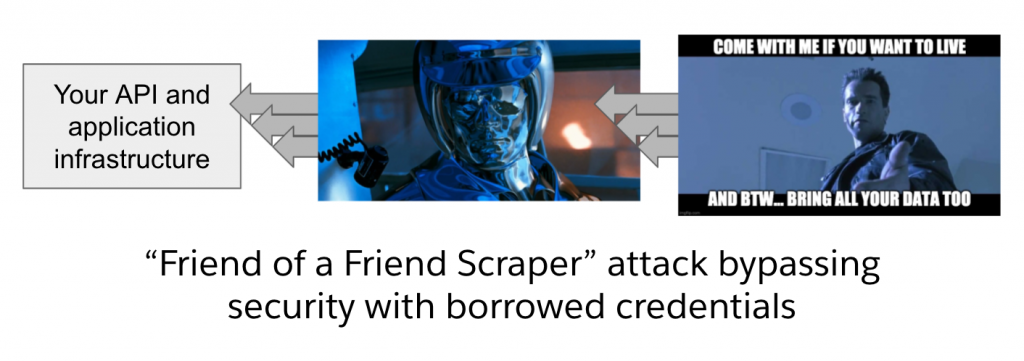

The “friend of a friend” scrape:

Not every scraper that targets private user data obtains the data by evading authentication. Some scrapers get access to data through social mechanisms. Just like how some Netflix customers share their passwords with close friends and family — your customers and partners often share their credentials with vendors and partners to use a functionality that your API or web experience lacks. Once a third-party partner has the credentials and a vision for what they can offer, they market this offering to other customers of yours and begin the process of disruption and disintermediation with your established customer base.

There are three ways to handle this type of scraper:

- Up your API product game and start to launch API products that meet your customer needs.

- Offer “brokered authentication” models to allow customers to grant usage permissions without sharing credentials (e.g. like how streaming services do with media devices, or like akoya does with banking sites).

- Try the courts, but your likelihood of success is highly dubious (just ask CDK and other Dealership Management System vendors in the U.S. automotive ecosystem space).

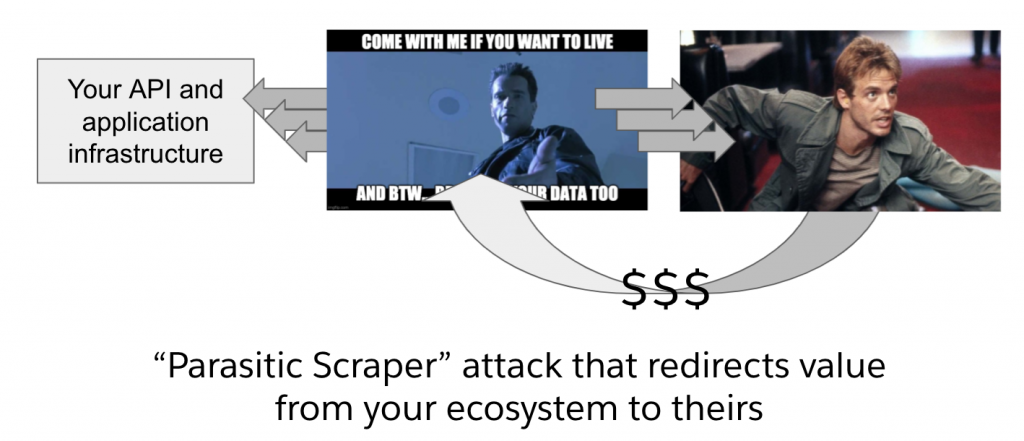

The parasitic attack:

When serving public data via API, many organizations (often unknowingly) use open APIs with anonymous access in a BFF pattern. These types of APIs are easy targets to a parasitic scraper because they do not require any form of authentication to call the API.

Parasitic scrapers operate in the world of data economics. They want your data and they’ll stop at nothing to get it. Attempting to block these types of scrapers is futile from an economic perspective because the value of the data acts as a beacon and your countermeasures only make the beacon brighter. Given that a successful countermeasure preserves the scarcity of your data, this scarcity increases its perceived value, making it more attractive to the attackers (who know how to adapt like their DoS and DDoS siblings).

One preventative measure for scrapes via a BFF API can be made at the architectural level. Rather than executing API calls at the edge via anonymous calls from users’ browsers, API calls can be executed server-side before experiences are sent out. This structure forgoes the distribution of API endpoints and credentials to browsers and allows for enterprises to restrict API calls to internal users via allow lists. Once this API attack surface is cleared of surreptitious activity, you can channel the data leeches to a governable API-specific channel to allow for a strategic response that will preserve your relevance and revenue.

Once an enterprise realizes that it can’t block the requests of scrapers (for the same reasons they can’t block the requests from DDoS), some organizations shift the front of the battle to the courts. Enterprises will reference copyright law and the “terms and conditions” published in their web offerings to attempt to get scrapers to “cease and desist” their data acquisition efforts. Organizations will even seed their real data with fake “watermark” data to track and prove “unsanctioned” use. Despite what many people believe, this has not been a successful tactic as multiple courts have found that scraping public data is not illegal. Some cases resulted in the courts ordering the target to not make attempts to block the scraper (e.g. LinkedIn vs HiQ, the previously referenced example with CDK).

An extreme response to parasitic scraping is corporate acquisition. Surprisingly, this response is not as uncommon as you might think. When business disruption and unbundling are severe enough, acquiring the scraper and their offerings (if you can identify them) is a legitimate response pattern employed by digital enterprises. One of the earliest examples of this response pattern was implemented by Twitter when they acquired TweetDeck and shifted their API policies and design to align their API offerings to their business strategy.

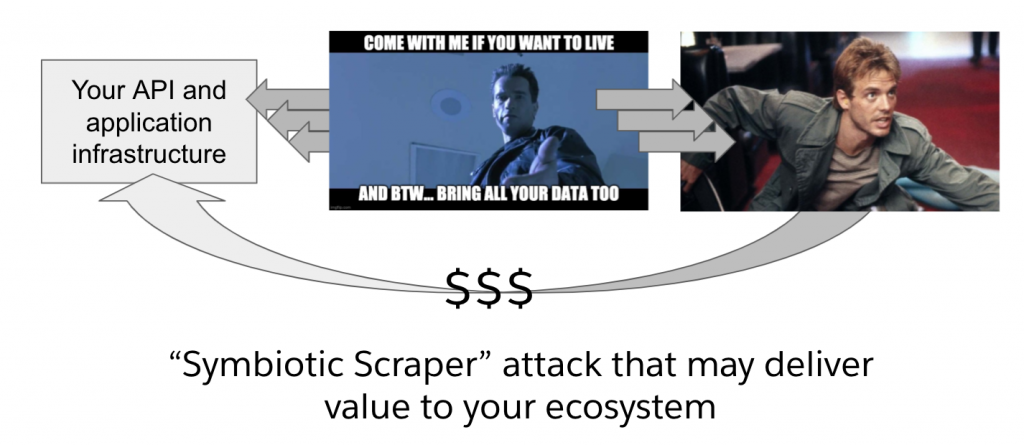

The symbiotic scrape:

Just when you think all robotic attackers are irredeemable ne’er do wells, along comes a strange variant that looks to form a symbiotic relationship with your offerings. The symbiotic relationship emerges when a scraper targets public data in an effort to leverage your data for an offering that (rather than competing with yours) enhances the value of your offerings in a form of affiliate marketing. For example, if Yelp! scrapes menu data off of a restaurant website, Yelp! isn’t competing with the restaurant. Yelp is creating a new channel to drive interested diners to the establishment. To determine if a scraper is symbiotic with your business, you’ll need to understand how your business model is monetized from a value-exchange perspective.

For example, if you operate a retail business and a scraper is collecting your product catalog and creating an aggregator that drives shoppers and other value-add partners to your offerings, that’s probably a good thing. BestBuy understood this years ago and reaped a windfall via a business-driven API strategy. To form a productive response to any scraper attack, you need to know how the scraped data will be used, how that will affect your revenue generation, and how you connect with customers and partners. With this in mind, you can develop an understanding of the attack and an approach to manage — and potentially monetize — the activity of the scraper.

Even in content marketing, media, and advertising contexts, different steps can be taken to align the interests of a scraper with the value stream of your organization. Just like the Twitter example above, Dan Jacobson’s groundbreaking API strategy work at NPR, and much more.

Knowing your robots is the key to writing your own future

The two factors to pay attention to here are:

- When a robotic attack happens, it’s often worthwhile to classify the attack context and drive the mitigation (or partnership) strategy based on what you learn. While nobody likes being attacked, what organizations like less than being attacked is leaving money on the table by needlessly blocking and punishing potential business partners and customers.

- In a new iteration of Wirth’s law, software becomes vulnerable faster than mitigations become available. It’s therefore critical to have an active posture (e.g., specialized penetration tests to identify actual gaps vs potential gaps) on monitoring the emergent threats (e.g., HTTP Smuggling) so that you can take action before you become the next breach headline story.

If data leakage and robotic attacks are something your organization has to manage, it is critical to have both the right tools to analyze the attack and its motive along with the right tools to mitigate it, manage it, or even potentially profit from it.