In my first blog, I explained what digital transformation is, why connectivity and integration are vital for a successful digital transformation, and how MuleSoft’s Anypoint Platform is an engine to power that digital transformation.

In this blog post, I’ll be using a common digital transformation initiative in the domain of digital customer experience (DCX) as an example: “Enhanced customer sales experience.”

Enhanced customer sales experience

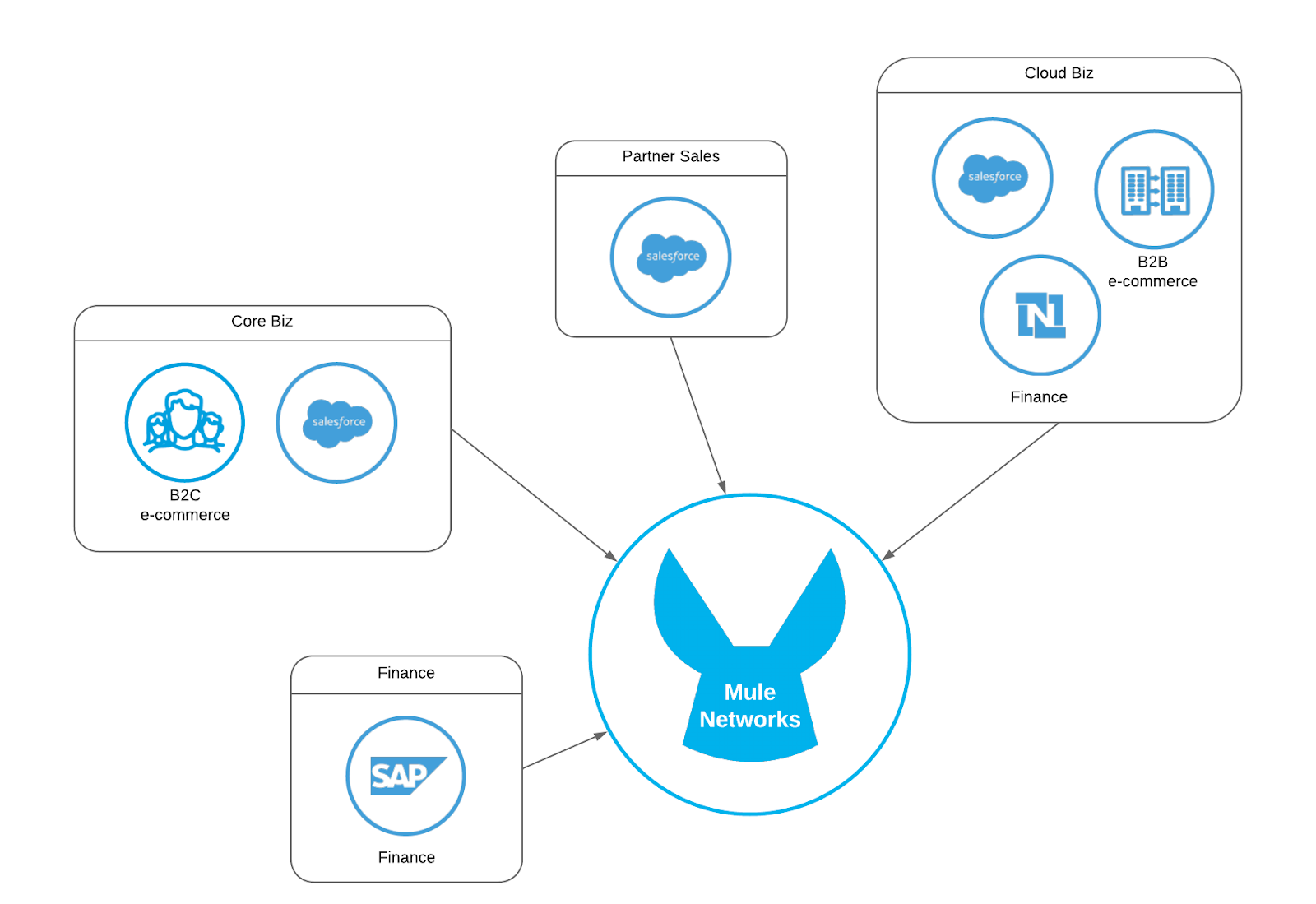

In this example, we’re using a fictional mid-sized networking company (Mule Networks) with years of M&A collateral. Mule Networks has the following application landscape for their sales cycle:

- Three Salesforce organizations (as a result of previous acquisitions).

- In-house and third-party finance systems (for different business groups).

- Two in-house eCommerce sites (for different business groups).

Below are Mule Networks’ current pain points:

- Lacks end-to-end visibility of the customer – Different business groups work in isolation, only using and sharing data within their own systems, creating missed opportunities.

- Inconsistent customer sales experience – The sales team has non-standardized processes, and has customer data in multiple siloed systems.

- High maintenance costs and operational inefficiency – Mule Networks relies on legacy systems and sales teams need to duplicate the data in multiple systems.

- Switching systems is becoming cost prohibitive – Mule Networks wastes resources by connecting systems via point-to-point integration.

- Manual data entry is error prone and inefficient – Employees spend time transferring data between systems because Mule Networks lacks process automation and data processing.

Mule Networks hires a new CIO, who tells the executive team the business needs a comprehensive digital transformation with the following expected outcomes and initiatives:

- Future-proof technology and system consolidation:

- Consolidate three Salesforce organizations into one.

- Cloud-first mindset for any new project.

- Legacy modernization:

- Move away from in-house development into enterprise-grade leading SaaS applications through phased migrations.

- Isolate and replace business logic embedded within monolithic systems using a phased approach.

- Enhance customer and omnichannel experience:

- Consolidate siloed customer data.

- Migrate in-house eCommerce to enterprise-grade leading SaaS application.

- Implement new eCommerce mobile app.

- Gain end-to-end customer visibility.

- Operational excellence and efficiency:

- Consolidate product SKU and pricing.

- Create end-to-end visibility and monitoring.

- Sales order process automation.

Connectivity at the center of every DX initiative

Some of these initiatives may sound familiar. What may not be so obvious is how each initiative needs integration. Below is an example of how each of these requirements maps to a particular connectivity pattern or capability, and how it should be supported by the DX engine:

Future-proof technology and consolidation:

- Consolidate three Salesforce organizations into one.

- Requirements:

- Provide single entry point to Salesforce: For better access control and security.

- Data Sync: When data needs to be synced between Salesforce and downstream systems, in either direction.

- Patterns:

- API-led: When data from Salesforce needs to be consumed on-demand it should be exposed as a standardized API by the DX engine (e.g. Customer Portal will need on-demand customer data coming from Salesforce).

- Data sync should be handled transparently by the DX engine (e.g. sales orders sync to downstream systems) by using one or a combination of the following patterns:

- One-way data sync

- Two-way data sync

- API-led

- Event sourcing

- CQRS

- Cloud-first mindset for any new project.

- Requirements:

- Connectivity platform needs to support new deployment models as well as existing legacy infrastructures.

- Capabilities:

- DX engine needs to support all deployment models:

- Cloud-native

- On-prem

- Hybrid

- Containers

- Service mesh

Legacy modernization:

- Move away from in-house development to enterprise-grade leading SaaS applications through phased migrations.

- Requirements:

- Given the large legacy footprint, a full-blown migration might not be cost-effective in the time frame required, so systems need to coexist and data needs to sync between them.

- Patterns:

- Data Sync: Data needs to be synced transparently by the DX engine (e.g. sales orders sync to downstream systems).

- API-enable legacy systems: When legacy systems don’t offer APIs (for data consumption or data generation) the DX engine should provide such capabilities to be embedded with minimum effort by the legacy system.

- Isolate and replace business logic embedded within monolithic systems using a phased approach.

- Requirements:

- Prevent monolithic systems from growing beyond their current capabilities.

- Patterns:

- API-led: Allow isolating and extracting functionality, so monolithic systems can be replaced with more modern systems.

Enhance customer and omnichannel experience:

- Consolidate siloed customer data.

- Requirements:

- Customer data consolidation will be achieved by defining systems of record and data aggregation strategies.

- Patterns:

- Data Sync: When data needs to be synced between systems, it should be handled transparently by the DX engine (e.g. Contact sync to downstream systems).

- API-led: The DX engine will provide standardized APIs with data aggregation capabilities to provide a single view of the customer (e.g. Customer 360 API).

- Migrate in-house eCommerce to enterprise-grade leading SaaS application.

- Requirements:

- Migrate to the new eCommerce application while minimizing the impact on the end-user (customer) experience. This will need data migration and proper access to existing source systems.

- Patterns:

- API-led: The DX engine will provide standardized APIs with data aggregation and system access capabilities to provide the necessary data to the eCommerce sites (e.g. Orders API).

- “Light ETL”: As with any migration, existing data will need to be extracted, transformed, and loaded into the new systems. The DX engine should handle scenarios with “light” volume data sets without the need for heavy appliances or learning new tools (e.g. migrating user accounts).

- Implement new eCommerce mobile app.

- Requirements:

- New eCommerce mobile app will need on-demand access to existing data sources.

- Patterns:

- API-led: The DX engine will provide standardized APIs with data aggregation and system access capabilities to provide the necessary data to the eCommerce sites (e.g. Orders API).

- Event-driven: Using events for near real-time data sync as opposed to overnight batch processing.

- End-to-end customer visibility.

- Requirements:

- We need a 360-degree view of customers, wherever they are in their journey.

- Patterns:

- API-led: The DX engine will provide standardized APIs with data aggregation capabilities to provide a single view of the customer (e.g. Customer 360 API).

Operational excellence and efficiency:

- Consolidate product SKU and pricing.

- Requirements:

- Defining systems of record for product and pricing will require the data to be synced back to downstream systems.

- Patterns:

- Data Sync: When data needs to be synced between systems, it should be handled transparently by the DX engine (e.g. Product sync to downstream systems).

- End-to-end visibility and monitoring.

- Requirements:

- Business and operations need full visibility across the entire stack.

- Capabilities:

- DX engine needs to provide a single pane of glass for end-to-end monitoring across all deployment models:

- Cloud-native

- On-prem

- Hybrid

- Containers

- Service mesh

- System-to-system process automation.

- Requirements:

- Different processes will be triggered by different means. They will need to hook into these different mechanisms for automation.

- Capabilities:

- DX engine needs to support different trigger mechanisms for proper business automation such as:

- Events

- Schedulers

- Polling

- APIs

By now, it should be clear that connectivity is an intrinsic part of everything Mule Networks needs to be successful. Next, we’ll summarize the most important patterns, capabilities, and strategies we see in every DX initiative and demonstrate how the Anypoint Platform is uniquely positioned to handle every need.

Engine capabilities

Let’s start with the set of “must have” capabilities for any DX engine to fully support your digital transformation initiatives and how the Anypoint Platform aligns with these capabilities.

Multi deployment support (Mule Runtime):

A DX engine needs to support your existing IT landscape and adapt with it. Therefore at minimum it should support:

- Cloud-native

- On-prem

- Hybrid

- Containers

- Service mesh

More importantly, the application code should be portable to any deployment model so that you can truly “build once, deploy anywhere.”

Complex data transformation (DataWeave):

To support transforming data into every system format and structure, at different levels of complexity, we need a level of abstraction that simplifies this kind of work. The Anypoint Platform provides a transformation language designed from the ground up to support such scenarios.

Process trigger capabilities (Mule Runtime + Connectors):

The work of a DX engine should be as seamless as possible. Therefore, it should offer different trigger capabilities that allow data to be moved regardless of how the data is exposed. For example:

- Messaging

- Polling

- Notifications

- Events

Single pane of glass (Anypoint Platform):

This capability gives the operations team full control and visibility of the DX engine, without compromise, through a single access point for managing everything on the platform.

Connectivity patterns

The following are a few of the most relevant patterns in the context of digital transformation. They not only leverage the capabilities we just explored but also MuleSoft IP gathered throughout years of integration experience.

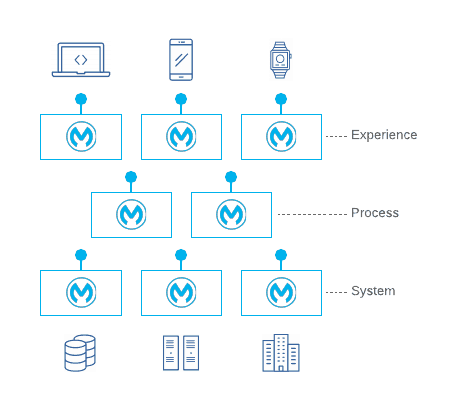

API-led

API-led connectivity is an integration approach for connecting and exposing assets through purpose-specific APIs categorized into three different layers: System, Process and Experience.

Benefits:

- Standardized data access

- Maximize reuse

- Bi-modal IT with agility

- Transition to cloud

- Efficient scalability

- Future-proof
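To make the three layers concrete, here is a minimal Python sketch of how a “Customer 360” view could be layered. It is only an illustration under stated assumptions: the function names, the in-memory dictionaries standing in for the CRM and finance systems, and the field names are all hypothetical, and a real implementation would expose each layer as its own API on the platform.

```python
# Hypothetical in-memory stand-ins for two backend systems.
CRM_CONTACTS = {"C-1": {"name": "Ada Lopez", "email": "ada@example.com"}}
FINANCE_ORDERS = {
    "C-1": [
        {"order_id": "SO-100", "total": 250.0},
        {"order_id": "SO-101", "total": 75.5},
    ]
}


# System layer: one function per backend, hiding how each system is accessed.
def crm_system_api(customer_id: str) -> dict:
    return CRM_CONTACTS[customer_id]


def finance_system_api(customer_id: str) -> list:
    return FINANCE_ORDERS.get(customer_id, [])


# Process layer: combines system APIs into a reusable business view.
def customer_360_process_api(customer_id: str) -> dict:
    contact = crm_system_api(customer_id)
    orders = finance_system_api(customer_id)
    return {
        "id": customer_id,
        "name": contact["name"],
        "email": contact["email"],
        "orders": orders,
        "lifetime_value": sum(order["total"] for order in orders),
    }


# Experience layer: shapes the process data for one consumer (a mobile app here).
def mobile_experience_api(customer_id: str) -> dict:
    view = customer_360_process_api(customer_id)
    return {
        "displayName": view["name"],
        "orderCount": len(view["orders"]),
        "lifetimeValue": view["lifetime_value"],
    }


print(mobile_experience_api("C-1"))
```

The point of the split is reuse: a second consumer, such as a customer portal, could add its own experience function on top of the same process function without touching the system layer.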

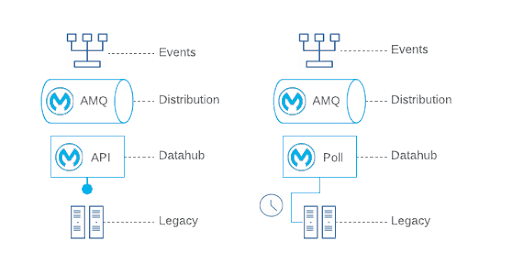

Event-enable legacy

Legacy and in-house applications are usually not built with eventing capabilities that would allow for easier data distribution to interested systems. This pattern makes it possible to “event-enable” such systems by either:

- Exposing an API to be called by the system (intrusive)

- Polling system database or file system (non-intrusive)

Benefits:

- Enable event-driven architectures for distributing data across multiple systems.

- Bite-sized “legacy modernization” by allowing legacy and in-house applications to be part of modern evented architectures.
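The non-intrusive option can be sketched as a poller that remembers a watermark and turns new rows into events. This is a rough Python illustration, not a platform feature: the `sales_orders` table, its columns, and the event shape are assumptions, and an in-memory SQLite database stands in for the legacy system’s database.

```python
import sqlite3


def poll_new_orders(conn, last_seen_id):
    """Return (events, new_watermark) for rows inserted after last_seen_id."""
    rows = conn.execute(
        "SELECT id, customer, total FROM sales_orders WHERE id > ? ORDER BY id",
        (last_seen_id,),
    ).fetchall()
    events = [
        {"type": "sales_order.created", "id": row[0], "customer": row[1], "total": row[2]}
        for row in rows
    ]
    # Advance the watermark only when something new was found.
    new_watermark = rows[-1][0] if rows else last_seen_id
    return events, new_watermark


# Hypothetical legacy table, created in memory for this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO sales_orders (customer, total) VALUES (?, ?)",
    [("Acme", 100.0), ("Globex", 40.0)],
)

events, watermark = poll_new_orders(conn, last_seen_id=0)
for event in events:
    print(event)
```

Calling `poll_new_orders` again with the returned watermark yields no events until a new row appears. An ID-based watermark like this only detects inserts; catching updates would need something like a last-modified column, which this sketch does not assume the legacy system has.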

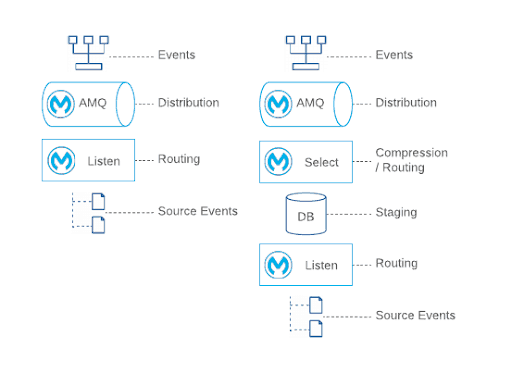

Event data hub

Assuming event-capable source systems, the following are common variations and challenges we see:

- Structured events with adequate frequency → Routing only

- Structured events with high frequency → Stage + Compress + Routing

- CRUD events with high frequency → Stage + Compress + Enrich + Routing

Event data hub is a pattern for building a message distribution framework that adjusts to the needs of the source event system and standardizes event distribution.

Benefits:

- Extensible support for evented systems.

- Standardized message distribution.
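As a rough illustration of the “Stage + Compress + Enrich + Routing” variation, the Python sketch below processes a staged list of CRUD events. It rests on assumptions: “compress” is interpreted here as collapsing repeated events for the same record into the latest one, and the event fields, the `lookup` callback, and the subscription table are hypothetical.

```python
def compress(staged_events):
    """Keep only the latest staged event per (entity, id)."""
    latest = {}
    for event in staged_events:
        latest[(event["entity"], event["id"])] = event
    return list(latest.values())


def enrich(event, lookup):
    """CRUD events often carry only an id, so attach the full record."""
    return {**event, "data": lookup(event["entity"], event["id"])}


def route(event, subscriptions):
    """Return the targets subscribed to this entity type."""
    return subscriptions.get(event["entity"], [])


def run_hub(staged_events, lookup, subscriptions):
    """Compress, enrich, and route staged events; return (target, event) pairs."""
    deliveries = []
    for event in compress(staged_events):
        enriched = enrich(event, lookup)
        for target in route(enriched, subscriptions):
            deliveries.append((target, enriched))
    return deliveries


# Hypothetical staged events: the same contact was updated twice.
staged = [
    {"entity": "contact", "id": "1", "op": "update"},
    {"entity": "order", "id": "9", "op": "create"},
    {"entity": "contact", "id": "1", "op": "update"},
]
records = {
    ("contact", "1"): {"email": "ada@example.com"},
    ("order", "9"): {"total": 50},
}
subscriptions = {"contact": ["erp", "ecommerce"], "order": ["erp"]}

deliveries = run_hub(staged, lambda entity, key: records[(entity, key)], subscriptions)
for target, event in deliveries:
    print(target, event["entity"], event["data"])
```

For the simpler variations, the same `run_hub` shape applies with steps removed: structured events at a manageable frequency would skip staging, compression, and enrichment and go straight to `route`.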

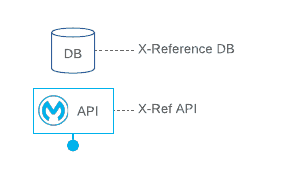

X-Reference API

A common pattern required for system-to-system data sync that enables:

- Cross-reference data sets (e.g. Data translation from system A to B) for dynamic mappings.

- Cross-reference ID generation for multi-source data objects.
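Both capabilities can be sketched with a small in-memory class. This is a minimal Python illustration under assumptions: the class name, method names, and the `XREF-` ID format are hypothetical, and a real cross-reference API would sit in front of a persistent store.

```python
import itertools


class CrossReference:
    """In-memory sketch of a cross-reference store."""

    def __init__(self):
        self._ids = {}      # (system, local_id) -> integration id
        self._values = {}   # (source, target, field, value) -> translated value
        self._counter = itertools.count(1)

    def link(self, system, local_id, integration_id=None):
        """Register a local id, generating a new integration id if none is given."""
        if integration_id is None:
            integration_id = f"XREF-{next(self._counter):06d}"
        self._ids[(system, local_id)] = integration_id
        return integration_id

    def lookup(self, from_system, local_id, to_system):
        """Find the id of the same record in another system, or None."""
        integration_id = self._ids.get((from_system, local_id))
        if integration_id is None:
            return None
        for (system, other_id), xid in self._ids.items():
            if system == to_system and xid == integration_id:
                return other_id
        return None

    def add_translation(self, source, target, field, value, translated):
        self._values[(source, target, field, value)] = translated

    def translate(self, source, target, field, value):
        """Translate a field value between systems; fall back to the original."""
        return self._values.get((source, target, field, value), value)


xref = CrossReference()
xid = xref.link("salesforce", "001A")
xref.link("erp", "CUST-77", xid)
xref.add_translation("salesforce", "erp", "country", "United States", "US")

print(xref.lookup("salesforce", "001A", "erp"))
print(xref.translate("salesforce", "erp", "country", "United States"))
```

Keeping the mappings behind one interface means sync flows ask “what is this record called in system B?” instead of hard-coding ID and value mappings in each integration.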

Light batch processing

Even though batch processing can be considered a niche appliance-oriented requirement, frequently the data volumes are trivial enough to fall into the scope of “Light Batch Processing” and as such, they should be handled by a DX engine.

Benefits:

- No need to learn additional tooling

- Lower complexity

- Same relatable integration concepts

- Leverage rich connector and data transformation capabilities
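For a sense of scale, a “light” batch job such as migrating user accounts can be as small as the Python sketch below. The record fields, the validation rule (skip rows without an email), and the `load` callback are assumptions made for the example.

```python
from itertools import islice


def chunks(iterable, size):
    """Yield lists of up to `size` items from any iterable."""
    iterator = iter(iterable)
    while batch := list(islice(iterator, size)):
        yield batch


def migrate_accounts(source_rows, load, batch_size=2):
    """Extract, transform, and load accounts in small batches.

    Returns (loaded_count, failed_count).
    """
    loaded = failed = 0
    for batch in chunks(source_rows, batch_size):
        transformed = []
        for row in batch:
            email = row.get("email", "").strip().lower()
            if not email:
                failed += 1  # rows without an email cannot become accounts
                continue
            transformed.append(
                {"username": email, "fullName": f'{row["first"]} {row["last"]}'}
            )
        if transformed:
            load(transformed)
            loaded += len(transformed)
    return loaded, failed


# Hypothetical source rows from the in-house eCommerce site.
rows = [
    {"first": "Ada", "last": "Lopez", "email": " Ada@Example.com "},
    {"first": "Bo", "last": "Chen", "email": ""},
    {"first": "Cy", "last": "Diaz", "email": "cy@example.com"},
]
target = []
loaded, failed = migrate_accounts(rows, target.extend)
print(loaded, failed)
```

Processing in batches keeps memory use flat and gives a natural unit for retries, which is usually all a data set of this size needs.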

Data sync strategies

At the heart of most DX initiatives is the need to move data to support a phased system migration or to make data distribution more scalable. These needs can be covered in many different ways (including point-to-point one-off integrations), but the following are two typical strategies used when those requirements arise. The main thing to keep in mind is to clearly define the notions of “System of Origin” and “System of Record.”

- System of record: Authoritative data source for a given data element (entity) or piece of information.

- System of origin: System that originates an entity record (where it gets created). Ideally, this should be the same as the system of record, but often, especially during migration/coexistence phases, there can be more than one, which increases data sync complexity.

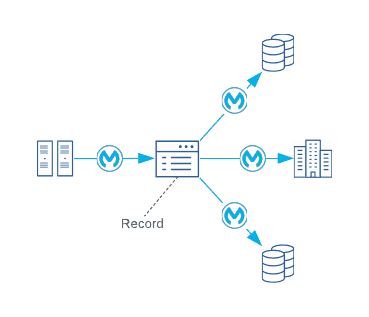

One-system of origin

In this scenario, a particular entity (e.g. Sales Order) originates and is maintained exclusively in one system of record, and data flows downstream in a one-way fashion to other systems. This type of data sync, also known as “happy path data sync,” is typically achievable when organizations have embarked on a process optimization initiative and there is upper-management sponsorship to enforce certain rules across different systems (e.g. enforce a single system of origin as opposed to “any system can create sales orders”).
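A one-way sync of this kind boils down to an idempotent upsert keyed by the source system’s ID. The Python sketch below is an illustration only: the dictionary standing in for the downstream system and the `source_id` field name are assumptions.

```python
def sync_downstream(source_records, target, external_id_field="source_id"):
    """Upsert records from the system of record into a downstream store.

    `target` is a dict keyed by the source id. Returns (created, updated).
    """
    created = updated = 0
    for record in source_records:
        key = record["id"]
        payload = {k: v for k, v in record.items() if k != "id"}
        payload[external_id_field] = key
        if key not in target:
            target[key] = payload
            created += 1
        elif target[key] != payload:
            target[key] = payload
            updated += 1
    return created, updated


# Hypothetical sales orders in the system of record and an empty downstream store.
orders = [{"id": "SO-1", "status": "open"}, {"id": "SO-2", "status": "open"}]
erp = {}

print(sync_downstream(orders, erp))   # first run creates both records
orders[0]["status"] = "shipped"
print(sync_downstream(orders, erp))   # second run updates only the changed one
```

Because data only flows one way, the downstream copy never needs conflict resolution: rerunning the sync with unchanged data is a no-op.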

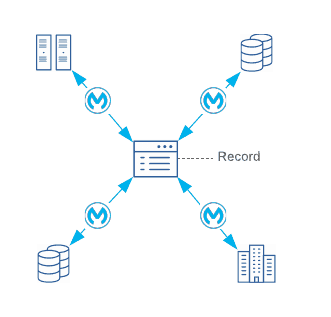

Multi-system of origin

This is the most complex (and common) scenario for data sync. Often, data originates in different systems. In such scenarios, an organization has to make a best effort to:

- Define a single system of record.

- Define deterministic matching rules to match a record originating in one system to potential duplicates in other related systems.

- Define fuzzy matching rules to complement edge cases of duplicate identification.

With the previous steps in place, organizations can implement a cross-reference pattern to define “integration external ids” and data lookups to sync data across systems.
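The matching steps listed above can be sketched in Python as follows. The specific rules are assumptions chosen for illustration: an exact match on normalized email as the deterministic rule, and name similarity (via the standard library’s `difflib`) combined with an equal postal code as the fuzzy rule, with an arbitrary 0.85 threshold.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def deterministic_match(a, b):
    """Exact match on a normalized, non-empty email."""
    return bool(a.get("email")) and normalize(a["email"]) == normalize(b.get("email", ""))


def fuzzy_match(a, b, threshold=0.85):
    """Similar names plus the same postal code."""
    score = SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()
    return score >= threshold and a.get("postal_code") == b.get("postal_code")


def find_duplicate(record, candidates):
    """Try deterministic rules first, then fall back to fuzzy rules."""
    for candidate in candidates:
        if deterministic_match(record, candidate):
            return candidate, "deterministic"
    for candidate in candidates:
        if fuzzy_match(record, candidate):
            return candidate, "fuzzy"
    return None, None


# Hypothetical records already present in a downstream system.
erp_accounts = [
    {"id": "ERP-1", "name": "Acme Networks Inc.", "email": "", "postal_code": "94105"},
    {"id": "ERP-2", "name": "Globex Corp", "email": "ops@globex.example", "postal_code": "10001"},
]

incoming = {"name": "ACME Networks Inc", "email": "", "postal_code": "94105"}
match, rule = find_duplicate(incoming, erp_accounts)
print(match["id"] if match else None, rule)
```

When a match is found, the two local IDs would be linked under one integration external ID in the cross-reference store. When none is found, the record is treated as new. Fuzzy matches are a common place to add a manual review step, since a threshold can never rule out false positives.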

In the next blog post, we are going to expand on these patterns and strategies, providing scenario-driven examples and showing how each would be implemented with the Anypoint Platform.

For more use cases for the Anypoint Platform, see the customer stories section of our website.