Download our Best Practices for Microservices whitepaper for a deeper look at the approach to microservices described in this post.

If IT today has a watchword, that word is “speed.” At the same time, IT is expected to protect the organization’s crown jewels (company finances and private customer records) and to keep services available and responsive. The message: Go faster, but don’t break anything important. Even Facebook has modified its motto on this front from “Move fast and break things” to “Move fast with stable infrastructure.”

In response to these pressures, enterprise IT teams are increasingly adopting the techniques used at cutting-edge web-scale companies like Amazon, Google, Netflix, and Facebook, collectively known as microservices. Put simply, microservices take slow-changing, monolithic functionality and break it into many small pieces that can be changed independently of one another. Change management moves away from “command and control” toward a highly automated deployment model that often resembles barely controlled anarchy. While this approach can look chaotic, it is backed by strong rigor around safety of change and responsiveness to the user.

Taken at face value, the microservices approach seems like a logical method to evolve a traditional enterprise architecture to satisfy the market pressures of speed. Deconstructing monolithic architecture into smaller, independent pieces seems to many organizations like a natural evolution of SOA. In many regards, this is true, but it can lead to putting the focus on the wrong areas.

SOA lessons learned

One of the major challenges enterprises faced when trying to gain value from SOA was a tendency to overthink the design of services and to become paralyzed by debates about service granularity and adherence to pure architectural principles. The design aspect of SOA was important, but many organizations concentrated so heavily on the early design stages that they had to rush through the operational aspects, and so failed to deliver on the promise of more efficient IT delivery. Today, as teams adopt microservices, operational considerations are once again being overlooked.

Much of the informational material available on microservices focuses on issues like “what is a microservice,” how to design one (often using techniques like domain-driven design), and whether or not something “is” a microservice. In my experience, the design of a microservice is the least interesting part of the approach. The behaviors are far more important. After all, it is not the design of a microservice that makes it interesting; it is the fact that it allows teams using the approach to deliver new features on an hourly or daily basis, rather than at the relatively glacial pace that characterizes traditional techniques. Does it matter if a microservice is “perfectly” designed if you can only push it into production once a week?

Realizing this means we can look at microservices from a different, more practical perspective. We should consider which aspects of the approach are essential to enabling safe, predictable hourly updates. Automated testing and deployment are obvious and essentially mandatory. It may be plausible to manually test and deploy microservices when you have only a handful of them. But the nature of the approach means that with success come hundreds or thousands of microservices, and at that scale the human error that manual deployment introduces is simply unacceptable.

Similarly, when dealing with such a large number of interrelated components, all of which depend on shared supporting services like interprocess communications, security, and monitoring, a “happy path” approach to testing the system doesn’t cut it. With any system this complex, all we know for sure is that things will break, they’ll break often, and they’ll often break in unpredictable ways. This observation leads successful microservice teams not only to focus on building microservices in defensive ways, but also to introduce frequent deliberate breakages in environments in order to confirm that the systems and teams are capable of resolving any problems rapidly and effectively. Netflix’s famous Simian Army is a concrete example of such an approach.
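The pairing of deliberate breakage with defensive calling can be sketched in a few lines of Python. This is only an illustration of the idea, not how Netflix's Simian Army works; `FlakyService`, `call_with_retry`, `lookup_price`, and the failure rates are hypothetical names and values chosen for the example.

```python
import random


class ServiceUnavailable(Exception):
    """Raised when the simulated downstream service fails."""


class FlakyService:
    """Wraps a function and makes a configurable fraction of calls fail."""

    def __init__(self, func, failure_rate, rng=None):
        self.func = func
        self.failure_rate = failure_rate
        self.rng = rng or random.Random()

    def __call__(self, *args, **kwargs):
        # Inject a failure before the real call, as a fault-injection tool might.
        if self.rng.random() < self.failure_rate:
            raise ServiceUnavailable("injected failure")
        return self.func(*args, **kwargs)


def call_with_retry(service, *args, attempts=3, fallback=None):
    """Try the call up to `attempts` times, then return `fallback`."""
    for _ in range(attempts):
        try:
            return service(*args)
        except ServiceUnavailable:
            continue
    return fallback


def lookup_price(sku):
    # Hypothetical stand-in for a real microservice call.
    return {"sku": sku, "price": 10}
```

Wrapping `lookup_price` in `FlakyService` in a test environment shows whether callers degrade gracefully (here, by returning a fallback) instead of relying on the happy path.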

Designing for failure is fundamental to the success of large-scale microservice deployments like Netflix or Google. However, those organizations typically don’t need to address another key challenge that enterprises adopting microservices must face: providing a stable, safe interface between the high-scale, highly complex, and rapidly evolving microservices environment and the existing enterprise assets that underpin the business. For an established enterprise, there must be a secure and manageable solution to connect microservices with the massive business value embedded within its monoliths.

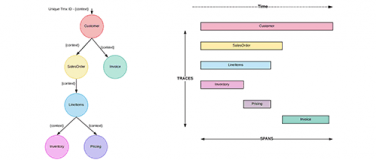

The complexity of a large-scale microservices architecture cannot be overstated. In many cases, these organizations cannot even draw a high-level architecture diagram of their deployment. Instead they have to resort to automatically generated dependency diagrams, colloquially referred to as “Death Star” diagrams because of their amusing resemblance to the planet-destroying space station from Star Wars. How can enterprise developers navigate this complexity, and at the same time bridge the gaps between these modern services and traditional systems? The answer, of course, is APIs.

APIs to the rescue

At MuleSoft, we understand the value of using APIs to provide a manageable interface between IT assets that work at different speeds — what Gartner has referred to as “bimodal IT.” Well-managed APIs provide a robust option to manage the different speeds of change happening on both sides.

In a microservices approach, managed APIs are not only necessary, they are assumed. When you look closely at the Death Star diagrams, you can see that the fundamental interconnect point is the API. It becomes possible to visualize these complex networks of logical dependency and impact only because each microservice exposes a well-known, versioned, and managed API endpoint. When APIs are managed effectively, they help ensure safe changes, regardless of the speed of deployment exhibited by the components on either side of the API contract.
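To make the idea of dependency and impact concrete, here is a minimal Python sketch. It assumes each service declares which other services' APIs it calls; the `impacted_services` function and the service names in the usage note are hypothetical, and real tooling would derive this graph from API management data rather than a hand-written dictionary.

```python
from collections import defaultdict, deque


def impacted_services(dependencies, changed):
    """Return every service that directly or transitively calls `changed`.

    `dependencies` maps a service name to the services whose APIs it calls.
    """
    # Invert the edges: for each API provider, who consumes it?
    consumers = defaultdict(set)
    for service, providers in dependencies.items():
        for provider in providers:
            consumers[provider].add(service)

    # Breadth-first walk outward from the changed service.
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in consumers[current]:
            if consumer not in seen and consumer != changed:
                seen.add(consumer)
                queue.append(consumer)
    return seen
```

For example, given `{"checkout": ["cart", "payments"], "cart": ["catalog"], "search": ["catalog"]}`, a change to `catalog` touches `cart` and `search` directly and `checkout` transitively.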

APIs can also be used to define and manage your microservices. At MuleSoft, for example, we use a framework called Khartos to simplify the development of our Node.js-based microservices. Khartos essentially forms an opinionated microservice container that exposes the microservice contract as an API specified in RAML, the open, easy, and reuse-centric API specification language, and employs dependency injection to automatically configure key aspects of the microservice to naturally “plug in” to our platform services. This means the developer can define the microservice “API first” without any overhead, speeding up the development cycle while also getting a lot of capabilities “for free.”

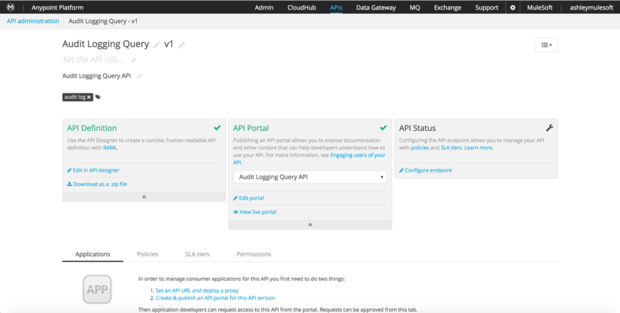

MuleSoft’s Anypoint Platform for APIs puts this same power into the hands of enterprise teams, allowing developers who are working on traditional monolithic stacks or microservice architectures to design, manage, and collaborate around APIs regardless of the speed of change. Essentially developers are able to build application networks using APIs to connect applications, data, and devices, making them pluggable and reusable.

One of the benefits gained from managing microservices via APIs is achieving lightweight, developer-friendly governance, in particular around:

- Service discovery and documentation: API portals provide self-service documentation, consoles, SDK client generation, and programmable notebooks to allow developers to discover and learn how to consume the API for a microservice.

- Quality of service: API gateways proxy RESTful communication between microservices, providing a central point to ensure that policies, like security and throttling, are correctly applied across all microservices.

- Fault tolerance: Cascading failure is a risk with microservices architecture. Mule runtimes deployed as API gateways can act as “circuit breakers” to quickly detect failing services and isolate them before the failure cascades.

- Security: Security should be part of the development process, not a feature that developers have to remember to repeat. API gateways provide out-of-the-box policies for security, including OAuth 2.0, to ensure that the correct security policies are applied to a set of APIs without the developer having to think too hard about it.
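The circuit-breaker behavior mentioned in the list above can be sketched generically in Python. This is not Mule's implementation; the class name, the thresholds, and the injectable `clock` parameter are assumptions made for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call rejected")
            # Cool-down elapsed: allow one trial call (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of queuing up behind a struggling service, which is what keeps one failure from cascading through its consumers.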

Managing microservices via APIs has two important benefits. On one hand, you provide tooling (such as Khartos) that makes it easy to adopt good practices. On the other hand, you use API management to ensure policies are applied consistently and correctly, without having to manage many versions of client libraries in the microservices themselves. This further reinforces the approach of “move fast, but with stable infrastructure.”
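As an illustration of a policy that is easier to apply once at a gateway than in every client library, here is a minimal token-bucket throttle in Python. The token bucket is one common throttling technique, chosen here as an assumption; the text does not say which algorithm any particular gateway uses, and the `TokenBucket` class and its parameters are hypothetical.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` and a steady `rate` of requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if a request may proceed, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would keep one bucket per client or per API and reject or delay a request whenever `allow()` returns `False`, so individual microservices never need to carry throttling logic.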

Governance can be further enabled through automation and continuous delivery, which ensures the application of correct standards in a rapid and seamless manner.

Continuous delivery of composed applications

Getting teams into a habit of continuously releasing software aligns with agile development principles. In terms of microservices applications, continuous delivery also means continuous integration. In applications that are composed of many services, it is critical to ensure that the composition actually works when the software is built. MuleSoft’s Anypoint Platform fully integrates into a continuous delivery strategy, both for the implementation and management of API-driven microservices:

- API-defined and event-driven microservices built with Anypoint Platform can be declaratively tested with the MUnit framework, MuleSoft’s automated integration and unit testing framework.

- Mule applications are agnostic to version control systems and use Maven as their underlying build system. As a result, Mule application builds can integrate with virtually any automated build system, including Jenkins and Bamboo. Testing and application analytics services like TestFlight and Crashlytics are similarly easy to integrate into the Mule SDLC (software development lifecycle).

Operational automation is crucial in achieving continuous delivery. MuleSoft’s SDLC framework integrates building, packaging, deploying, and managing Mule microservices into a variety of deployment ecosystems.

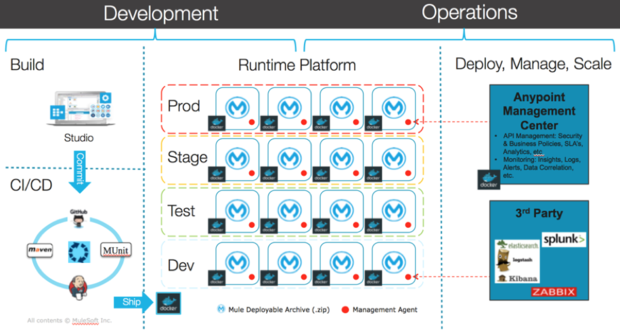

Deploying container technology

Infrastructure automation tools, such as Chef and Puppet, and virtualization technology, such as VMware and Xen, can greatly accelerate the deployment of monolithic application stacks. Recent advances in container technology, particularly frameworks such as Docker and Rocket, provide the foundation for consistent, repeatable deployment of applications without necessarily provisioning new physical or virtual hardware. Numerous benefits arise from this deployment paradigm, including increased compute density within a single operating system, trivially simple autoscaling, and container packaging and distribution.

These advantages make containers the ideal choice for microservice distribution and deployment. Container technologies follow the microservice philosophy of encapsulating a single piece of functionality and doing it well. They also give microservices the ability to scale elastically in response to increasing demand.

However, there is never a free lunch. Managing a container ecosystem comes with its own challenges. For instance, containers running on the same host must be bound to ephemeral ports to avoid conflicts; container failure must be addressed separately; and containers need to integrate with front-end networking infrastructure such as load balancers, firewalls, and perhaps software-defined networking stacks. Platform-as-a-service technologies, such as Pivotal Cloud Foundry and Mesosphere DCOS, are emerging to address these needs.
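The ephemeral-port point can be illustrated at the process level with Python's standard `socket` module: binding to port 0 asks the operating system for any free port, so two instances on one host never collide. This is a sketch of the principle only; the `bind_ephemeral` helper is hypothetical, and a container platform applies the same idea when it maps container ports to free host ports.

```python
import socket


def bind_ephemeral(host="127.0.0.1"):
    """Bind a listening TCP socket to an OS-assigned port and return (socket, port)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Port 0 asks the operating system to pick any free port.
    sock.bind((host, 0))
    sock.listen()
    port = sock.getsockname()[1]
    return sock, port
```

Because the port is only known after binding, something else (a service registry or the load balancer configuration) has to be told where each instance ended up, which is part of the management overhead described above.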

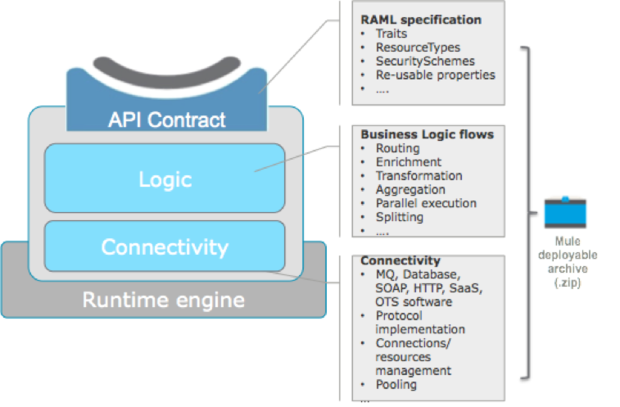

Anypoint Platform’s runtime components support a variety of deployment mechanisms, ranging from traditional, multitenant, cluster-based deployment to a Mule worker packaged and deployed as a container in a PaaS framework. Mule runtimes deployed as API gateways can be similarly packaged and deployed as proxies for a single microservice or, more commonly, deployed as an infrastructure component of a PaaS to govern all microservice API interaction. The Anypoint Platform runtime (aka Mule) has the following attributes that make it a perfect fit for containerization:

- The runtime is distributed as a standalone Zip file, with a JVM being the only dependency.

- It runs as a single, low-resource process. For instance, it can easily be embedded in a resource-constrained device like a Raspberry Pi.

- It has no database or data storage requirements. Logging can be configured to standard output and standard error.

The Mule runtime can execute a microservice application artifact. The application artifact is a self-contained Zip file that contains all the required libraries and configuration. This makes it easy to bundle or layer runtime installation and application artifacts as a single container image.

When running in a microservices model, the Mule runtime and application are combined in a container. The Mule application container image can be layered atop the Mule runtime container image. Each service is a thin layer atop the runtime container, overriding MULE_INSTALL_DIR/apps. In this model, the Mule microservice lifecycle (start/stop) is bound natively to the container’s lifecycle, making it easy to work with container orchestration management systems.

The Anypoint Runtime Manager provides a single operational plane to manage both the microservice implementations built with the Anypoint Platform and the API gateways. The Anypoint Runtime Manager can either be hosted by MuleSoft or deployed as part of the management layer of a PaaS infrastructure, providing an operational API to orchestrate microservice deployments, API monitoring, analytics-based promotion, and other concerns.

There is no question that microservices are here to stay. The benefits of rapid, safe microservices deployments are too great to ignore. However, no approach is the right fit for all use cases. One of the major challenges will be learning to mix and match microservices with the many other architectural styles in the enterprise.

Enterprises must also learn to manage the speed and flexibility that microservices enable and the complexity that microservices create. Microservices encourage much more frequent iterations than traditional applications, with production changes in the tens to hundreds a day being common. The large numbers of microservices that make up successful deployments require an effective, automated means to manage them. Finally, few enterprises can completely replace their existing assets and investments with all-new, microservice-based architectures, so a coexistence strategy is required.

Managed APIs provide an effective, standardized option to manage microservices at scale, while also supporting and leveraging the rest of the enterprise’s assets. The value of APIs as the practical means to manage microservice complexity in the composable enterprise has never been clearer.

To read more about microservices, check out our Best Practices for Microservices whitepaper for design principles and how Anypoint Platform can help you implement microservices best practices in your organization.

This post originally appeared in InfoWorld on August 25, 2016.