Welcome back! Continuing the series on new batch features in Mule 3.8, this post covers the second most common request: being able to configure the batch block size.

What’s the block size?

In a traditional online processing model, each request is usually mapped to a worker thread. Regardless of the processing being synchronous, asynchronous, one-way, request-response, or even if the requests are temporarily buffered before being processed (like in the Disruptor or SEDA models), servers usually end up in this 1:1 relationship between a request and a running thread.

However, batch is not an online tool. It can do near real-time, but the fact that all records are first stored in a persistent queue before the processing phase begins disqualifies it from that category. Not surprisingly, we quickly realized that this traditional threading model didn’t actually apply to batch.

Batch was designed to work with millions of records. It was also designed to be reliable, meaning that transactional persistent storage was needed. The problem is that if you have 1 million records to place in a queue for a batch job that has 3 steps, in the best-case scenario you’re looking at 3 million I/O operations to take and re-queue each record as it moves through the steps. That’s far from efficient, so we came up with the concept of block size. Instead of queueing each record individually, we queue blocks of 100 records, reducing the I/O overhead at the cost of higher working memory requirements.
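To put numbers on that, here is a quick back-of-the-envelope sketch in Python. It only models the best case described above (one take-and-re-queue operation per queued item per step), and `queue_operations` is a made-up helper for illustration, not anything that exists in Mule.

```python
import math


def queue_operations(records: int, steps: int, block_size: int = 1) -> int:
    """Best-case count of take-and-re-queue operations for a whole job.

    Assumption: every queued item (a single record, or a block of records)
    costs exactly one I/O operation per step.
    """
    queued_items = math.ceil(records / block_size)
    return queued_items * steps


# Queueing each record individually: 1 million records, 3 steps.
per_record = queue_operations(1_000_000, steps=3)
print(per_record)  # 3000000

# Queueing blocks of 100 records instead.
per_block = queue_operations(1_000_000, steps=3, block_size=100)
print(per_block)  # 30000
```

Under those assumptions, blocks of 100 cut the queue traffic by a factor of 100, which is the trade the block size makes against working memory.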

I/O was not the only problem though. The queue -> thread -> queue hand-off process also needed optimization. So, instead of having a 1:1 relationship between each record and a working thread, we have a block:1 relationship. For example:

- By default, each job has 16 threads (you can change this using a threading-profile element).

- Each of those threads is given a block of 100 records.

- Each thread iterates through that block, processing each record.

- Each block is queued back and the process continues.

Again, this is great for performance but comes at the cost of working memory. Although you’re only processing 16 records in parallel, you need enough memory to move a grand total of 1,600 records (16 threads × 100 records) from persistent storage into RAM.
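The block:1 hand-off can be sketched roughly like this. It is a simplified, in-memory Python illustration and not how Mule implements it: there is no persistent queue here, and `split_into_blocks`, `process_block`, `run_step` and `records_in_memory` are hypothetical names chosen for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(records, block_size=100):
    """Group records into consecutive blocks of at most block_size."""
    return [records[i:i + block_size] for i in range(0, len(records), block_size)]


def process_block(block, step):
    """One thread iterates through its whole block, record by record."""
    return [step(record) for record in block]


def run_step(records, step, threads=16, block_size=100):
    """Hand each block to a worker thread, then collect the processed blocks."""
    blocks = split_into_blocks(records, block_size)
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # pool.map keeps the blocks in their original order.
        processed = list(pool.map(lambda block: process_block(block, step), blocks))
    # "Queue back" the blocks; here that just means flattening them again.
    return [record for block in processed for record in block]


def records_in_memory(threads=16, block_size=100):
    """Records held in RAM when every thread is working on a full block."""
    return threads * block_size


print(records_in_memory())  # 1600
```

Note that only `threads` records are actually being processed at any instant, while `threads * block_size` records have to sit in memory.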

Why 100?

The decision to use 100 as the block size came from performance analysis. We took a set of applications we considered to be representative of the most common batch use cases and tested them with records which varied from 64KB to 1MB, and datasets which went from 100,000 records up to 10 million. 100 turned out to be a nice convergence number between performance and reasonable working memory requirements.

The problem

The problem with the design decisions above came when users started to use batch for cases which exceeded the original intent. For example:

- Some users like the programming model that batch offers, but don’t really have a ton of records to process. It became common to hear about users trying batch with as few as 200 records, or even fewer than a hundred. Because the block size is set at 100, a job with 200 records will result in only 2 records being processed in parallel at any given time. If you hit it with 100 records or fewer, then processing becomes sequential.

- Other users tried to use batch to process really large payloads, such as images. In this case, the 100 block size puts a heavy toll on the working memory requirements. For example, if the average image is 3MB in size, a default threading-profile setting would require roughly 4.7GB of working memory (16 threads × 100 records × 3MB) just to keep the blocks in memory. These kinds of use cases could definitely use a smaller block size.

- And finally, there’s the exact opposite of the case above: jobs that have 5 million records whose payloads are so small you could actually fit blocks of 500 in memory with no problem. A larger block size would give these cases a huge performance boost.
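The arithmetic behind those three cases is easy to check. The following Python sketch uses two hypothetical helpers, `effective_parallelism` and `working_memory_mb`, and assumes the default 16 threads and that a block is never shared between threads.

```python
import math


def effective_parallelism(records, block_size=100, threads=16):
    """How many records can be processed at once: one per busy thread."""
    blocks = math.ceil(records / block_size)
    return min(threads, blocks)


def working_memory_mb(avg_record_mb, block_size=100, threads=16):
    """Memory needed to hold one full block per thread, in MB."""
    return threads * block_size * avg_record_mb


# Case 1: small jobs starve the thread pool.
print(effective_parallelism(200))                 # 2
print(effective_parallelism(100))                 # 1 (sequential)
print(effective_parallelism(200, block_size=10))  # 16

# Case 2: large payloads, such as 3MB images.
print(working_memory_mb(3))                 # 4800
print(working_memory_mb(3, block_size=10))  # 480

# Case 3: 5 million tiny records mean fewer blocks to queue per step.
print(math.ceil(5_000_000 / 100))  # 50000
print(math.ceil(5_000_000 / 500))  # 10000
```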

The solution

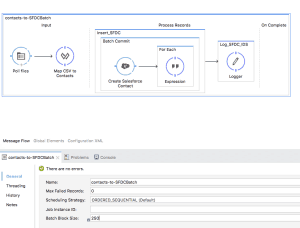

The solution is actually quite simple. We simply made the block size configurable:

As you can see in the image above, the batch job now has a “Batch Block Size” property that you can configure. The property is optional and defaults to 100, so nothing changes unless you set it.

The feature is simple but powerful, since it lets you handle all of the cases above. It has only one catch: you need to understand how block sizes work in order to set a value that actually helps. You also need to do some due diligence before moving this setting into production. We still believe that the default of 100 works best for most cases. If you believe that your particular case is better served by a custom size, make sure to run comparative tests with different values in order to find your optimum size. If you’ve never perceived this as a problem, then just keep the default value and be happy.
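If you do decide to run those comparative tests, a tiny harness along these lines can keep the comparison honest. This is only a sketch in Python: `run_job` is a hypothetical callable that you would have to write yourself (for example, one that triggers your job with a given block size and waits for it to finish), and `compare_block_sizes` is a name made up for this example.

```python
import time


def compare_block_sizes(run_job, candidates, repeats=3, clock=time.perf_counter):
    """Time run_job(block_size) for each candidate block size.

    Returns (best_block_size, timings), where timings maps each candidate
    to its median elapsed time in seconds.
    """
    timings = {}
    for block_size in candidates:
        samples = []
        for _ in range(repeats):
            start = clock()
            run_job(block_size)
            samples.append(clock() - start)
        # Use the median so a single slow run does not skew the result.
        samples.sort()
        timings[block_size] = samples[len(samples) // 2]
    best = min(timings, key=timings.get)
    return best, timings
```

Calling something like `compare_block_sizes(run_job, [50, 100, 200, 500])` against a production-like dataset gives you one number per candidate. Keep an eye on memory usage while it runs, since the fastest block size is not necessarily one your heap can afford.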

See you next time!