
This post brought to you by Christopher Jay. The original article can be found on The Australian Financial Review.

In the ever-evolving world of the internet, transferring data between different internet locations and organisational databases is becoming a lot easier with the dramatic spread of a particular set of software modules designed to standardise routine connection tasks.

In the past, much of the laborious work of connecting various systems was done with hand-built strings of software, basically re-inventing the wheel in different shapes, sizes and colours.

Enter a 21st-century set of software companies specialising in providing standardised tools for connecting different applications, data and devices using common software sets to eliminate the endless duplication of effort.

This involves the familiar concept of application programming interfaces, or APIs. These are bundles of separate routines, protocols, security software and software assembly tools designed to interact with computer devices, internet communication systems and organisational databases to speed up and simplify communications.

A conversation about the fabulous new opportunities these approaches are providing is typically peppered with references to particular APIs and the huge advances in convenience and services they are providing.

From a government point of view, there are three major implications for communications and internet policy.

Integration

The first is the need to ensure the internet specialists in each public-sector department and agency are thoroughly briefed on advances in connection APIs which can allow big improvements in the range of services from government sources, and refinement of operating procedures for computer service installations.

The second is a continuing effort to extend the effectiveness of security systems to counter the constantly expanding activities of foreign governments, criminal enterprises and local computer pests bent on information theft, monetary fraud and operational disruptions, such as distributed denial of service attacks.

The third is steadfastly maintaining or even increasing the rate of broadband modernisation to cope with the sizeable increases these spreading connection or Web APIs will bring to overall levels of traffic.

The current, slightly bizarre Wi-Fi arrangements are no way to run a long-term, fast internet system in a high-tech economy. They offer a melange of multiple possible carriers and an anarchic chaos of access passwords to systems, some of which charge and many of which don’t, with the whole thing often clogging up in the evenings when the computer-games-playing crew cut in.

An example of how web APIs can work would be a social interaction or business presentation exercise which can incorporate photographs, video clips, verbal material, print or graphical presentations, user information, dynamic live feeds (for example, from a conference, speech or broadcast) and environmental information, such as current temperature, wind speed and humidity.

All this has been notionally possible for some time, using the previously developed set of routines. But the practical difficulties and impediments to date are readily apparent in the constant complaints on social media about links that don’t work, video that doesn’t play, audio that stays silent and presentations that run in fits and starts.

Catalyst

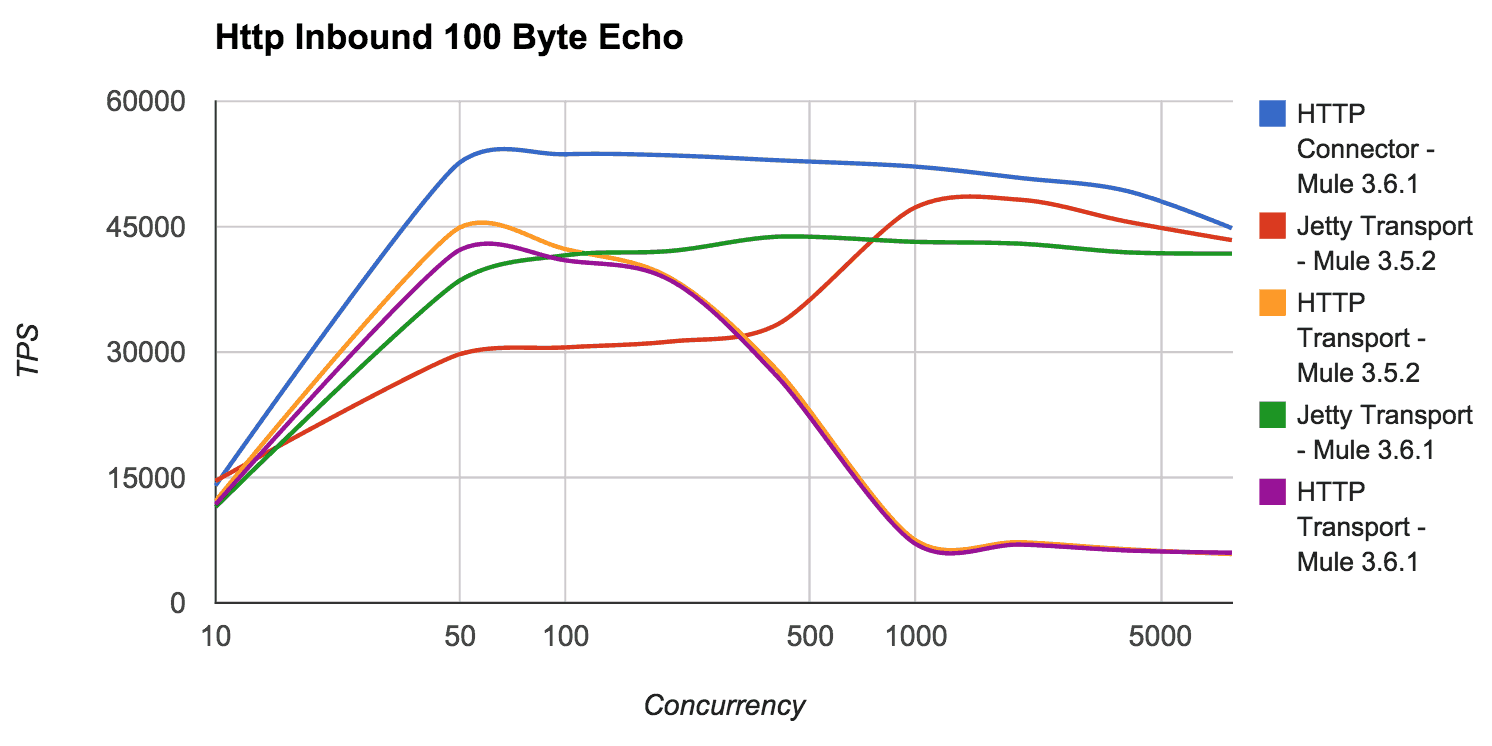

An example of the current set of Web API proselytisers is the San Francisco-based multinational Mulesoft, founded by Ross Mason as recently as 2006 and now running to nearly 500 people with the usual string of additional offices in Atlanta, New York, Buenos Aires, London, the Netherlands, Munich, Sydney, Hong Kong and Singapore.

Instead of custom-coding by hand, Mulesoft developed platforms which provided a range of pre-prepared functions which could be quickly and efficiently strung together to provide the desired connections.
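To make the idea of stringing pre-prepared functions together concrete, here is a minimal sketch in Python. It is not Mulesoft's product or API. The helper names (`rename_field`, `uppercase_field`, `connect`) and the sample record are hypothetical, chosen only to show how small reusable steps can be composed into one connection instead of being hand-coded each time.

```python
from functools import reduce
from typing import Callable, Dict

Record = Dict[str, str]
Step = Callable[[Record], Record]


def rename_field(old: str, new: str) -> Step:
    """Reusable step (hypothetical): rename one field, leave the rest alone."""
    def step(record: Record) -> Record:
        return {(new if key == old else key): value
                for key, value in record.items()}
    return step


def uppercase_field(name: str) -> Step:
    """Reusable step (hypothetical): upper-case one field if it is present."""
    def step(record: Record) -> Record:
        out = dict(record)
        if name in out:
            out[name] = out[name].upper()
        return out
    return step


def connect(*steps: Step) -> Step:
    """String the given steps together, applied left to right."""
    def pipeline(record: Record) -> Record:
        return reduce(lambda acc, step: step(acc), steps, record)
    return pipeline


# Assemble a connection between two imaginary systems from existing parts.
crm_to_billing = connect(
    rename_field("surname", "last_name"),
    uppercase_field("country"),
)

result = crm_to_billing({"surname": "Nguyen", "country": "au"})
print(result)
```

A second connection between two other systems would reuse the same steps in a different order or with different arguments, which is the duplication of effort the approach is meant to remove.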

“Mobility, cloud, big data and the internet of things are transforming business and creating new opportunities,” Mulesoft company material points out.

“Yet companies are only starting to tap the vast potential. To truly realise the promise of this new era, these disparate technologies must all be connected.

“APIs are the catalyst for this change, unleashing information and eliminating the friction of integration for unprecedented speed and agility.”

These big advances from the newly emerging web API companies will be particularly important in coping with the rapid continuing spread of interactions between central databases and mobile equipment in the field, or even in the office, in the form of smartphones, tablets and laptop computers.

Chief Technical Officers implementing improved web communications need to distinguish between simple publication of standardised data (for example, train timetables, city populations, addresses) and applications where the device, or the user separately, needs to be identified and authenticated as cleared for the relevant access.
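The distinction can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design. The resource names, the sample data, the `API_KEYS` table and the `handle_request` function are all hypothetical, and a shared API key stands in for whatever authentication scheme an organisation actually uses.

```python
import hmac
from typing import Optional, Tuple

# Standardised data that anyone may read (hypothetical sample values).
PUBLIC_RESOURCES = {
    "train_timetable": "Central to Airport: 07:05, 07:20, 07:35",
}

# Data that requires an identified, authenticated caller (hypothetical).
PROTECTED_RESOURCES = {
    "customer_account": "Account 1042: balance $310.00",
}

# Assumed key table mapping an API key to the device or user it identifies.
API_KEYS = {"demo-key-123": "field-tablet-7"}


def identify_caller(api_key: Optional[str]) -> Optional[str]:
    """Return the caller's identity, or None if the key is missing or unknown."""
    if api_key is None:
        return None
    for known_key, caller in API_KEYS.items():
        # Constant-time comparison avoids leaking key contents through timing.
        if hmac.compare_digest(known_key.encode(), api_key.encode()):
            return caller
    return None


def handle_request(resource: str,
                   api_key: Optional[str] = None) -> Tuple[int, str]:
    """Serve public data to anyone; serve protected data only when authenticated."""
    if resource in PUBLIC_RESOURCES:
        return 200, PUBLIC_RESOURCES[resource]
    if resource in PROTECTED_RESOURCES:
        if identify_caller(api_key) is None:
            return 401, "authentication required"
        return 200, PROTECTED_RESOURCES[resource]
    return 404, "unknown resource"


print(handle_request("train_timetable"))
print(handle_request("customer_account"))
print(handle_request("customer_account", api_key="demo-key-123"))
```

The public branch never looks at the key, so published data stays simple to consume, while the protected branch refuses to answer until the caller has been identified.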

Read the entire article on The Australian Financial Review »